How AI Can Be Used in Medical Software — Practical Guide for Decision-Makers

Artificial intelligence (AI) is reshaping medical software — from faster, more accurate diagnoses to operational efficiencies, personalized medicine, and smarter patient engagement. But the promise comes with unique constraints: clinical validation, patient safety, data governance and regulatory oversight. This guide explains where AI adds the most value, how to build it responsibly into medical products, how to validate and measure impact, and how to operationalize AI safely in regulated healthcare environments.

1. The promise of AI in medicine — why now?

Three technological and social trends have made AI adoption in medical software practical and urgent:

- Data scale and maturity: EHRs, high-resolution imaging, genomic sequences, and continuous device telemetry produce rich datasets suitable for statistical learning.
- Model capability: Advances in deep learning, transfer learning and transformer architectures enable pattern recognition that matches, and on some narrow tasks exceeds, human performance in imaging, clinical-text NLP, and sequence modeling of time-series health data.
- Operational tooling: MLOps platforms, cloud compute, and standardized data formats (FHIR, DICOM) simplify integration and deployment into clinical workflows.

Together these trends mean AI can move from proof-of-concept labs to production systems that assist clinicians, improve outcomes, and reduce costs — but only when combined with rigorous validation, safety-first design and clear governance.

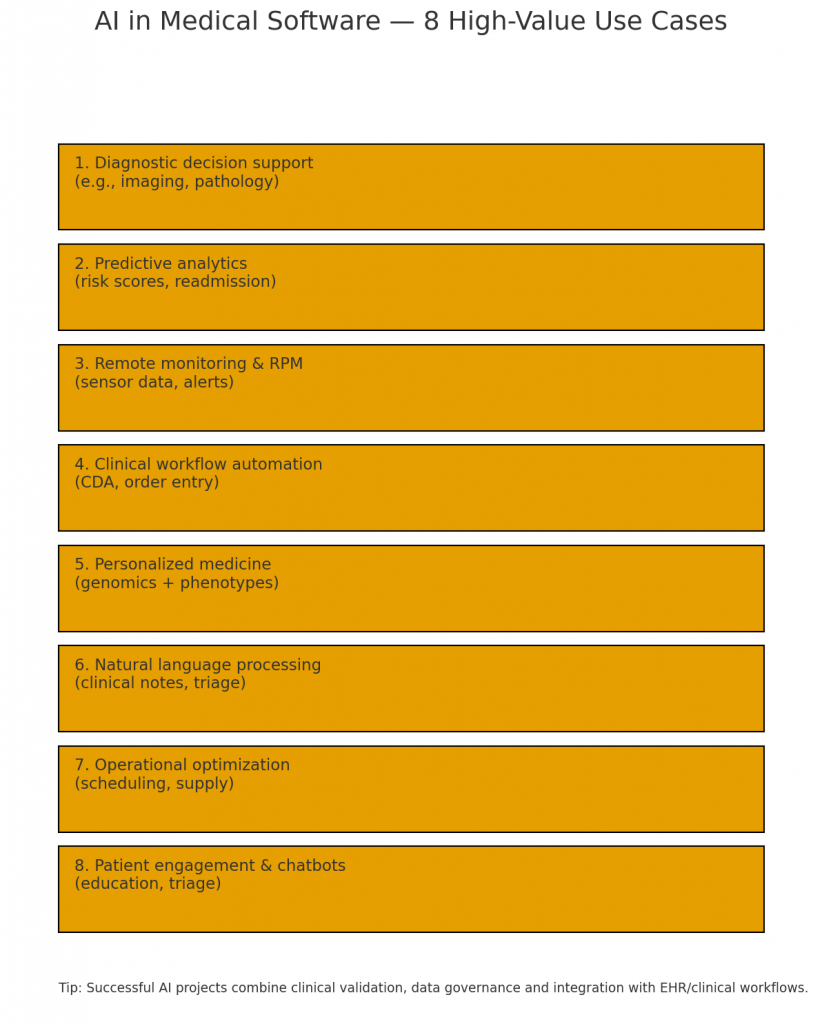

2. High-value clinical use cases

AI’s clearest early wins in clinical settings are where (a) large labeled datasets exist or can be curated, (b) measurable outcomes are available, and (c) AI supports — not replaces — clinician decisions. Key clinical use cases include:

2.1 Diagnostic decision support (imaging and pathology)

Computer vision models can triage images (X-ray, CT, MRI, retinal scans) and flag anomalies for radiologist review. For pathology slides, models detect regions of interest and estimate grading metrics. Example value: faster triage of urgent cases and increased sensitivity for subtle findings.

2.2 Predictive risk modeling

ML models predict readmission risk, sepsis onset, or deterioration in hospitalized patients by combining vitals, labs, medications and history. Hospitals use these predictions to prioritize monitoring and intervene earlier.

2.3 Genomics and personalized medicine

Variant interpretation and polygenic risk scoring can guide drug choice, dosing and screening intervals. AI helps integrate genomic data with EHR phenotypes to identify treatment responders.

2.4 Clinical decision pathways & order set optimization

AI can suggest evidence-based order sets and checklists tailored to a patient’s profile—reducing variation and aligning care with guidelines.

2.5 Pathology and microscopy automation

Beyond detecting regions of interest, models can quantify cell types, mitotic rates, and other histopathological features in digitized slides to support pathologists.

2.6 Rare disease detection and phenotyping

By aggregating subtle signals across records, AI helps detect patterns suggestive of rare diseases, shortening diagnostic odysseys.

These use cases combine technical feasibility with clinical importance, which makes them top priorities for product teams.

3. Operational and administrative applications

AI’s ROI in healthcare often comes faster from operations than from clinical breakthroughs. Examples include:

3.1 Scheduling and capacity optimization

Predictive models forecast no-shows, clinic demand spikes, and staff availability. Optimized scheduling reduces wait times and improves resource utilization.

3.2 Revenue cycle and coding automation

NLP models extract diagnosis and procedure codes from clinical notes, accelerating billing and reducing denied claims.

3.3 Supply chain and inventory management

Forecasting demand for high-value supplies, optimizing reorder points, and detecting anomalies in consumption patterns reduce costs and outages.

3.4 Clinical documentation assistance

Speech-to-text and NLP summarize encounters, extract structured data for registries, and reduce clinician administrative burden — improving satisfaction and throughput.

Operational wins typically require integrating predictions with workflows (scheduling UI, billing systems), and building clear human-in-the-loop approvals to prevent automation errors from propagating.

4. Patient-facing experiences and digital therapeutics

AI increases accessibility and personalization in patient-facing software:

4.1 Remote Patient Monitoring (RPM) and home care

Models analyze continuous sensor data (wearables, home devices) to detect deterioration or nonadherence, triggering care manager interventions.

4.2 Chatbots and triage assistants

Conversational AI performs symptom triage, schedules appointments, or routes patients to urgent care based on algorithmic risk thresholds.

4.3 Personalized education and engagement

Adaptive content and reminders informed by behavior models improve medication adherence and chronic disease management.

4.4 Digital therapeutics

AI-driven interventions (e.g., adaptive CBT apps) can personalize therapy content and dose, improving outcomes in behavioral health and chronic disease.

Patient-facing AI should be conservative about risk: prioritize safety and explainability, and provide clear escalation paths to human providers.

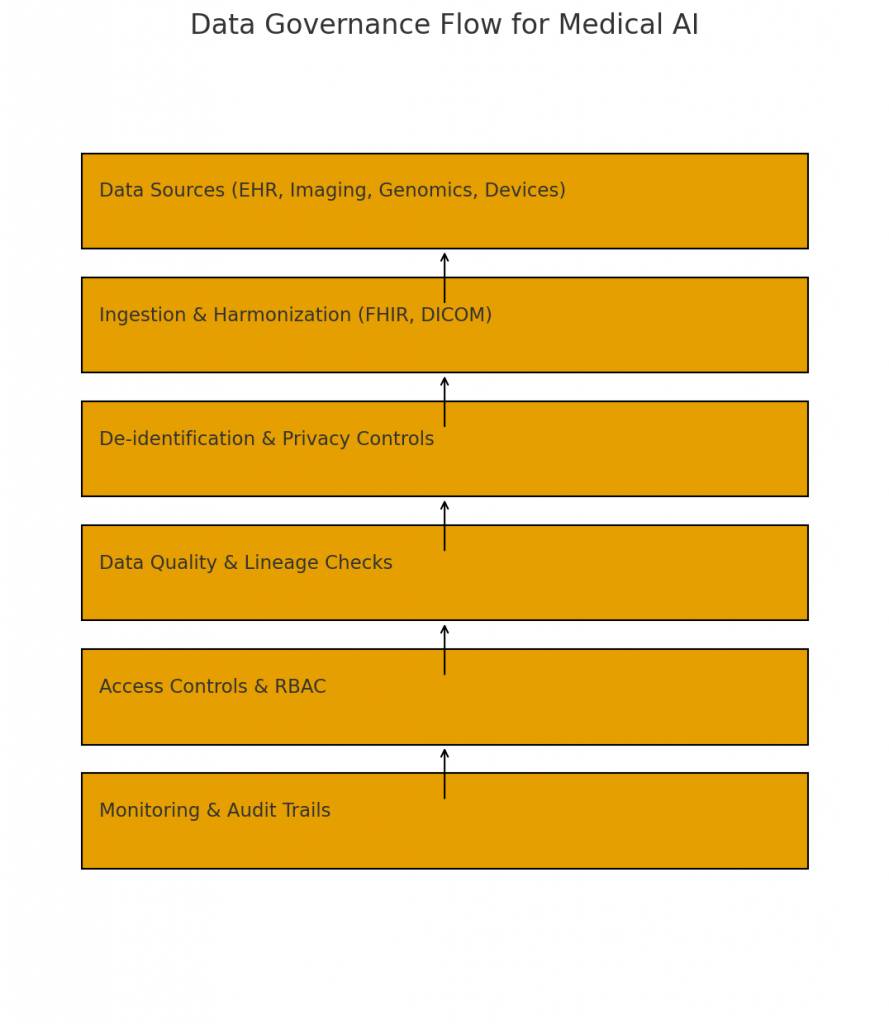

5. Foundations: data, interoperability and standards

AI is only as good as its data. Foundational considerations include:

5.1 Data quality and provenance

Medical data is noisy and heterogeneous. Invest in data cleaning, de-duplication, harmonization and lineage tracking. Maintain provenance metadata (where the data came from and who approved its use).

5.2 Standards and interoperability

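Use standards such as FHIR for clinical data exchange and DICOM for imaging. Shared terminologies (LOINC for laboratory observations, SNOMED CT for clinical concepts, ICD for diagnoses) keep features semantically consistent across sources, which helps models trained on one dataset generalize to others.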
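To make the interoperability point concrete, here is a minimal Python sketch that pulls a LOINC-coded numeric result out of a FHIR R4 `Observation` resource serialized as JSON. The function name `extract_loinc_value` and the local code system URL are illustrative assumptions; a production pipeline would more likely use a FHIR client library and also handle components, reference ranges and unit conversion.

```python
import json
from typing import Optional, Tuple

LOINC_SYSTEM = "http://loinc.org"

def extract_loinc_value(observation_json: str) -> Optional[Tuple[str, float, str]]:
    """Return (LOINC code, numeric value, unit) from a FHIR R4 Observation, or None."""
    obs = json.loads(observation_json)
    if obs.get("resourceType") != "Observation":
        return None
    # Observation.code may carry several codings (local + standard); keep the LOINC one.
    codings = obs.get("code", {}).get("coding", [])
    loinc = next((c.get("code") for c in codings if c.get("system") == LOINC_SYSTEM), None)
    quantity = obs.get("valueQuantity")
    if loinc is None or quantity is None or "value" not in quantity:
        return None  # not a numeric LOINC-coded result; route to manual mapping instead
    return loinc, float(quantity["value"]), quantity.get("unit", "")

# Illustrative resource: LOINC 2345-7 is glucose in serum or plasma.
example = json.dumps({
    "resourceType": "Observation",
    "status": "final",
    "code": {"coding": [
        {"system": "http://hospital.example/lab-codes", "code": "GLU"},  # hypothetical local code
        {"system": LOINC_SYSTEM, "code": "2345-7"},
    ]},
    "valueQuantity": {"value": 95, "unit": "mg/dL",
                      "system": "http://unitsofmeasure.org", "code": "mg/dL"},
})
print(extract_loinc_value(example))  # ('2345-7', 95.0, 'mg/dL')
```

Keeping the local code alongside the LOINC code mirrors how many source systems send both; the mapping step simply prefers the standard one.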

5.3 Labeling & ground truth

High-quality labels are pivotal. For imaging tasks, collect annotations from multiple experts and adjudicate disagreements to handle inter-rater variability. For outcomes, define endpoints precisely and account for censoring and competing risks.
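Inter-rater variability is often summarized with Cohen's kappa, which corrects the raw agreement rate for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The sketch below computes it for two readers; the labels are made up, and for more than two annotators a statistic such as Fleiss' kappa would be the usual choice.

```python
from collections import Counter
from typing import Hashable, Sequence

def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Chance-corrected agreement between two annotators labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected if both raters labelled at random with their own label frequencies.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout; kappa is undefined, report 1
    return (observed - expected) / (1 - expected)

reader_1 = ["nodule", "nodule", "none", "none", "nodule", "none", "none", "none"]
reader_2 = ["nodule", "none", "none", "none", "nodule", "none", "nodule", "none"]
print(round(cohens_kappa(reader_1, reader_2), 3))  # 0.467
```

Here the readers agree on 75% of images, but because both label most images "none", chance alone would produce about 53% agreement, so kappa is only moderate. Low kappa is a signal that adjudication or clearer labelling guidelines are needed.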

5.4 Bias & representativeness

Check datasets for demographic imbalances and clinical practice variations. A model trained in one health system may underperform in another — plan external validation.

5.5 Privacy & de-identification

Apply robust de-identification and consider synthetic data where possible. Use privacy-preserving techniques (federated learning, differential privacy) to mitigate data sharing risks.
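As one example of a privacy-preserving technique, the Laplace mechanism from differential privacy adds noise with scale sensitivity / epsilon before an aggregate is released. The sketch below assumes a simple count query (sensitivity 1) and uses only Python's `random` module; naive floating-point noise like this has known subtle leaks, so a real deployment should rely on a vetted differential-privacy library and track the cumulative privacy budget.

```python
import random
from typing import Callable, Iterable

def dp_count(values: Iterable[float], predicate: Callable[[float], bool],
             epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy using the Laplace mechanism."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    scale = 1.0 / epsilon  # adding or removing one patient changes a count by at most 1
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise

ages = [34, 71, 68, 45, 80, 52]  # illustrative cohort
rng = random.Random(42)
print(dp_count(ages, lambda a: a >= 65, epsilon=1.0, rng=rng))  # 3 plus Laplace noise
```

Smaller epsilon means stronger privacy and noisier answers; the trade-off is usually set per use case with privacy and clinical stakeholders.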

6. Model design: explainability, uncertainty, and safety

Clinical settings demand explainable and calibrated models with clear failure modes.

6.1 Explainability and transparency

Simple models (rule-based, logistic regression) are often more interpretable and may be preferable when performance is comparable. For deep models, use explainability methods (SHAP, saliency maps) and present them in clinician-friendly ways.
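For a logistic regression, a per-patient explanation can be computed exactly: each feature contributes w_i * (x_i - baseline_i) to the log-odds. With the training mean as the baseline, this matches the SHAP values of a linear model when features are treated as independent. The coefficients, cohort means and feature names below are invented for illustration and are not clinically derived.

```python
import math
from typing import Dict, List, Tuple

def explain_logistic(coefs: Dict[str, float], intercept: float,
                     baseline: Dict[str, float], patient: Dict[str, float]
                     ) -> Tuple[float, List[Tuple[str, float]]]:
    """Predicted probability plus per-feature log-odds contributions, largest first."""
    logit = intercept + sum(w * patient[f] for f, w in coefs.items())
    prob = 1.0 / (1.0 + math.exp(-logit))
    # logit == baseline logit + sum(contributions), so the pieces add up exactly.
    contributions = {f: w * (patient[f] - baseline[f]) for f, w in coefs.items()}
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return prob, ranked

# Illustrative coefficients and cohort means only; not clinically derived.
COEFS = {"lactate_mmol_l": 0.8, "heart_rate_bpm": 0.03, "age_years": 0.02}
MEANS = {"lactate_mmol_l": 1.5, "heart_rate_bpm": 80.0, "age_years": 60.0}
prob, ranked = explain_logistic(COEFS, -6.0, MEANS,
                                {"lactate_mmol_l": 4.0, "heart_rate_bpm": 115.0, "age_years": 72.0})
print(f"risk {prob:.2f}")
for feature, contrib in ranked:
    print(f"  {feature}: {contrib:+.2f} log-odds")
```

A clinician-facing view might show only the top two or three contributions in plain language (for example, "elevated lactate raised the risk most").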

6.2 Uncertainty quantification

Provide calibrated probability estimates and confidence intervals, and define fallback rules for when uncertainty is high. Use abstention policies (flag for human review) for uncertain predictions and out-of-distribution inputs.
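A minimal abstention policy might combine an uncertainty band around the decision threshold with a crude out-of-distribution check. The sketch below assumes a per-feature z-score test against training statistics; the class name, thresholds and return labels are hypothetical, and real systems often use stronger out-of-distribution detectors and thresholds chosen during validation.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

@dataclass(frozen=True)
class AbstentionPolicy:
    """Hypothetical thresholds; real values come from validation data and clinical review."""
    low: float = 0.2        # at or below: report low risk
    high: float = 0.8       # at or above: report high risk
    max_abs_z: float = 4.0  # inputs this many SDs from the training mean count as out-of-distribution

    def decide(self, prob: float, features: Dict[str, float],
               train_stats: Dict[str, Tuple[float, float]]) -> str:
        for name, value in features.items():
            mean, std = train_stats[name]
            if std > 0 and abs(value - mean) / std > self.max_abs_z:
                return "human_review: out-of-distribution input"
        if prob >= self.high:
            return "high_risk"
        if prob <= self.low:
            return "low_risk"
        return "human_review: uncertain prediction"

policy = AbstentionPolicy()
stats = {"heart_rate_bpm": (80.0, 12.0)}  # (mean, standard deviation) from training data
print(policy.decide(0.91, {"heart_rate_bpm": 95.0}, stats))   # high_risk
print(policy.decide(0.55, {"heart_rate_bpm": 95.0}, stats))   # human_review: uncertain prediction
print(policy.decide(0.91, {"heart_rate_bpm": 210.0}, stats))  # human_review: out-of-distribution input
```

The out-of-distribution check runs first on purpose: a confident probability computed from an input unlike the training data is not trustworthy.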

6.3 Safety constraints & failure modes

Design guardrails: whitelist/blacklist checks, sanity checks on inputs, rate limiters, and automated rollbacks if abnormal patterns appear. Map potential hazards using techniques like FMEA (Failure Mode and Effects Analysis).
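Input sanity checks can be as simple as rejecting missing or physiologically implausible values before inference. In this sketch the field names and plausibility ranges are assumptions for illustration; the example shows how a range check catches a temperature recorded in Fahrenheit instead of Celsius.

```python
import math
from typing import Dict, List, Optional, Tuple

# Hypothetical plausibility limits; real limits need clinical review and agreed units.
PLAUSIBLE_RANGES: Dict[str, Tuple[float, float]] = {
    "heart_rate_bpm": (20.0, 300.0),
    "temperature_c": (25.0, 45.0),
    "spo2_percent": (50.0, 100.0),
}

def sanity_check(record: Dict[str, Optional[float]]) -> List[str]:
    """List input problems; the model should only score records with no problems."""
    problems = []
    for field, (lo, hi) in PLAUSIBLE_RANGES.items():
        value = record.get(field)
        if value is None or math.isnan(value):
            problems.append(f"{field}: missing")
        elif not lo <= value <= hi:
            problems.append(f"{field}: {value} outside [{lo}, {hi}]")
    return problems

# A temperature entered in Fahrenheit is caught before it reaches the model.
print(sanity_check({"heart_rate_bpm": 88, "temperature_c": 98.6, "spo2_percent": 97}))
# ['temperature_c: 98.6 outside [25.0, 45.0]']
```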

6.4 Human-in-the-loop workflows

AI should assist clinicians, not replace key judgments. Present actionable insights, allow clinicians to override recommendations, and record overrides for continuous improvement and auditing.

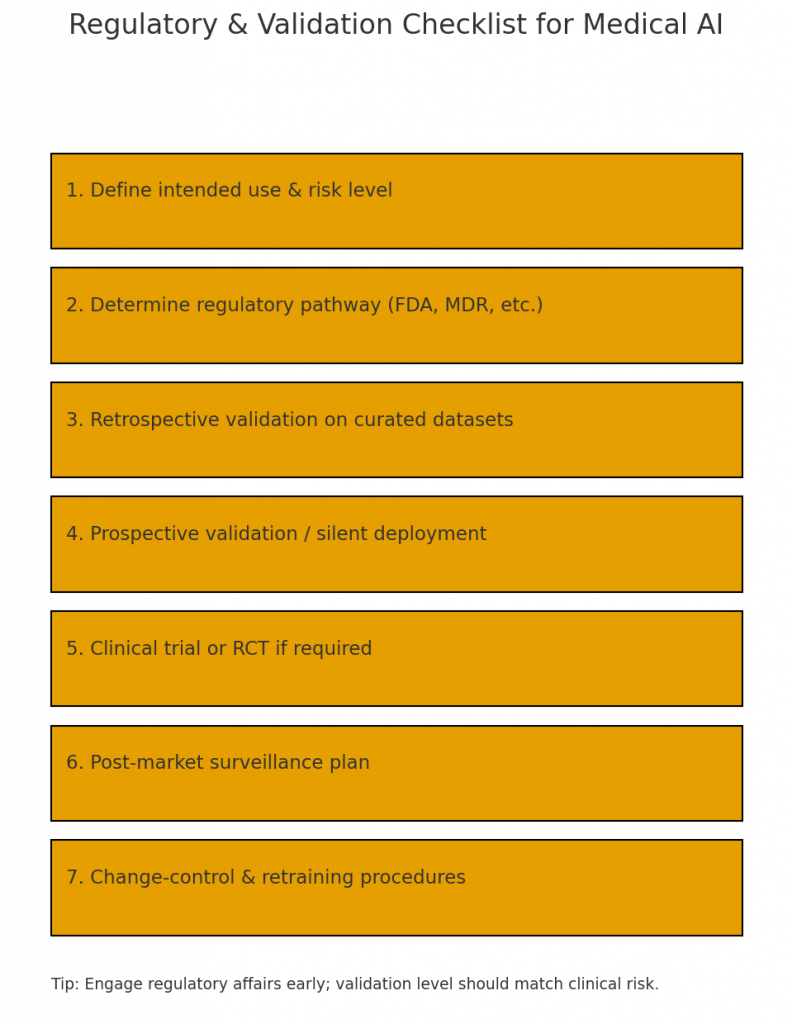

7. Clinical validation, trials, and regulatory pathways

AI in medical software often constitutes a medical device when it influences clinical decision-making. Regulatory and validation steps are critical.

7.1 Levels of clinical evidence

Evidence ranges from retrospective validation on held-out datasets to prospective clinical studies and randomized controlled trials (RCTs). Choose the level based on risk: higher-risk applications need stronger evidence.

7.2 Regulatory frameworks

Regulatory classification depends on jurisdiction and intended use. In the U.S., the FDA regulates SaMD (Software as a Medical Device) and has specific guidance for AI/ML-based devices. The EU MDR and UK regulations have their own paths. Engage regulatory affairs early to determine the required submissions (for example, a 510(k) or De Novo request in the U.S., or CE marking under the EU MDR).

7.3 Reproducible evaluation

Define primary endpoints, validation cohorts, performance metrics (sensitivity, specificity, AUC), and calibration checks. Prefer multi-center validation to test generalizability.
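The core metrics named above can be computed directly from labels and predicted probabilities. This stdlib-only sketch reports sensitivity and specificity at an assumed 0.5 threshold, AUC via its pairwise-ranking definition, the Brier score, and calibration-in-the-large (mean predicted risk versus observed event rate). The toy data are invented; real cohorts would call for a well-tested library and confidence intervals (for example, bootstrapped).

```python
from typing import Dict, Sequence

def evaluate(y_true: Sequence[int], y_prob: Sequence[float],
             threshold: float = 0.5) -> Dict[str, float]:
    """Discrimination and calibration summary for a binary classifier."""
    if len(y_true) != len(y_prob):
        raise ValueError("labels and probabilities must have the same length")
    pos = [p for y, p in zip(y_true, y_prob) if y == 1]
    neg = [p for y, p in zip(y_true, y_prob) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    tp = sum(p >= threshold for p in pos)
    tn = sum(p < threshold for p in neg)
    # AUC = P(random positive scores above random negative); ties count half. O(n^2) for clarity.
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    n = len(y_true)
    return {
        "sensitivity": tp / len(pos),
        "specificity": tn / len(neg),
        "auc": wins / (len(pos) * len(neg)),
        "brier": sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / n,
        # Calibration-in-the-large: mean predicted risk should track the observed event rate.
        "mean_predicted": sum(y_prob) / n,
        "observed_rate": len(pos) / n,
    }

labels = [1, 1, 1, 0, 0, 0, 0, 0]
probs = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2, 0.1, 0.05]
for name, value in evaluate(labels, probs).items():
    print(f"{name}: {value:.3f}")
```

Reporting the same dictionary per validation site makes multi-center comparisons straightforward.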

7.4 Real-world performance monitoring

Post-market surveillance and model updates need a controlled process. The FDA recommends a monitoring plan for model drift, retraining triggers, and documentation of changes.

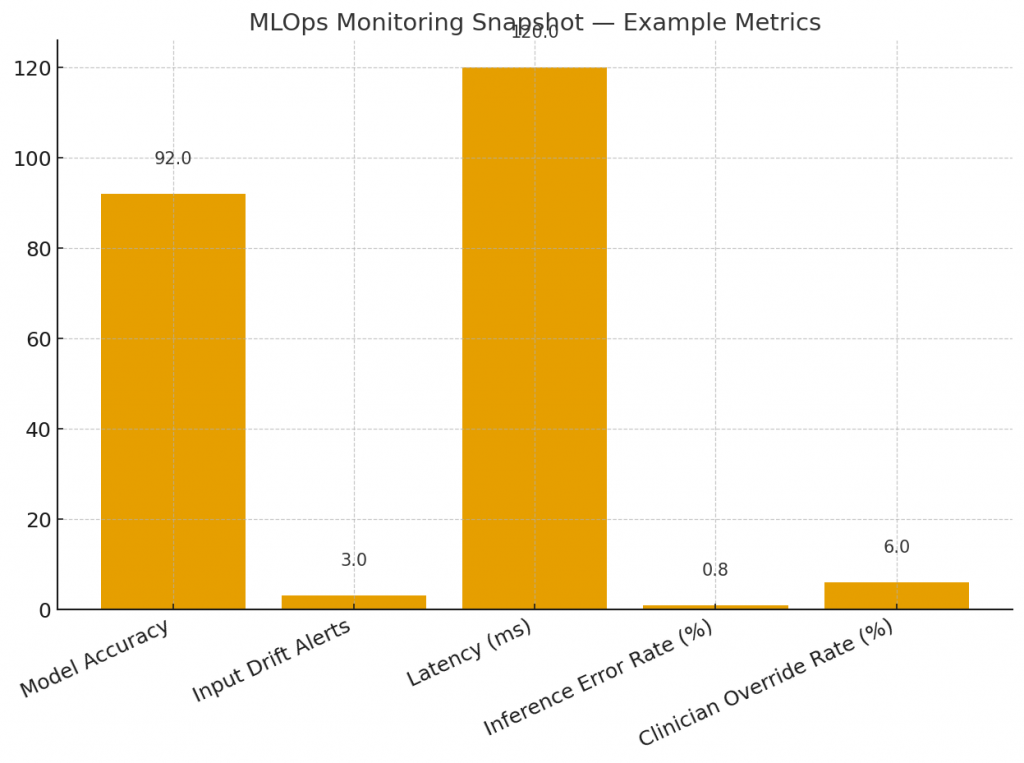

8. Deployment: MLOps, monitoring, and lifecycle management

Building models is just the start — production-grade medical AI requires robust operational infrastructure.

8.1 MLOps for medical AI

Implement reproducible pipelines for training, testing and deployment. Version data, code, and model artifacts. Use CI/CD to run model tests (unit, integration, performance).
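Versioning data and model artifacts can start with content hashes recorded alongside the code version, so any result can be traced to the exact files that produced it. The manifest format, file names and the placeholder commit `abc1234` below are assumptions; dedicated data-versioning tools and model registries typically provide this together with storage and lineage.

```python
import hashlib
import json
import tempfile
from pathlib import Path
from typing import Dict, Iterable

def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Content hash of a file, read in chunks so large files are never loaded at once."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def build_manifest(paths: Iterable[Path], code_version: str) -> Dict[str, object]:
    """Hypothetical manifest tying one model build to exact data and artifact contents."""
    return {
        "code_version": code_version,  # e.g. the git commit SHA that CI built from
        "artifacts": {p.name: sha256_file(p) for p in sorted(paths)},  # assumes unique file names
    }

with tempfile.TemporaryDirectory() as tmp:
    train = Path(tmp) / "train.csv"
    train.write_bytes(b"encounter_id,label\n1,0\n2,1\n")
    weights = Path(tmp) / "model.bin"
    weights.write_bytes(b"\x00\x01weights")
    manifest = build_manifest([train, weights], code_version="abc1234")  # placeholder commit

print(json.dumps(manifest, indent=2, sort_keys=True))
```

Storing this manifest with each release gives auditors a simple way to confirm that the deployed model matches the validated one.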

8.2 Inference architecture and latency

Choose on-device vs cloud inference based on latency, connectivity, and privacy. For imaging, GPU-backed inference may be necessary; for triage chatbots, autoscaling APIs suffice.

8.3 Monitoring & drift detection

Track input distribution shifts, performance metrics, and clinician interaction outcomes. Trigger automated alerts when drift exceeds predefined thresholds, and maintain safe rollback mechanisms.
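One common way to quantify input distribution shift is the Population Stability Index, PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the binned proportions of a baseline window and a current window. The sketch assumes equal-width bins taken from the baseline range; quantile bins are also common. A frequently quoted rule of thumb treats PSI above about 0.25 as a significant shift, but alert thresholds should be set per feature during validation.

```python
import math
from typing import List, Sequence

def psi(baseline: Sequence[float], current: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `current` against `baseline`, equal-width bins."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        return [max(c / len(values), eps) for c in counts]  # eps avoids log(0)

    e, a = proportions(baseline), proportions(current)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Illustrative heart-rate samples: training period vs. a shifted production week.
train_hr = [60 + (i % 40) for i in range(400)]
prod_hr = [v + 15 for v in train_hr]
print(f"PSI unchanged: {psi(train_hr, train_hr):.3f}")
print(f"PSI shifted:   {psi(train_hr, prod_hr):.3f}")
```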

8.4 Audit trails and explainability in logs

Log inputs, outputs, explanations and clinician actions in an auditable manner, with access controls to protect PHI.
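A sketch of one audit-log entry, assuming the patient identifier is pseudonymized with a keyed HMAC-SHA256 and the full inputs are kept in a separate access-controlled store (only their hash is logged). The record layout, the model name `sepsis-risk 1.4.0` and the inline demo key are hypothetical; key management and retention rules would follow the organization's security policy.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone
from typing import Any, Dict

def pseudonymize(patient_id: str, key: bytes) -> str:
    """Keyed hash: stable for linking records, but without the key nobody can
    re-identify patients by hashing candidate IDs."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()

def audit_record(patient_id: str, model_version: str, inputs: Dict[str, Any],
                 output: Dict[str, Any], explanation: Dict[str, float],
                 clinician_action: str, key: bytes) -> str:
    """One append-only JSON line; full inputs live in a separate access-controlled store."""
    canonical_inputs = json.dumps(inputs, sort_keys=True).encode("utf-8")
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "patient": pseudonymize(patient_id, key),
        "model_version": model_version,
        "inputs_sha256": hashlib.sha256(canonical_inputs).hexdigest(),
        "output": output,
        "explanation": explanation,
        "clinician_action": clinician_action,  # e.g. "accepted" or "overridden"
    }
    return json.dumps(record, sort_keys=True)

KEY = b"demo-only; load the real key from a secrets manager"
line = audit_record("MRN-0012345", "sepsis-risk 1.4.0",
                    {"lactate_mmol_l": 4.0, "heart_rate_bpm": 115},
                    {"risk": 0.89, "alert": True},
                    {"lactate_mmol_l": 2.0, "heart_rate_bpm": 1.05},
                    "overridden", KEY)
print(line)
```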

8.5 Update governance & change control

Define a model change protocol: retraining pipelines, validation gates, sign-offs, and release notes. Maintain a registry of model versions and their performance.
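A validation gate can be encoded so that promotion is blocked unless a candidate meets absolute performance floors, does not regress against the current release, and carries the required sign-offs. The thresholds, role names and `ModelVersion` structure below are hypothetical placeholders for what a real change-control procedure would specify.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class ModelVersion:
    version: str
    metrics: Dict[str, float]
    approved_by: List[str] = field(default_factory=list)

# Hypothetical release criteria; real values come from the validation plan.
GATES = {"sensitivity": 0.90, "specificity": 0.80, "auc": 0.85}
REQUIRED_SIGNOFFS = {"clinical_lead", "quality_regulatory"}

def promotion_blockers(candidate: ModelVersion, current: ModelVersion,
                       max_drop: float = 0.01) -> List[str]:
    """Reasons the candidate may not replace the current release; empty means the gate passes."""
    reasons = []
    for metric, floor in GATES.items():
        value = candidate.metrics.get(metric, float("-inf"))
        if value < floor:
            reasons.append(f"{metric} {value:.3f} below floor {floor:.2f}")
        if value < current.metrics.get(metric, float("-inf")) - max_drop:
            reasons.append(f"{metric} regressed versus {current.version}")
    missing = REQUIRED_SIGNOFFS - set(candidate.approved_by)
    if missing:
        reasons.append("missing sign-off: " + ", ".join(sorted(missing)))
    return reasons

current = ModelVersion("1.3.0", {"sensitivity": 0.92, "specificity": 0.85, "auc": 0.90})
candidate = ModelVersion("1.4.0", {"sensitivity": 0.89, "specificity": 0.86, "auc": 0.91},
                         approved_by=["clinical_lead"])
for reason in promotion_blockers(candidate, current):
    print(reason)
```

Returning the full list of blockers, rather than a bare pass/fail, gives reviewers the rationale they need for the release notes and the model registry.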

9. Ethical, legal and privacy considerations

AI in medicine raises questions beyond technical performance.

9.1 Consent and transparency

Inform patients when AI supports decisions about their care. For clinical tools, provide clinician-facing explanations and, where needed, patient-facing disclosures.

9.2 Equity and fairness

Measure model performance across demographic subgroups and adjust data collection or model weighting to reduce disparities. Involve community stakeholders in design and validation.
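Subgroup analysis can start with the same metric computed per group, reported together with the number of positive cases, since small subgroups give noisy estimates. This sketch uses sensitivity and made-up groups `A` and `B`; which subgroups and metrics matter, and what gap is acceptable, are clinical and ethical decisions rather than coding ones.

```python
from collections import defaultdict
from typing import Dict, Sequence, Tuple

def sensitivity_by_group(y_true: Sequence[int], y_pred: Sequence[int],
                         groups: Sequence[str]) -> Dict[str, Tuple[float, int]]:
    """Sensitivity (true-positive rate) and number of positive cases per subgroup.

    Groups with no positive cases are omitted, since sensitivity is undefined for them.
    """
    true_pos: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for y, y_hat, g in zip(y_true, y_pred, groups):
        if y == 1:
            positives[g] += 1
            true_pos[g] += int(y_hat == 1)
    return {g: (true_pos[g] / n, n) for g, n in positives.items()}

y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 1]
groups = ["A"] * 5 + ["B"] * 5
by_group = sensitivity_by_group(y_true, y_pred, groups)
gap = max(s for s, _ in by_group.values()) - min(s for s, _ in by_group.values())
print(by_group, f"gap={gap:.2f}")
```

With only four positives per group, a 0.25 gap could easily be noise; in practice the counts would be reported next to each estimate and confidence intervals added before drawing conclusions.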

9.3 Liability and accountability

Clarify responsibility between vendor, provider organization and clinicians. Build systems that allow clinicians to exercise judgment, and document decision rationales for auditability.

9.4 Data governance and access control

Limit who can query models, access logs, or change parameters. Apply least-privilege principles and encryption at rest and in transit.

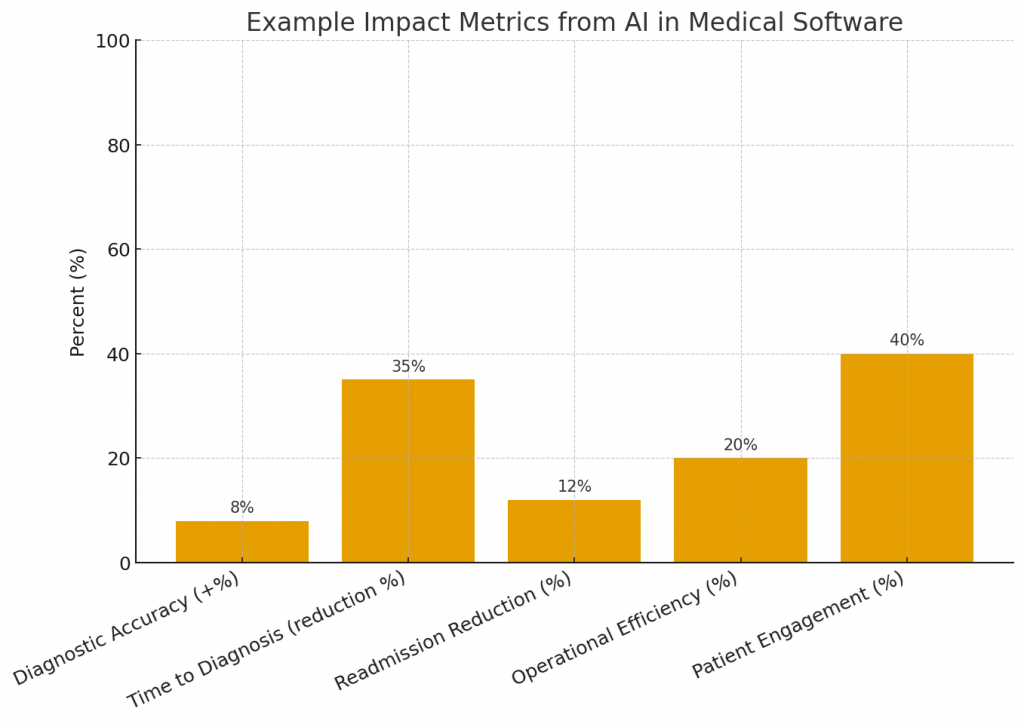

10. Measuring impact: KPIs and ROI

Define business and clinical KPIs prior to deployment. Examples:

Clinical KPIs

- Diagnostic sensitivity/specificity improvements.
- Time to diagnosis or intervention.
- Reduction in adverse events or readmissions.

Operational KPIs

- Reduction in clinician documentation time.
- Reduction in appointment no-shows / better scheduling utilization.
- Faster throughput in imaging/triage pipelines.

Financial ROI

- Cost saved per avoided adverse event.
- Revenue preserved through improved coding and reduced denials.
- Efficiency gains per clinician FTE.

Create dashboards for each KPI and measure both short-term operational gains and long-term clinical outcomes.
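A simple annual ROI calculation ties these KPIs together: ROI = (total annual benefit - annual cost) / annual cost. Every input below is a hypothetical figure; the sketch ignores discounting and assumes the avoided events and saved hours were measured against a credible baseline (for example, a pre-deployment period or a control group).

```python
from dataclasses import dataclass

@dataclass
class RoiInputs:
    avoided_adverse_events: float          # per year, vs. a measured baseline
    cost_per_adverse_event: float          # currency units per event
    revenue_preserved: float               # per year, e.g. from fewer coding denials
    clinician_hours_saved: float           # per year, across all clinicians
    loaded_cost_per_clinician_hour: float  # salary plus overhead
    annual_total_cost: float               # licences, infrastructure, monitoring, staff

def simple_roi(x: RoiInputs) -> float:
    """(benefit - cost) / cost for one year; no discounting, no attribution modelling."""
    if x.annual_total_cost <= 0:
        raise ValueError("annual_total_cost must be positive")
    benefit = (x.avoided_adverse_events * x.cost_per_adverse_event
               + x.revenue_preserved
               + x.clinician_hours_saved * x.loaded_cost_per_clinician_hour)
    return (benefit - x.annual_total_cost) / x.annual_total_cost

# Hypothetical figures for illustration only.
example = RoiInputs(avoided_adverse_events=12, cost_per_adverse_event=15_000,
                    revenue_preserved=50_000, clinician_hours_saved=2_000,
                    loaded_cost_per_clinician_hour=100, annual_total_cost=250_000)
print(f"ROI: {simple_roi(example):.0%}")  # ROI: 72%
```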

11. Implementation roadmap — from pilot to production

A practical phased roadmap reduces risk and delivers early value.

Phase 0: Strategy & use-case prioritization

Run workshops with clinical and operational stakeholders to select high-impact, low-risk initial use cases.

Phase 1: Data readiness & prototype (4–12 weeks)

Assemble datasets, label small cohorts, and build a prototype model. Validate retrospectively and iterate.

Phase 2: Silent-mode deployment & prospective validation (3–6 months)

Run the model in parallel with clinicians (no impact on care) to gather prospective performance and measure clinician alignment.

Phase 3: Pilot with constrained scope (6–12 months)

Deploy to a subset of clinics or users, integrate workflow changes, and gather RCT-level evidence if required.

Phase 4: Scale & integrate (ongoing)

Expand across departments, standardize MLOps, monitor real-world performance, and maintain retraining cadence.

Throughout, maintain strong clinical governance, risk registers, and regulatory documentation.

12. Case studies and practical examples

Below are concise, anonymized examples of AI applied effectively in medical software.

Case A: Imaging triage in a regional hospital network

Problem: Long radiology backlog causing delayed care.

Solution: An AI triage model flagged high-risk chest X-rays for priority review. Implementation included silent-mode validation and clinician feedback loops.

Outcome: Priority cases were reviewed 40% faster, and radiologist workflow efficiency improved without loss of diagnostic accuracy.

Case B: Readmission risk model for a large health system

Problem: High 30-day readmission rates and penalties.

Solution: A time-series model using vitals, labs and social determinants predicted patients at high risk for readmission, enabling targeted post-discharge care.

Outcome: Readmissions dropped by 10% among flagged patients after a care management intervention.

Case C: NLP for clinical documentation and coding

Problem: Clinician burnout from manual coding.

Solution: An NLP pipeline extracted structured diagnosis and procedure codes from notes, with a human review step for edge cases.

Outcome: Billing cycle time reduced by 30% and coding accuracy improved.

Each case emphasized clinical partnership, iterative validation and a conservative deployment profile.

13. Common pitfalls and how to avoid them

AI projects in healthcare fail for predictable reasons — and these can be mitigated.

Pitfall 1: Poor data quality

Fix: Build data profiling and cleaning early; invest in ETL and mapping of clinical concepts.

Pitfall 2: Ignoring clinician workflows

Fix: Co-design with clinicians. Embed outputs where they act (order entry, image viewer), not in separate dashboards.

Pitfall 3: Overfitting to local practice

Fix: Multi-site validation and external test cohorts before scaling.

Pitfall 4: Lack of governance for model updates

Fix: Define retrain triggers, testing suites, and approval gates.

Pitfall 5: Neglecting monitoring and maintenance

Fix: Implement drift detection and continuous performance tracking.

Avoiding these pitfalls requires organizational commitment, not merely engineering.

14. Future trends and where to watch next

AI in medical software will evolve along several axes:

- Foundation models in healthcare: Large multimodal models that combine text, images, genomics and time-series signals may enable broader clinical assistants, but will require guardrails for hallucination and safety.
- Federated learning and privacy-preserving methods: As institutions seek cross-site models without sharing raw data, federated approaches will mature.
- Regulatory clarity for adaptive models: Regulators will define pathways for models that learn post-deployment, including change control and continuous validation frameworks.
- Digital twins and simulation: Patient digital twins could be used for personalized treatment simulations and surgical planning.
- Edge inference and wearables integration: Continuous monitoring with on-device inference will enable earlier detection with fewer privacy trade-offs, because raw sensor data need not leave the device.

Product teams should watch these trends and plan modular architectures that allow new model classes to be plugged in safely.

15. Conclusion — building AI medical software that clinicians and patients trust

AI can profoundly improve medical care and operations, but success depends on more than model accuracy. High-impact medical AI requires careful collection and labeling of clinical data, explainable models with uncertainty measures, rigorous clinical validation, robust MLOps, and explicit ethical and regulatory governance. Start with well-scoped pilots, co-design with clinicians, invest in monitoring and governance, and scale only after multi-site validation.

When done right, AI becomes an amplifying tool — helping clinicians see signals they might otherwise miss, freeing time for patient care, and delivering operational efficiencies that let health systems serve more people better.