- Services

- Artificial Intelligence Development

- Deep Learning & Neural Network Development Services

- Professional Machine Learning Development Services

- Enterprise Computer Vision Development Services

- Enterprise Natural Language Processing Development Services

- Chatbot & Conversational AI Development Services for Business

- Enterprise Computer Vision Solutions For Healthcare

- Transformative Healthcare AI Development

- Retail & E-commerce AI Solutions for Personalization & Growth

- AI Integration & MLOps Development Services

- AI Agent Development & Intelligent Automation

- Generative AI Solutions

- Outsourced Product Development

- Custom Software Development

- Software Customization & Integration

- Mobile App Development

- Custom Application Development

- Software Architecture Consulting

- Enterprise Application Development

- AI-Powered Documentation Services

- Product Requirements Document Services

- Artificial Intelligence Development

- Industries

- Healthcare Software Development

- Telemedicine Software Development

- Medical Software Development

- Electronic Medical Records

- EHR Software Development

- Remote Patient Monitoring Software Development

- Healthcare Mobile App Development Services

- Medical Device Software Development

- Healthcare Mobile App Development Services

- Patient Portal Development Services

- Practice Management Software Development

- Healthcare AI/ML Solutions

- Healthcare CRM Development

- Healthcare Data Analytics Solutions Development

- Hospital Management System Development | Custom HMS & Healthcare ERP

- Mental Health Software Development Services

- Medical Billing & RCM Software Development | Custom Healthcare Billing Solutions

- Laboratory Information Management System (LIMS) Development

- Clinical Trial Management Software Development

- Pharmacy Management Software Development

- Finance & Banking Software Development

- Retail & Ecommerce

- Fintech & Trading Software Development

- Online Dating

- eLearning & LMS

- Cloud Consulting Services

- Healthcare Software Development

- Technology

- Products

- About

- Contact Us

How Software Companies Can Use CodeMender

CodeMender-style systems combine advanced code-aware language models with traditional program analysis to find, generate, and validate fixes for software defects and vulnerabilities. For software companies, these systems offer the potential to dramatically reduce the time between identification and remediation, improve engineering productivity, and harden products at scale — but only when integrated with careful governance, robust validation, and well-designed pipelines. This article explains, step by step, how software companies can adopt and operationalize CodeMender — from selection and pilot to production rollouts, governance, metrics, and long-term maintenance.

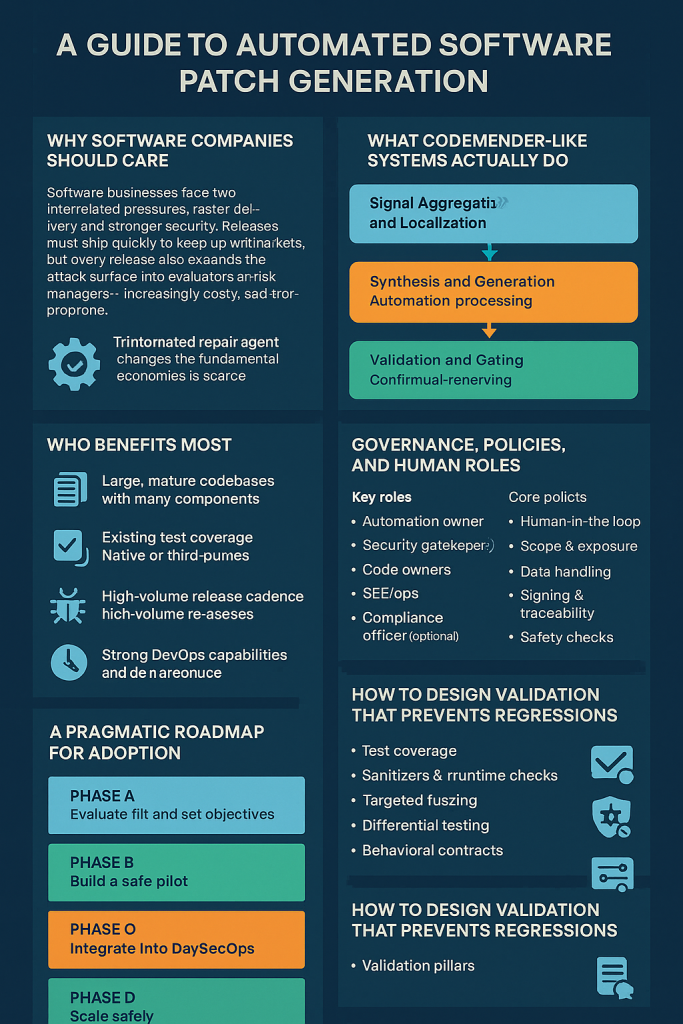

Why software companies should care

Software businesses face two interrelated pressures: faster delivery and stronger security. Releases must ship quickly to keep up with markets, but every release also expands the attack surface. Traditional manual workflows for triage and patching are increasingly costly, slow, and error prone. An automated repair agent changes the fundamental economics: instead of human teams doing repetitive triage work, the agent synthesizes candidate fixes and returns well-documented, validated patches for human review. That transforms the role of engineering and security teams from code fixers into evaluators and risk managers — a more strategic and higher-leverage role.

But the promise comes with responsibilities. Automated patching can introduce regressions, confuse ownership, or be misused if not properly governed. The rest of this article is a practical blueprint for realizing benefits while managing risks.

What CodeMender-like systems actually do (in operational terms)

Think of CodeMender as a pipeline composed of three coordinated layers:

-

Signal aggregation and localization: The system ingests static analysis, dynamic tests, fuzzing output, crash reports, telemetry, and dependency scanners. Its goal is to reduce noise and identify a tight “patch surface” — the minimal set of lines or functions most likely responsible for the defect.

-

Synthesis and generation: A code-aware AI generates candidate edits. Crucially, it operates with structural awareness (AST edits, not plain text diffs), proposes tests when useful, and annotates rationale and expected behavior.

-

Validation and gating: Candidate patches are validated automatically in a sandbox: run full test suites, extended fuzzing against repro cases, sanitizer checks, and differential behavior comparisons. Only patches that clear these gates are presented to humans as ready-to-review PRs.

Operational success depends less on the model itself and more on the richness of the signals ingested and the rigor of validation gates.

Who benefits most — product and engineering profiles

Not every team will see the same gain. The most immediate wins are for organizations that share these characteristics:

-

Large, mature codebases with many components and repeated patterns or copy-paste bugs.

-

Existing test coverage and at least some unit/integration tests on core modules.

-

Native or third-party libraries where memory or parsing bugs are likely but human expertise is scarce.

-

High-volume release cadence where triage and fix cycles are a bottleneck for security and reliability.

-

Strong DevOps capabilities (CI/CD, monitoring, automated tests) — a prerequisite for safe automation.

Smaller startups can benefit too, but the return on investment is higher when there’s a steadfast test and validation culture to catch regressions.

A pragmatic roadmap for adoption

Below is an actionable roadmap covering evaluation, pilot, integration, governance, and scaling.

Phase A — Evaluate fit and set objectives

-

Define clear goals. Examples: reduce median time to patch by 50%, reduce repeat vulnerability types by 70%, or allow security engineers to review 3× candidate patches per week instead of writing them manually.

-

Select target modules. Choose noncritical services with solid tests and known classes of frequent bugs (parsers, image handling, auth middleware).

-

Assess infra readiness. Confirm CI capacity for heavy validation, sandboxing capabilities, artifact storage, and model hosting options (on-prem vs. cloud).

Phase B — Build a safe pilot

-

Narrow the scope. One team, one service, or one library.

-

Instrument the repo. Add fuzz harnesses and targeted unit tests for the most fragile code paths. Ensure sanitizers are runnable for native code.

-

Run a detection cycle. Produce a corpus of real findings (static and dynamic).

-

Synthesize candidate patches via the chosen system (or an LLM + toolchain emulation).

-

Validate rigorously. Use a repeatable, gated CI flow: compile → tests → sanitizers → fuzz → differential checks. Only validated candidates get surfaced.

-

Human review and controlled merge. Require explicit approvals from assigned reviewers and maintainers. Merge into a canary or staging environment first.

-

Measure and iterate. Track MTTP, validated patch ratio, regression rates, and reviewer time savings. Decide to expand if metrics are positive.

Phase C — Integrate into DevSecOps

-

Embed agent runs into CI for pull requests and scheduled scans. Use the agent to synthesize fixes for prioritized findings, not for every low-severity alert.

-

Automate evidence collection so each proposed PR contains crash repros, fuzz logs, sanitizer outputs, and a summary of code changes.

-

Create merge policies. Automation never merges; it opens PRs. Require at least one security review and one code owner approval.

-

Train teams on reviewing AI-assisted patches, reading validation artifacts, and writing tests that capture business invariants.

-

Maintain rollback plans and fast rollback scripts for recently merged automated fixes.

Phase D — Scale safely

-

Expand scope gradually to more services and libraries while preserving pilot success criteria.

-

Invest in model governance (if hosting models internally) and data provenance.

-

Improve observability for post-merge behavior: error rates, latency, user metrics, and security telemetry.

-

Automate metrics dashboards to monitor patch outcomes and surface any suspicious patterns in proposed patches.

Implementation details: technical architecture and CI pipeline

A robust implementation blends model reasoning, tooling, and infrastructure. Below is a template architecture and an example CI pipeline.

Architectural components

-

Ingestion & prioritization service: collects SAST results, fuzz crashes, DAST findings, and runtime errors; ranks issues by exploitability and exposure.

-

Patch generation engine: code-aware model + AST transformer. Produces candidate diffs and optional tests.

-

Validation cluster: isolated runners for unit/integration tests, sanitizers, and long fuzz campaigns.

-

Artifact store: secure storage for crash dumps, logs, and test results for auditability.

-

PR automation: a bot that opens PRs with attached evidence and metadata.

-

Governance layer: RBAC, traceability, and policy enforcement preventing direct merges by automation.

-

Monitoring & rollback: observability into post-merge behavior and scripts for fast rollback.

Example CI flow (job sequence)

-

Detect job: collects SAST/DAST/fuzzer outputs and creates a prioritized queue.

-

Synthesize job: invokes CodeMender to generate a candidate patch in an isolated environment and produces a PR branch.

-

Pre-validate job: fast checks — compile, lint, unit tests.

-

Validate job: extended fuzz targeted at repro cases, sanitizers, and integration tests.

-

LLM-judge job (optional): independent model or ruleset inspects the diff for suspicious patterns (use of unsafe functions, new external calls).

-

PR generation: if all gates pass, the bot opens a PR with evidence, labels, and reviewers.

-

Human review: assigned reviewers examine tests, logs, and the patch; they accept or reject.

-

Canary deploy & monitor: post-merge to canary environment, monitor, then full rollout.

Emphasize isolation of jobs that run generated code. Use ephemeral VMs or containers with network egress restricted and no production secrets.

Governance, policies, and human roles

Automation shifts responsibilities but doesn’t eliminate accountability. Define formal roles and policies.

Key roles

-

Automation owner: maintains pipeline, monitors model health, and handles infra.

-

Security gatekeeper(s): security engineers responsible for triaging automated PRs and approving fixes.

-

Code owners: maintainers who validate design and compatibility.

-

SRE/ops: responsible for rollout, monitoring, and rollback.

-

Compliance officer (optional): ensures audit requirements are fulfilled for regulated environments.

Core policies

-

Human-in-the-loop: Automation can open PRs but cannot merge to protected branches.

-

Scope & exposure: Define which repositories, packages, and services are in or out.

-

Data handling: If code is processed off-prem, require contractual protections; prefer on-prem models for sensitive IP.

-

Signing & traceability: Sign validated artifacts and keep immutable logs of decisions.

-

Safety checks: Enforce an independent validator (rules or model) that scrutinizes patches for risky constructs.

Document these policies and make them part of the onboarding for every relevant team.

How to design validation that prevents regressions

Validation is the single most important control. The better your validation, the safer the automation.

Validation pillars

-

Test coverage: Good unit and integration tests are the bedrock. If the module lacks coverage, invest first.

-

Sanitizers & runtime checks: For native modules, ASAN/UBSAN provide strong safety nets. For managed languages, assertions and strict type checks help.

-

Targeted fuzzing: Use fuzz harnesses that reproduce real crash cases. Run fuzzing both pre- and post-patch for an extended window.

-

Differential testing: Compare outputs between baseline and patched branches for a set of representative inputs to detect unintended divergence.

-

Behavioral contracts: For critical business logic, author contract tests that check invariants (e.g., “payment amount must equal sum of line items”) before accepting a patch.

-

Independent verification: An independent model or static ruleset should flag suspicious patterns (e.g., removal of authentication checks, new unsafe API usage).

Automate as many validation steps as possible and require human signoff for overrides.

Building trust: metrics and KPIs to monitor

Track meaningful metrics to evaluate effectiveness, safety, and ROI.

Suggested KPIs

-

Mean Time To Patch (MTTP): time between detection and a merged fix. Aim to reduce.

-

Validated patch ratio: percentage of generated patches that pass validation and are accepted after review.

-

False positive rate: how often automation proposes changes that are irrelevant or harmful.

-

Regression rate post-merge: incidents that require rollback or hotfix after automated PR merges.

-

Reviewer throughput: number of candidate patches processed per reviewer per week.

-

Coverage improvement: test coverage added by generated tests or by follow-up developer work.

Use dashboards, set thresholds, and define escalation rules for anomalous trends.

Ensuring model and data governance

If your team hosts models or uses third-party models, enforce governance.

Data governance

-

Provenance: track source of training and fine-tuning datasets.

-

Access control: restrict who can feed private code into models.

-

Retention: define retention periods for artifacts (crash dumps, repro cases) and ensure secure deletion policies.

Model governance

-

Versioning: Pin model and pipeline versions in CI to ensure reproducibility.

-

Audit logs: Record all model invocations, prompts, and outputs for future forensics.

-

Testing models: Regularly run a model-sanity suite to detect drift or degradation.

-

Adversarial checks: Periodically test the pipeline with adversarial inputs to check for susceptibility to manipulation.

Governance ensures traceability and reduces risk from model drift or poisoning.

Typical integration patterns and templates

There are two common integration patterns: push model and on-demand model.

Push model

The automation runs continuously or on a schedule, scanning repos and generating candidate PRs for prioritized issues. Useful for high-volume environments but requires strong prioritization to avoid overwhelming reviewers.

On-demand model

Developers or security engineers trigger the agent for specific findings or when a TODO or ticket is created. This model reduces noise and aligns automation with human priorities.

Hybrid approach — use push for high-value modules and on-demand for broader codebases.

Template PR content

Each automated PR should include:

-

Short summary of the root cause.

-

The proposed code changes (diff).

-

Validation artifacts (test results, fuzz logs, sanitizer outputs).

-

Minimal repro case(s).

-

Suggested rollback instructions.

-

Suggested reviewers and relevant labels (e.g.,

auto-generated,security-patch,needs-review).

Standardizing PR content speeds reviewer decisions.

Common pitfalls and how to avoid them

Pitfall: low test coverage

Avoidance: Invest in tests before enabling automation for a repo.

Pitfall: automation fatigue

If too many low-value PRs are generated, reviewers burn out.

Avoidance: Tune prioritization, require prefiltering for severity and exposure, and allow teams to opt out.

Pitfall: over-reliance on models

Treat the agent as an assistant, not an oracle.

Avoidance: Enforce non-mergeable PRs and strict human review.

Pitfall: insufficient sandboxing

Running generated code with production credentials is dangerous.

Avoidance: Use ephemeral environments and deny access to secrets.

Pitfall: missing business context

Patches that pass tests may still violate business logic.

Avoidance: Add contract tests and include product owners in reviews for sensitive domains.

Example case studies (hypothetical but realistic)

Case study 1 — SaaS image processing platform

Problem: Infrequent but severe crashes caused by malformed image uploads; exploit requires crafted images.

Solution: Pilot targeted the image processing microservice. The agent generated defensive checks and swapped an unsafe parser with a safer variant; added unit tests that reproduced crash conditions. Extended fuzzing confirmed the crash was eliminated. Reviewers accepted patch; canary rollout showed no regressions. MTTP improved from days to hours.

Case study 2 — eCommerce platform

Problem: Occasional XSS bugs from inconsistent templating escape usage.

Solution: Agent identified duplicated escaping logic, suggested refactor into centralized helper, and added regression tests. After staged rollout and monitoring, XSS incidents dropped, and developers adopted the centralized helper.

Case study 3 — Enterprise middleware with native libs

Problem: Memory corruption in native compression library used by middleware.

Solution: The agent proposed bounds checks and replaced unsafe calls with a vetted safe API. Sanitizers caught no new issues; fuzzing showed resilience. The patch went upstream with full test artifacts. Team avoided costly security incident.

These case studies illustrate the practical and measurable benefits when validation and governance are strong.

Cost considerations and resource planning

Automation is not free. Budget for:

-

Compute: extended fuzzing and model inference can be expensive. Consider spot instances for fuzzing and GPU availability for model calls.

-

Storage: preserving artifacts and logs requires secure, versioned storage.

-

Engineering time: building harnesses, tests, and maintaining pipelines takes effort.

-

Governance overhead: policy development, audits, and compliance work.

Offset these costs by quantifying time savings in triage and patching, reduction in incident costs, and improved developer productivity.

14. Training and cultural change

Success depends on people:

-

Train reviewers to read automated PRs and validation artifacts.

-

Involve product owners early for business-critical flows.

-

Use generated patches as learning material in post-mortems and brown bag sessions.

-

Promote test writing as a core skill — high testability increases automation safety.

Culture change is gradual — celebrate small wins and share success stories.

Long-term maintenance and continuous improvement

Automation pipelines need maintenance:

-

Tune detection thresholds to reduce noise.

-

Update fuzz harnesses as features change.

-

Re-evaluate model versions periodically and re-run archived findings if a model upgrade occurs.

-

Archive artifacts for a defined period and keep datasets for controlled model fine-tuning if applicable.

-

Audit and compliance reviews on schedule.

Make pipeline upkeep part of standard engineering work, not an afterthought.

Final checklist to get started (one page)

-

Pick a pilot module with tests and known issues.

-

Provision sandboxed CI runners and artifact storage.

-

Add fuzz harnesses and sanitizers where applicable.

-

Define human approval matrix and non-mergeable rules.

-

Set KPIs (MTTP, validated patch ratio, regression rate).

-

Run a short pilot (4–8 weeks), measure, and iterate.

-

Document escalation paths and rollback steps.

-

Plan phased rollout if pilot meets targets.

Closing thoughts

Automated repair agents like CodeMender represent a practical leap in how software is secured and maintained. They promise faster remediation, better use of engineering talent, and stronger products — but they demand mature practices: tests, validation, governance, and cultural buy-in. For software companies that invest in these pillars, CodeMender-style automation is not a silver bullet but a powerful multiplier: it scales expertise, makes triage more efficient, and allows human teams to focus on higher-value tasks.

Start small, validate aggressively, and keep humans firmly in the decision loop. If you do this, you’ll gain the advantages of autonomous patch synthesis while avoiding its pitfalls. That balance is the difference between risky automation and transformative capability.

Recent Posts

- The Foundation Crisis: Why Hiring AI Specialists Before Data Engineers is Setting Companies Up for Failure

- ERP vs CRM vs Custom Platform: What Does Your Business Actually Need?

- The AI Enthusiasm Gap: Bridging Corporate Optimism with Public Skepticism in Enterprise AI Adoption

- The Future of AI-Powered Development: How Cursor Plans to Compete Against Tech Giants

- How Much Does Custom Software Development Cost in 2025? Real Numbers & Breakdown