
2026 Playbook for Software Development — LLMs’ Roadmap for Languages, Skills & AI

We queried several leading LLMs about what software companies must do in 2026 and synthesized their answers into a single practical playbook. Read on for the consensus trends, which programming languages will deliver the most value, the skills teams should prioritize, and a step-by-step approach to safely embed AI into your development lifecycle. Use this guide to align hiring, tooling, and governance for the year ahead.

ChatGPT: 2026 Prognosis for Software Development Companies — what to do, what will trend, and how to use AI well

Short version: 2026 will be the year development teams move from experimenting with AI to operationalizing it across the stack while also wrestling with stronger governance, rising security expectations, and tighter cost/efficiency pressures. Companies that pair pragmatic AI adoption (tooling + guardrails) with investment in cloud-native architectures, observability, and developer skill transitions (TypeScript/Go/Rust + ML-aware Python) will outcompete peers. Below I explain the big trends, the programming languages most likely to matter, what teams should learn, and a step-by-step playbook for using AI in software development — including pitfalls and governance you can’t ignore.

Big-picture trends for 2026 (the context)

- AI moves from “helpful” to “core infrastructure.” AI tools are already embedded in many dev workflows (code completion, tests, triage); in 2026 expect them to control entire sub-processes (automated PR handling, test generation, release-health monitoring), not just single-line completions. Survey data show adoption is high and daily use is common among professional developers. (Source: survey.stackoverflow.co)

- Governance, safety and compliance become hard requirements. As teams give AI more authority (automated merges, infra changes), regulators and customers will demand traceability, provenance and risk management. Expect audits for model lineage, data sources, and decision logs to be part of procurement. Emerging protocols and enterprise standards aim to make models auditable. (Source: TechRadar)

- Cloud-native and edge patterns accelerate. Architectures emphasizing modular microservices, serverless, and edge compute will be the preferred targets for AI-driven features (low-latency inference, localized personalization). This increases the value of robust CI/CD, observability and automated rollback mechanisms. (Source: IBM)

- Security and supply-chain risk rise with AI and dependency growth. AI-generated code, reused model components, and third-party packages need stronger SCA (software composition analysis), secret scanning, and runtime protections. “Vibe coding” (fast AI-assisted assembly) raises speed but also risk unless paired with controls. (Source: WIRED)

What programming languages will matter in 2026

1. Python — still essential (AI, data, automation).

Python remains the lingua franca for ML, data pipelines, automation scripts, and many backend services; it continues to lead GitHub activity in recent reports. For any team doing AI/ML product work, Python remains indispensable. (Source: The GitHub Blog)

2. JavaScript / TypeScript — front-end + edge logic.

TypeScript continues to dominate client-side and serverless Node.js work: it’s the de facto language for product-facing features, edge functions, and, increasingly, tooling around observability and developer UX. Stack Overflow usage stats still show wide adoption. (Source: survey.stackoverflow.co)

3. Go — cloud services, infra and performance.

Go is the pragmatic choice for cloud-native services, gateways, and tools that must be lightweight, concurrent, and easy to deploy. Expect continued use in developer platforms, CI/CD agents, and service meshes.

4. Rust — systems & security-sensitive components.

Rust’s adoption keeps growing where memory safety and performance matter: cryptography, low-level agents, and high-throughput services. Teams building high-assurance modules or replacing C/C++ components will favor Rust. Stack Overflow surveys continue to show high “love” for Rust. (Source: survey.stackoverflow.co)

5. Java / C# — enterprise backbone.

Large enterprises and regulated industries retain significant Java and .NET codebases. 2026 is about integrating AI into these stacks (APIs, model-serving, feature stores) rather than wholesale rewriting.

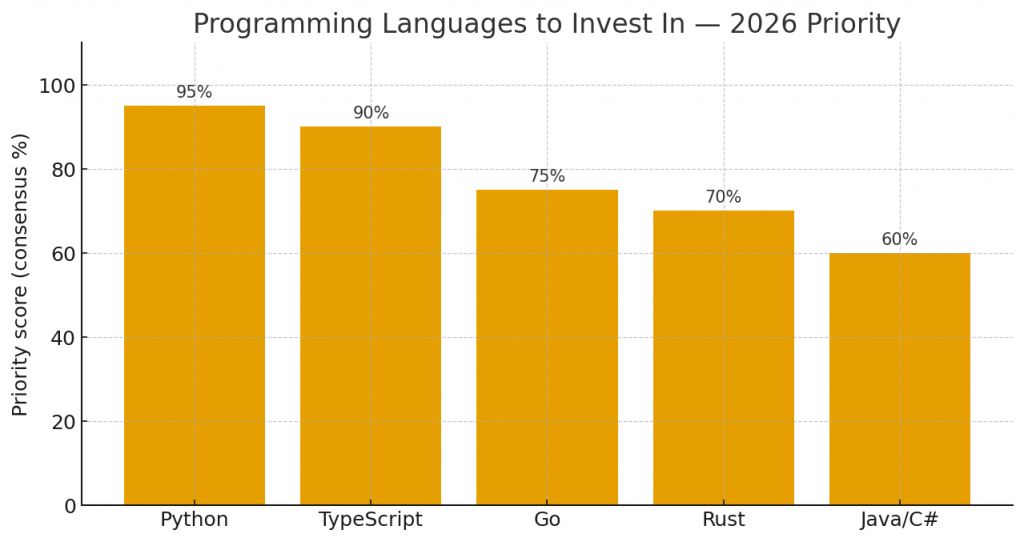

Quick takeaway on languages: invest in Python + TypeScript for the fastest ROI (AI + product), keep Go/Rust skills for infrastructure and security-critical modules, and maintain Java/C# expertise where the business still runs monoliths.

What dev teams should learn in 2026 (skills roadmap)

- AI-first engineering skills

  - Prompt engineering is table stakes, but go beyond prompts: learn chain-of-thought design, prompt testing, and prompt-decomposition patterns.

  - Learn how to evaluate model outputs: bias checks, hallucination tests, and automated verification against a gold dataset.

- ML-Ops and model lifecycle

  - Model packaging, versioning, drift detection, retraining pipelines, and deployment (online vs. batch). Adopt model registries and reproducible packaging; the emerging Model Context Protocol (MCP), which standardizes how models connect to tools and data sources, is a helpful pattern for making those integrations reproducible. (Source: TechRadar)

- Secure software supply chain

  - Dependency management, SBOMs, SCA tools, and secrets management. AI introduces new untrusted inputs, so treat model artifacts like binaries.

- Observability & Site Reliability for AI

  - Track ML-specific metrics (feature distributions, inference latency, error drift, human override rates) alongside classic SRE metrics.

- Cloud-native patterns

  - Serverless, sidecars for inference, fast CI/CD, and infrastructure as code. Developers must be comfortable with containerization and function-as-a-service workflows.

- Soft skills

  - Product judgment, AI-ethics literacy, and change management. Teams that balance engineering speed with governance win.
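The evaluation skill listed above (automated verification against a gold dataset) can be sketched as a small CI gate. This is a minimal illustration, assuming the model is wrapped as a plain Python callable and that exact match after normalization is an acceptable scorer; the names `normalize`, `gold_accuracy`, and `passes_gate` and the 0.9 threshold are hypothetical. Real suites usually add semantic or rubric-based scoring on top.

```python
from typing import Callable


def normalize(text: str) -> str:
    # Case-fold and collapse whitespace so formatting noise is not counted as a failure.
    return " ".join(text.lower().split())


def gold_accuracy(model: Callable[[str], str], gold: dict[str, str]) -> float:
    """Fraction of gold prompts whose normalized model output equals the expected answer."""
    if not gold:
        raise ValueError("gold dataset is empty")
    hits = sum(
        normalize(model(prompt)) == normalize(expected)
        for prompt, expected in gold.items()
    )
    return hits / len(gold)


def passes_gate(model: Callable[[str], str], gold: dict[str, str], threshold: float = 0.9) -> bool:
    # Hypothetical CI rule: fail the build when gold-set accuracy drops below the threshold.
    return gold_accuracy(model, gold) >= threshold
```

In CI, a stub or a pinned model version would be passed as `model`, and a `False` result from `passes_gate` would fail the pipeline before the change reaches production.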

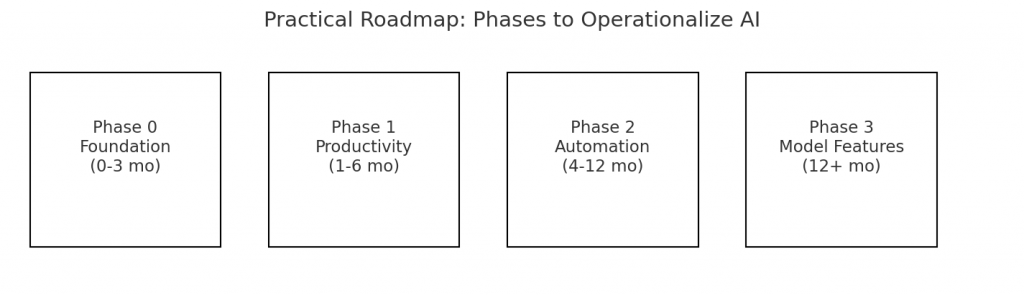

How to use AI in software development — practical playbook

Phase 0 — Foundation (months 0–3)

- Inventory: map where code is written, which tools are used, and where human-in-the-loop matters.

- Governance: create a model-use policy (approved models, data rules, logging requirements).

- Tooling baseline: add SCA, secret scanning and a WAF; enable code review guardrails for AI-suggested patches.

Phase 1 — Productivity (months 1–6)

- Adopt CI-integrated AI assistants: code completion (Copilot-like), test generation, and unit-test scaffolding. Ensure all AI-suggested commits require human approval and are tagged in commit messages for provenance. Stack Overflow and industry surveys show daily usage rates rising; use that momentum, but require human review. (Source: survey.stackoverflow.co)

- Use AI to generate test cases, fuzz harnesses, and performance-stress templates; automating these improves coverage fast.
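One way to enforce the rule that AI-suggested commits are tagged for provenance and human-approved is a commit-message check run in CI or in a Git `commit-msg` hook. The sketch below assumes a hypothetical team convention of Git-style trailers, `AI-Assisted: yes|no` and `Reviewed-by:`; the trailer names and the `check_commit_message` function are illustrative, not a standard.

```python
import re

# Hypothetical trailer convention; adapt the names to your own provenance policy.
AI_TRAILER = re.compile(r"^AI-Assisted:[ \t]*(yes|no)[ \t]*$", re.IGNORECASE | re.MULTILINE)
REVIEW_TRAILER = re.compile(r"^Reviewed-by:[ \t]*\S.*$", re.MULTILINE)


def check_commit_message(message: str) -> list[str]:
    """Return policy violations for one commit message; an empty list means it passes."""
    problems = []
    match = AI_TRAILER.search(message)
    if match is None:
        # Every commit must declare whether AI was involved.
        problems.append("missing AI-Assisted trailer")
    elif match.group(1).lower() == "yes" and REVIEW_TRAILER.search(message) is None:
        # AI-assisted commits additionally need a named human reviewer.
        problems.append("AI-assisted commit lacks a Reviewed-by trailer")
    return problems
```

Git passes a `commit-msg` hook the path of the file holding the proposed message, so a hook built on this sketch would read that file and exit non-zero whenever the returned list is non-empty.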

Phase 2 — Automation (months 4–12)

- Start automated PR triage and low-risk auto-merge for trivial fixes (typos, docs, dependency bumps) with human fallback paths.

- Automate release-health checks: use models to summarize failing CI runs, map flaky tests, or suggest remediation steps.
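Mapping flaky tests does not need a model for the first pass: a common heuristic flags any test that both passed and failed on the same commit, since the code did not change but the outcome did. A minimal sketch, assuming CI results are available as hypothetical `(commit_sha, test_name, passed)` tuples; a model could then summarize or prioritize the flagged list.

```python
from collections import defaultdict


def find_flaky_tests(runs: list[tuple[str, str, bool]]) -> set[str]:
    """Flag tests that both passed and failed on the same commit.

    runs: hypothetical CI records of (commit_sha, test_name, passed).
    """
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for commit_sha, test_name, passed in runs:
        outcomes[(commit_sha, test_name)].add(passed)
    # Two distinct outcomes (True and False) for identical code means the test is nondeterministic.
    return {test_name for (_, test_name), seen in outcomes.items() if len(seen) == 2}
```

Grouping by commit is the key design choice: a test that fails on one commit and passes on another may simply reflect a real regression and its fix, so it is deliberately not flagged.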

Phase 3 — Model-enabled features (12+ months)

- Ship customer-facing AI features with A/B testing and clear rollback. Instrument user-facing models with monitoring for drift, bias metrics and user feedback loops.

- For mission-critical automation (auto deployment, infra changes), enforce strong human-in-the-loop gating and audit logs.
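Drift monitoring for a user-facing model often starts with a distribution-comparison score per input feature. The sketch below computes the Population Stability Index (PSI) between a baseline sample and a live sample, assuming equal-width bins taken from the baseline's range; the bin count and the small `eps` floor for empty bins are illustrative choices. A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but thresholds should be tuned per feature.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` relative to the baseline `expected` sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid a zero width when the baseline is constant

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the log term stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    # PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%).
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A scheduled job could compute this for each feature against the training-time baseline and raise an alert (or trigger the rollback path) when the score crosses the team's chosen threshold.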

Governance & safety — non-negotiables

- Traceability: every AI-influenced code change, generated artifact, or model decision must carry metadata: model version, prompt, data snapshot, and author. This is essential for audits and incident analysis. (Source: TechRadar)

- Provenance & SBOMs for models: treat model files like artifacts; track their lineage, training data characteristics (at a high level), and dependency bills of materials.

- Testing for hallucinations & bias: include negative tests and robustness suites in CI; measure model outputs against golden datasets before production.

- Responsible AI team: create a cross-functional committee (engineering, legal, product, security) to oversee high-risk uses and approvals.
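The traceability requirement (model version, prompt, data snapshot, and author on every AI-influenced change) maps naturally onto a small structured log record. A minimal sketch, assuming a JSON-lines audit log; the `AIProvenance` class and its field names are hypothetical, and the prompt hash is an added convenience so records can be matched without comparing full prompts.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AIProvenance:
    """Hypothetical audit record attached to an AI-influenced change or decision."""
    model_version: str
    prompt: str
    data_snapshot: str
    author: str

    def prompt_sha256(self) -> str:
        # A stable fingerprint lets auditors match records without comparing full prompts.
        return hashlib.sha256(self.prompt.encode("utf-8")).hexdigest()

    def to_log_line(self) -> str:
        record = asdict(self)
        record["prompt_sha256"] = self.prompt_sha256()
        # sort_keys gives a deterministic layout; json.dumps emits one line per record.
        return json.dumps(record, sort_keys=True)
```

Appending `to_log_line()` output to an append-only store gives incident responders a queryable trail; the frozen dataclass keeps a record from being mutated after it is created.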

Security considerations (practical)

- Scan generated code. Any AI-produced code must pass existing security gates: static analysis (SAST), dependency checks, and manual review focusing on injection, insecure defaults, and secrets leaks.

- Limit model data exposure. Don’t send secrets or PII to public APIs. Use on-prem or private inference for sensitive code and data.

- Monitor for malicious patterns. Attackers will use AI to craft code-based attacks; monitor for unusual behavior, new usage patterns, and anomalous commits.
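A simple guard for “don’t send secrets or PII to public APIs” is a redaction pass applied to every prompt before it leaves your network. The patterns below are illustrative only (the `AKIA…` shape of an AWS access key ID, `key = value` style credentials, and email addresses) and far from exhaustive; treat this as a last line of defense behind a dedicated secret scanner, not a replacement for one. The `redact` function and its labels are hypothetical.

```python
import re

# Illustrative patterns only; they will miss many secret and PII formats.
REDACTION_PATTERNS = [
    ("AWS_ACCESS_KEY_ID", re.compile(r"\bAKIA[0-9A-Z]{16}\b")),
    ("CREDENTIAL", re.compile(r"(?i)\b(?:api[_-]?key|secret|password|token)\b\s*[:=]\s*\S+")),
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
]


def redact(prompt: str) -> tuple[str, int]:
    """Replace likely secrets and PII with placeholders; return (clean_text, hit_count)."""
    total = 0
    for label, pattern in REDACTION_PATTERNS:
        prompt, count = pattern.subn(f"[REDACTED:{label}]", prompt)
        total += count
    return prompt, total
```

Returning the hit count lets a gateway log or block prompts that triggered redaction, which is often more useful than silently forwarding the cleaned text.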

Tooling & platform recommendations

- Model orchestration: adopt a model registry (MLflow or cloud-vendor equivalents) and automate retraining pipelines. Consider MCP-style standardized interfaces between models, tools, and data to keep integrations reproducible. (Source: TechRadar)

- Developer UX: integrate AI into IDEs, PR bots, and ticketing so suggestions land where developers work.

- Observability: expand APM/SRE to include ML telemetry such as inference times, confidence scores, and human override rates.
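The ML telemetry named in the observability item can sit next to existing APM counters. A minimal in-process sketch, assuming each inference reports its latency and whether a human overrode the result; the `InferenceTelemetry` class is hypothetical, and in production these values would typically be exported to your metrics system rather than kept in memory.

```python
import statistics
from dataclasses import dataclass, field


@dataclass
class InferenceTelemetry:
    """Counters for ML-specific signals that complement classic APM metrics."""
    latencies_ms: list[float] = field(default_factory=list)
    overrides: int = 0  # times a human rejected or edited the model's output
    total: int = 0

    def record(self, latency_ms: float, overridden: bool) -> None:
        self.latencies_ms.append(latency_ms)
        self.total += 1
        self.overrides += int(overridden)

    def override_rate(self) -> float:
        # A rising override rate is an early sign that users no longer trust the model.
        return self.overrides / self.total if self.total else 0.0

    def p95_latency_ms(self) -> float:
        if len(self.latencies_ms) < 2:
            raise ValueError("need at least two latency samples")
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
        return statistics.quantiles(self.latencies_ms, n=20)[-1]
```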

What NOT to do (common pitfalls)

- Don’t blindly accept AI-generated changes into production without human review. Surveys show widespread AI use but also show organizations reporting losses when governance is weak. Short-term speed without controls is risky. (Source: Reuters)

- Don’t expose secrets in prompts or use unvetted public models for sensitive domains.

- Avoid treating AI as a headcount multiplier without investing in training and change management.

Quick roadmap for engineering leaders (90-day plan)

Week 1–2: Inventory dependencies, enable secret scanning + SCA, and mandate MFA.

Week 3–6: Roll out AI-assistants to a pilot group with enforced commit tagging and SAST gates.

Week 7–12: Build model-use policy, set up a model registry and logging of AI-derived artifacts.

Month 3+: Automate low-risk flows, expand to feature experiments, and iterate on governance.

Final prognosis

2026 will reward software companies that pair AI-driven developer productivity with enterprise-grade governance. The winners will not be those who “use the fanciest models” but those who integrate AI thoughtfully: automated testing, model lifecycle control, and robust security/supply-chain practices. Languages like Python and TypeScript will remain the fastest routes to deliver AI-powered products; Go and Rust will be crucial where performance and safety matter. Teams that invest early in ML-ops, observability, and reskilling will capture disproportionate value — while those that chase short-term velocity without controls will quickly pay the price.

DeepSeek: The 2026 Software Development Landscape: A Strategic Guide for Companies

The software development industry is not just evolving; it’s undergoing a fundamental metamorphosis. The approaches that brought success in the early 2020s are rapidly becoming obsolete. For software development companies looking to not just survive but thrive in 2026, the time to adapt is now.

The core differentiator will no longer be just writing code, but intelligently integrating, securing, and leveraging AI to solve complex business problems. Here’s your strategic guide to preparing for 2026.

The Macro-Shift: From Code-Centric to AI-Augmented Development

The biggest trend is the maturation of AI from a buzzword to an integral part of the software development lifecycle (SDLC). AI won’t replace developers, but companies that use AI will inevitably replace those that don’t. The focus will shift from pure programming to:

- Prompt Engineering & Model Orchestration: The ability to effectively instruct and chain together AI models (e.g., OpenAI’s GPT, Anthropic’s Claude, and open-source models like Llama) will become a core skill.

- AI-Powered Development Environments: Tools like GitHub Copilot, Cursor, and Amazon CodeWhisperer (now part of Amazon Q Developer) will become the default, acting as a real-time pair programmer that handles boilerplate, suggests entire functions, and helps debug code.

- Generative AI for Broader Tasks: AI will be used to generate not just code, but also tests, documentation, architecture diagrams, and even product requirement documents from natural language descriptions.

Key Trends Shaping 2026

- The Rise of Platform Engineering & Internal Developer Platforms (IDPs):

  - What it is: A dedicated team that builds and maintains a curated, self-service platform for developers within the company. This platform standardizes tools, automates infrastructure, and provides “golden paths” for deploying applications.

  - Why it Matters: It drastically improves developer productivity and experience by abstracting away the complexity of Kubernetes, cloud services, and CI/CD pipelines. By 2026, top companies will compete on the quality of their internal platforms.

- AI Integration as a Standard Feature:

  - What it is: Nearly every new software product, from enterprise CRMs to consumer fitness apps, will have some form of AI/ML capability. This could be a smart recommendation engine, a natural language search interface, or automated content summarization.

  - Why it Matters: “AI-powered” will become a table-stakes expectation, not a differentiator. Companies must build the competency to seamlessly integrate these features.

- The Shift-Left on Security & AI Safety (DevSecOps & AISecOps):

  - What it is: Security is no longer a final step but is integrated from the very beginning of the SDLC. With AI, this expands to “AISecOps”: specifically testing for model bias, ensuring data privacy in training sets, and preventing prompt injection attacks.

  - Why it Matters: The cost of a data breach or a biased AI model can be catastrophic. Proactive security will be a non-negotiable part of the development process and a major selling point for clients.

- WebAssembly (WASM) Goes Mainstream:

  - What it is: A binary instruction format that allows code written in multiple languages (like Rust, C++, Go) to run at near-native speed in a web browser and on servers.

  - Why it Matters: WASM enables complex applications like photo editors, CAD software, and high-performance games to run seamlessly in the browser. It also promises a future of truly portable, language-agnostic software components.

Programming Languages: The 2026 Power Rankings

The language landscape is being reshaped by the demands of performance, safety, and AI.

- Python: The undisputed king for AI, Machine Learning, and data science. Its vast ecosystem (TensorFlow, PyTorch, scikit-learn) and simplicity will keep it at the top for backend and analytical workloads.

- JavaScript/TypeScript: The bedrock of web development. TypeScript will be the de facto standard for any serious front-end or full-stack development due to its type safety and enhanced developer experience.

- Rust: The rising star. Valued for its memory safety, performance, and concurrency features, Rust is perfect for system-level programming, WebAssembly, and building critical, secure infrastructure. It’s being adopted by Linux, Windows, and major web browsers.

- Go (Golang): Beloved for its simplicity, performance, and built-in concurrency model. It remains the go-to language for building scalable network services, APIs, and cloud-native infrastructure and tools (Docker and Kubernetes are both written in Go).

- Java & C#: The enterprise stalwarts. They are not going away. They continue to power massive, mission-critical systems worldwide and are modernizing with faster release cycles and better performance.

What to Learn: Developers should be proficient in Python for AI tasks, strong in TypeScript for the web, and consider learning Rust or Go for high-performance backend systems.

Strategic Imperatives: What Your Company Should Do Now

- Invest Heavily in AI Fluency:

  - For Developers: Train your teams on using AI coding assistants effectively. Encourage experimentation with different AI models via APIs (OpenAI, Anthropic, Mistral).

  - For Leadership: Run workshops to identify where AI can create the most value in your products and internal processes. Start small with a pilot project.

- Build a “Platform Mindset”:

  - Assess your current development workflow. Where are the bottlenecks? Start building a centralized, self-service platform that automates provisioning, deployment, and monitoring. This is a long-term investment that pays massive dividends in productivity.

- Double Down on Security & Ethics:

  - Integrate security scanning tools (SAST, DAST) directly into your CI/CD pipeline.

  - Develop a formal policy for AI ethics and safety, covering data sourcing, model testing, and output monitoring.

- Embrace the Polyglot Reality:

  - Stop trying to standardize on one language. Build teams that can leverage the right tool for the job: Python for data/ML, TypeScript for the front-end, and Rust/Go for performance-critical backends.

- Cultivate “T-Shaped” Talent:

  - Hire and develop developers who have deep expertise in one area (the vertical bar of the T) but also possess broad knowledge across the stack, including AI, security, and DevOps (the horizontal bar). This fosters flexibility and better collaboration.

How to Use AI in Software Development: A Practical Framework

Integrate AI across the entire SDLC:

- Planning & Design: Use generative AI (e.g., GPT-4, Claude 3) to brainstorm user stories, generate initial architecture diagrams, and identify potential edge cases.

- Coding: Mandate the use of AI pair programmers like GitHub Copilot. They reduce boilerplate, speed up development, and help developers discover new libraries and APIs.

- Testing: Use AI to automatically generate unit and integration tests. AI can also analyze code to identify areas with a high probability of bugs.

- Debugging & Optimization: Paste error logs and code snippets (with secrets and personal data removed) into AI chatbots for fast root-cause analysis and solution suggestions. Use AI-powered profilers to find performance bottlenecks.

- Documentation & Maintenance: Automatically generate and update documentation from code and commit messages. Use AI to analyze legacy codebases and suggest refactoring strategies.

Conclusion: The Winning Formula for 2026

The software development company of 2026 will be defined by its strategic embrace of AI, its investment in developer productivity through platform engineering, and its unwavering commitment to security and ethical practices. The winners will be those who see AI not as a threat, but as the most powerful tool ever added to the developer’s toolkit.

The future is not just about writing code; it’s about architecting intelligent systems. Start building that capability today.

Claude AI: Software Development in 2026: A Strategic Roadmap for Companies

As we approach 2026, software development companies face a landscape transformed by artificial intelligence, evolving architectural patterns, and shifting market demands. This article explores the key trends, technologies, and strategies that will define success in the coming year.

The AI-First Development Paradigm

The most significant shift in 2026 will be the complete integration of AI into every stage of software development. Companies that treat AI as optional will fall behind those who embed it into their core workflows.

AI-Assisted Development Tools

AI coding assistants have evolved beyond simple autocomplete. Modern AI tools now handle entire features, conduct code reviews, write comprehensive tests, and refactor legacy systems. Developers who learn to effectively collaborate with these tools will be 3-5x more productive than those who resist adoption.

What companies should do:

- Invest in enterprise AI coding platforms and train developers on prompt engineering for code generation

- Establish clear guidelines for AI-generated code review and testing standards

- Create hybrid workflows where AI handles boilerplate while developers focus on architecture and business logic

- Build internal knowledge bases that AI tools can reference for company-specific patterns

AI in Product Development

Beyond coding, AI will drive product decisions through advanced analytics, user behavior prediction, and automated A/B testing. Companies should integrate AI into their product management processes to identify features users want before they articulate them.

Programming Languages: The 2026 Rankings

The Dominant Forces

Python continues its reign as the most versatile language, strengthened by its dominance in AI/ML, data science, and backend development. Every software company should have strong Python expertise.

TypeScript has become the de facto standard for web development, with JavaScript largely relegated to legacy maintenance. Its type safety and tooling ecosystem make it essential for modern frontend and full-stack development.

Rust enters the mainstream for systems programming, cloud infrastructure, and performance-critical applications. Companies building high-performance services or replacing C/C++ systems should invest in Rust talent now.

Go remains the language of choice for cloud-native applications, microservices, and DevOps tooling. Its simplicity and performance make it ideal for backend services at scale.

Rising Stars

Zig gains traction as a simpler alternative to Rust for systems programming, while Mojo emerges as a potential Python successor for AI workloads, offering Python-like syntax with C-level performance.

What to Deprioritize

Traditional enterprise languages like Java and C# remain stable but aren’t where innovation happens. Ruby on Rails continues its slow decline. Companies should maintain but not expand these skill sets.

Architectural Trends for 2026

Edge Computing and Distributed Systems

The shift toward edge computing accelerates as applications move closer to users. Companies should design for distributed-first architectures where compute happens at the edge, with cloud serving as coordination rather than execution.

Key skills needed:

- Distributed systems design and consistency models

- Edge runtime platforms (Cloudflare Workers, AWS Lambda@Edge, Deno Deploy)

- Event-driven architectures and message streaming

- Data synchronization and conflict resolution
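Data synchronization and conflict resolution at the edge is often handled with CRDTs (conflict-free replicated data types), which let replicas accept writes independently and merge later without coordination. The grow-only counter below is one of the simplest CRDTs and is shown only as an illustration; the replica IDs are hypothetical, and real systems typically rely on a CRDT library or a database with built-in replication.

```python
class GCounter:
    """Grow-only counter CRDT.

    Each replica increments only its own slot; merge takes the per-replica maximum,
    so merges are commutative, associative, and idempotent, and replicas converge.
    """

    def __init__(self, replica_id: str):
        self.replica_id = replica_id
        self.counts: dict[str, int] = {}

    def increment(self, n: int = 1) -> None:
        if n < 0:
            raise ValueError("a grow-only counter cannot decrease")
        self.counts[self.replica_id] = self.counts.get(self.replica_id, 0) + n

    def merge(self, other: "GCounter") -> None:
        # Keep the highest count seen for every replica; no write is ever lost.
        for rid, count in other.counts.items():
            self.counts[rid] = max(self.counts.get(rid, 0), count)

    def value(self) -> int:
        return sum(self.counts.values())
```

Because merging twice changes nothing, edge nodes can resend their state over unreliable links without double-counting, which is the property that makes this pattern attractive for intermittently connected deployments.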

Platform Engineering

The “Platform as a Product” movement matures in 2026. Companies that build internal developer platforms (IDPs) to abstract infrastructure complexity will ship features faster and more reliably.

Successful platform teams create self-service capabilities for developers while maintaining security, compliance, and cost controls. This requires dedicated platform engineering roles separate from traditional DevOps.

Micro-Frontends and Modular Architectures

Frontend architectures mirror backend microservices patterns. Teams independently deploy interface components while maintaining cohesive user experiences. This enables larger teams to work in parallel without blocking each other.

Essential Skills for Development Teams

AI Collaboration and Prompt Engineering

Developers must learn to work with AI rather than compete against it. This means:

- Writing clear specifications that AI can translate into code

- Reviewing and refining AI-generated solutions effectively

- Understanding when to use AI assistance versus handcrafted solutions

- Training custom models on company codebases for domain-specific assistance

Full-Stack Cloud Native Development

The distinction between frontend, backend, and infrastructure engineers blurs. Modern developers need end-to-end understanding of cloud platforms, serverless architectures, and infrastructure-as-code.

Priority learning areas:

- Serverless and edge computing platforms

- Container orchestration (Kubernetes remains dominant)

- Infrastructure as Code (Terraform, Pulumi)

- Observability and distributed tracing

- Security-first development practices

Data Engineering Fundamentals

Every developer needs basic data engineering skills as applications become increasingly data-intensive. Understanding data pipelines, streaming systems, and analytics integrations moves from specialized knowledge to baseline competency.

Security in an AI-Augmented World

AI-generated code introduces new security challenges. Vulnerabilities can propagate across projects when developers blindly accept AI suggestions. Companies need:

- Automated security scanning integrated into AI coding workflows

- Training on reviewing AI-generated code for security issues

- Zero-trust architectures as standard practice

- Supply chain security for both traditional dependencies and AI models

The Economics of Software Development in 2026

Productivity Multipliers

AI enables smaller teams to build more sophisticated products. A well-organized team of 10 developers with AI assistance can match the output of 30 traditional developers. This creates pressure to restructure teams around fewer, more senior engineers.

The Premium on Architecture Skills

As AI handles implementation, architectural decision-making becomes the primary differentiator. Senior engineers who excel at system design, technology selection, and technical strategy will command premium compensation.

Open Source’s Evolution

Open source remains critical, but economic models shift toward dual licensing and hosted services. Companies should contribute to ecosystems they depend on while building sustainable business models around open source.

Actionable Strategy for 2026

Immediate Priorities (Q1 2026)

- Audit AI readiness: Evaluate current AI tool adoption and identify gaps

- Upskill leadership: Ensure technical leaders understand AI capabilities and limitations

- Modernize languages: Begin transitioning teams toward TypeScript, Rust, or Go if still primarily using legacy languages

- Establish platform teams: Create dedicated teams for internal developer experience

Medium-Term Goals (2026)

- Build AI-native workflows: Redesign development processes around AI collaboration

- Invest in edge capabilities: Develop expertise in edge computing and distributed systems

- Create learning programs: Structured training for AI-assisted development and modern architectures

- Rethink team structures: Optimize for smaller, more autonomous teams with broader skill sets

Long-Term Vision (Beyond 2026)

Position for a future where:

- AI handles most routine development tasks

- Human developers focus on creativity, architecture, and product strategy

- Development teams operate as force multipliers through effective AI collaboration

- Software quality and security improve through AI-assisted analysis

Conclusion

2026 will be remembered as the year software development fully transformed into an AI-augmented discipline. Companies that embrace this shift—investing in the right languages, architectures, and skills—will thrive. Those that resist will struggle to compete on speed, quality, and innovation.

The winners won’t be those with the most developers, but those who most effectively combine human creativity with AI capabilities. The question isn’t whether to adopt AI in software development, but how quickly and thoroughly you can integrate it into your culture and workflows.

Start preparing now. The future arrives quickly in our industry, and 2026 is already on the horizon.

Mistral AI: Software Development in 2026: Trends, Strategies, and the AI Revolution

The software development landscape is evolving at breakneck speed. By 2026, companies that fail to adapt to new technologies, methodologies, and market demands risk falling behind. This article outlines the key trends, programming languages, and AI-driven strategies that will define success for software development companies in the coming year.

1. Key Trends Shaping Software Development in 2026

A. AI-Augmented Development

- AI as a Co-Pilot: AI tools will not replace developers but will augment their workflows—automating boilerplate code, suggesting optimizations, and even generating entire modules based on natural language prompts.

- AI-Driven Testing and Debugging: AI will identify bugs, suggest fixes, and optimize performance, reducing time-to-market and improving software quality.

- Personalized Development Environments: AI will tailor IDEs and workflows to individual developers’ habits and project requirements.

B. Low-Code/No-Code Expansion

- Enterprise Adoption: Large organizations will increasingly use low-code platforms for internal tools, rapid prototyping, and business process automation.

- Developer Collaboration: Professional developers will integrate low-code solutions into their workflows, using them for UI/UX design, workflow automation, and legacy system modernization.

C. Edge Computing and IoT Proliferation

- Decentralized Processing: With the rise of IoT devices, software will need to run efficiently on edge devices, requiring optimized, lightweight code and new security paradigms.

- Real-Time Data Processing: Applications in healthcare, manufacturing, and smart cities will demand real-time analytics and decision-making at the edge.

D. Sustainability and Green Coding

- Energy-Efficient Code: Companies will prioritize writing code that consumes less energy, both for cost savings and to meet regulatory and consumer demands for sustainability.

- Carbon-Aware Development: Tools will emerge to measure and optimize the carbon footprint of software applications.

2. Programming Languages to Watch in 2026

Emerging Contenders:

- Zig and Nim for systems programming and performance-critical applications.

- Elixir for scalable, fault-tolerant distributed systems.

3. What Software Development Companies Should Do in 2026

A. Embrace AI and Automation

- Integrate AI Tools: Adopt AI coding assistants (like GitHub Copilot and Amazon CodeWhisperer), automated testing, and AI-driven DevOps pipelines.

- Upskill Teams: Train developers in AI/ML basics, prompt engineering, and ethical AI use.

- Build AI-First Products: Develop applications with embedded AI features, such as predictive analytics, natural language interfaces, and adaptive UIs.

B. Focus on Security and Compliance

- Shift Left Security: Integrate security testing and compliance checks early in the development lifecycle.

- Zero Trust Architecture: Implement robust authentication, encryption, and access controls, especially for cloud and edge applications.
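Zero trust means every request is authenticated on its own merits, regardless of where on the network it comes from. As a minimal sketch of that one idea (not a full zero-trust stack), each service call can carry a short-lived HMAC signature that the receiver verifies before doing any work. The shared key, the fields being signed, and the 300-second freshness window are assumptions. Production systems more often rely on mTLS or signed tokens issued by an identity provider.

```python
import hashlib
import hmac
import time
from typing import Optional

MAX_AGE_SECONDS = 300  # assumed window; bounds how long a captured request can be replayed


def sign(key: bytes, method: str, path: str, body: bytes, timestamp: int) -> str:
    """Hex-encoded HMAC-SHA256 over method, path, timestamp and body."""
    message = b"\n".join([method.encode(), path.encode(), str(timestamp).encode(), body])
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(key: bytes, method: str, path: str, body: bytes, timestamp: int,
           signature: str, now: Optional[int] = None) -> bool:
    """Reject stale requests, then compare signatures in constant time."""
    current = int(time.time()) if now is None else now
    if abs(current - timestamp) > MAX_AGE_SECONDS:
        return False
    expected = sign(key, method, path, body, timestamp)
    return hmac.compare_digest(expected, signature)
```

Because the receiver re-checks every call, a request from "inside" the network gets no implicit trust. `hmac.compare_digest` is used instead of `==` so the comparison does not leak timing information.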

C. Adopt Modern Architectures

- Microservices and Serverless: Continue the shift toward modular, scalable architectures.

- Event-Driven Design: Use event sourcing and CQRS for real-time, data-intensive applications.
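In event sourcing, state changes are stored as an append-only log of events. CQRS separates the code that accepts commands (writes) from the read models that answer queries. The minimal in-memory sketch below shows both halves. The bank-account domain, event names, and classes are illustrative only. A real system would persist the log (for example in a database or streaming platform) and update projections asynchronously.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Event:
    account_id: str
    kind: str    # "Deposited" or "Withdrawn"
    amount: int  # minor units (e.g. cents) to avoid float rounding


def apply_event(balance: int, event: Event) -> int:
    """Fold a single event into a running balance."""
    if event.kind == "Deposited":
        return balance + event.amount
    return balance - event.amount


class EventStore:
    """Append-only event log: the write side's source of truth."""

    def __init__(self) -> None:
        self._events: List[Event] = []
        self._subscribers: List[Callable[[Event], None]] = []

    def append(self, event: Event) -> None:
        self._events.append(event)
        for handler in self._subscribers:
            handler(event)  # synchronous here; usually asynchronous in practice

    def events_for(self, account_id: str) -> List[Event]:
        return [e for e in self._events if e.account_id == account_id]

    def subscribe(self, handler: Callable[[Event], None]) -> None:
        self._subscribers.append(handler)


def current_balance(events: List[Event]) -> int:
    """Rebuild state by replaying the event history."""
    balance = 0
    for event in events:
        balance = apply_event(balance, event)
    return balance


class AccountCommands:
    """Command side: validate intent against replayed state, then record events."""

    def __init__(self, store: EventStore) -> None:
        self.store = store

    def deposit(self, account_id: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.store.append(Event(account_id, "Deposited", amount))

    def withdraw(self, account_id: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        if current_balance(self.store.events_for(account_id)) < amount:
            raise ValueError("insufficient funds")
        self.store.append(Event(account_id, "Withdrawn", amount))


class BalanceView:
    """Query side: a read model updated by projecting each new event."""

    def __init__(self, store: EventStore) -> None:
        self.balances: Dict[str, int] = {}
        store.subscribe(self._project)

    def _project(self, event: Event) -> None:
        previous = self.balances.get(event.account_id, 0)
        self.balances[event.account_id] = apply_event(previous, event)
```

The write side validates commands against state rebuilt from the log. `BalanceView` answers reads without touching the log, which is the separation CQRS describes.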

D. Prioritize Developer Experience (DevEx)

- Streamline Workflows: Reduce friction with better tooling, documentation, and collaboration platforms.

- Foster Innovation: Encourage experimentation with hackathons, R&D labs, and cross-functional teams.

4. How to Use AI in Software Development

A. AI for Code Generation and Review

- Use AI to generate boilerplate code, API stubs, and documentation.

- Leverage AI for real-time code reviews, style suggestions, and vulnerability detection.

B. AI for Testing and QA

- Automate test case generation, execution, and analysis.

- Use AI to predict and prevent bugs before they reach production.

C. AI for Project Management

- AI-driven project management tools will optimize sprint planning, resource allocation, and risk assessment.

D. AI for Personalization and User Experience

- Build applications that adapt to user behavior, preferences, and context using AI/ML models.

5. Skills Developers Should Learn in 2026

- AI/ML Fundamentals: Understanding how to build, train, and deploy models.

- Prompt Engineering: Crafting effective prompts for AI tools and APIs.

- Cloud-Native Development: Mastery of Kubernetes, serverless, and multi-cloud strategies.

- Security Best Practices: Secure coding, threat modeling, and compliance (GDPR, CCPA, etc.).

- Sustainable Coding: Writing energy-efficient, maintainable, and scalable code.

Conclusion: The Future is AI-Augmented and Developer-Centric

In 2026, software development companies that thrive will be those that:

- Harness AI to augment their teams and products.

- Stay agile with modern architectures and languages.

- Prioritize security, sustainability, and developer experience.

The future of software development is not about humans vs. machines, but about humans and machines working together to build better, faster, and more innovative solutions.

Final Conclusion

Across four leading LLM forecasts, a clear picture emerges for 2026: AI will be deeply embedded in development workflows and product features, platform engineering will determine who scales fastest, and security/governance will no longer be optional overhead but a business imperative. This article distills the models’ consensus and divergences into a practical roadmap—covering language priorities (Python, TypeScript, Go, Rust), essential reskilling, ML-Ops and observability, and concrete short- and medium-term actions your engineering leaders should take to capture AI’s upside without inviting catastrophic risk.

What they all agreed on (100% — 4/4)

These are the core signals every model called out — treat them as high-confidence inputs for planning.

- AI becomes core infrastructure, not optional. All models expect AI to be deeply embedded in the SDLC, product features, and developer workflows.

- Governance, safety & regulation rise in importance. Every model warns that regulation, traceability and auditability will be required as AI is operationalized.

- Cloud-native & platform engineering accelerate. Microservices, serverless/edge, internal developer platforms and automated CI/CD are central enablers.

- Security & supply-chain risk increase. Models emphasize software composition, model provenance, secret management and “shift left” security.

- Climate and geopolitical friction matter to strategy. Climate impacts, energy resilience and geopolitical risk (U.S.–China, Russia–Ukraine hotspots) are repeated themes affecting supply chains and costs.

- Reskilling is essential. All models ask companies to invest in new skills (prompt engineering, ML-Ops, observability, cloud-native practices).

- Consensus languages to prioritize. Every model names the same core language set companies should invest in: Python, TypeScript, Go, Rust, plus maintenance of Java/C# stacks.

Strong-but-not-universal themes (75% — 3/4)

Important trends most models emphasized, but not every single one.

- Platform engineering / IDPs as a competitive advantage (3/4). DeepSeek, Claude and Mistral highlight internal developer platforms (IDPs) and “golden paths.” ChatGPT also points to cloud-native patterns, so this is effectively near-universal in practice.

- Edge / WebAssembly / IoT demand (3/4). DeepSeek and Mistral are loudest on WASM and edge; Claude also discussed distributed/edge-like patterns.

- Low-code / no-code expansion (3/4). Mistral and Claude call out low-code uptake; DeepSeek mentions rapid prototyping and platformization, which implies it; ChatGPT is quieter here.

- Focus on developer experience (DevEx) (3/4). Multiple models stress IDE integration, AI pair programmers and DevEx investments.

Where they diverged (major differences in emphasis or scope)

These show the useful nuance — different risk appetites, thematic focus and suggested priorities.

Tone and emphasis:

- ChatGPT: Practical, governance-first, stepwise playbook (focus on ML-Ops, testing, traceability).

- DeepSeek: Strategic, systems-level framing (“Great Adaptation”), strong on platform engineering, WASM, and policy/regional dynamics.

- Claude: Broad and encyclopedic; includes niche tail risks (quantum pressure on cryptography, space, health-system details) and more granular policy/regulatory consequences.

- Mistral: Geopolitics- and security-first, with a “new cold war” framing and emphasis on social movements, mental health and sustainability; also covers low-code and edge.

Tail risks & exotic tech:

- Claude included the most “long-tail” items (quantum, space, health specifics).

- Mistral focused more on social/political friction and rapid geostrategic decoupling.

- DeepSeek emphasized adaptation, climate finance and “loss and damage” politics, and WASM.

- ChatGPT stayed focused on governance, pragmatic tooling and developer process.

Product vs infra focus:

- DeepSeek & Mistral skew more toward product/market strategy and geopolitical-commercial consequences.

- ChatGPT & Claude provide more operational detail for engineering teams (ML-Ops, telemetry, testing).

Quantified takeaways (simple stats)

- 100% (4/4): AI integration; governance & regulation; cloud-native/platform engineering; security/supply-chain risk; climate & geopolitical friction; reskilling; languages (Python, TypeScript, Go, Rust, Java/C#).

- 75% (3/4): Edge/WASM interest; platform engineering/IDP as a competitive advantage; DevEx/IDE + AI assistants; low-code trend.

- 50% (2/4): Emphasis on extreme tail-risk tech (quantum/space); these are noteworthy but lower-probability planning items.

- Tone split: 50% of the models center on operational playbooks (ChatGPT + Claude), 50% on strategy/political framing (DeepSeek + Mistral).

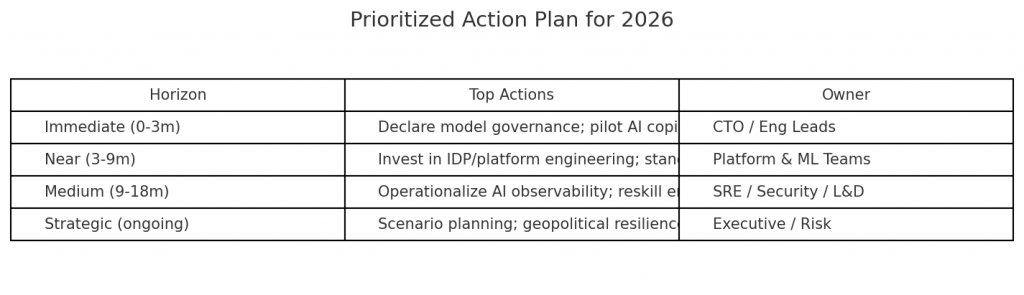

Practical conclusions — what companies must do (prioritized)

Immediate (0–3 months)

1. Declare model governance basics: approved models, data rules, logging, and enforced provenance metadata on any AI-derived artifact.

2. Train a pilot group: roll out AI copilots to a small team with strict review plus SAST (static application security testing) and SCA (software composition analysis) gates.

3. Inventory critical assets: codebases, third-party libraries, model artifacts, infrastructure (which services run at the edge vs in the cloud), and sensitive data flows.
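Enforced provenance metadata can start as simply as a record attached to every AI-assisted artifact. The record captures which model produced it, a hash of the prompt (rather than the raw prompt, which may contain sensitive data), a hash of the output, and who reviewed it. The field names and model ID below are a hypothetical schema, not a standard. Teams might store such records in commit trailers, a database, or alongside SBOMs.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional, Set


def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass
class ProvenanceRecord:
    artifact_path: str
    model_id: str          # must appear on the approved-models list
    prompt_sha256: str     # hash only, so sensitive prompt text is not stored
    output_sha256: str     # lets auditors detect later edits to the artifact
    generated_at: str      # ISO 8601 timestamp in UTC
    reviewed_by: Optional[str] = None  # filled in when a human signs off


def make_record(path: str, model_id: str, prompt: str, output: str,
                approved_models: Set[str]) -> ProvenanceRecord:
    """Create a record, refusing models that governance has not approved."""
    if model_id not in approved_models:
        raise ValueError(f"model {model_id!r} is not on the approved list")
    return ProvenanceRecord(
        artifact_path=path,
        model_id=model_id,
        prompt_sha256=sha256_hex(prompt),
        output_sha256=sha256_hex(output),
        generated_at=datetime.now(timezone.utc).isoformat(),
    )


def output_unchanged(record: ProvenanceRecord, current_content: str) -> bool:
    """True if the artifact still matches exactly what the model produced."""
    return record.output_sha256 == sha256_hex(current_content)


def to_json(record: ProvenanceRecord) -> str:
    """Serialize for logging or attaching to a commit or build artifact."""
    return json.dumps(asdict(record), sort_keys=True)
```

Hashing rather than storing the prompt keeps sensitive data out of the audit trail, while still letting an auditor check whether a given prompt and output match a record.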

Near term (3–9 months)

4. Invest in Platform Engineering / IDP: automate common workflows, containerize standard runtimes, and build golden paths for secure, repeatable deployments.

5. Stand up ML-Ops basics: model registry, versioning, drift detection, CI for models, retraining pipelines.

6. Integrate security at the point of AI use: SAST on generated code, SBOMs for models & dependencies, secret-scanning on prompts.
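Secret-scanning on prompts can be sketched as a pre-send filter that redacts credential-like strings before a prompt leaves your network for an external model. The patterns below are a small illustrative subset: the AWS access key ID format, PEM private-key headers, and a generic `key=value` secret assignment. Dedicated scanners ship far larger, regularly updated rule sets, so treat this as a sketch under those assumptions rather than a complete control.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; real secret scanners use much larger rule sets.
SECRET_PATTERNS = [
    ("aws_access_key_id", re.compile(r"\bAKIA[0-9A-Z]{16}\b")),
    ("private_key_header", re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----")),
    ("generic_assignment", re.compile(
        r"(?i)\b(api[_-]?key|secret|token|password)\s*[:=]\s*['\"]?[^\s'\"]+")),
]


def redact_prompt(prompt: str) -> Tuple[str, List[str]]:
    """Replace secret-like substrings with a placeholder.

    Returns the redacted prompt plus the names of the rules that fired,
    so the event can be logged without logging the secret itself.
    """
    findings: List[str] = []
    redacted = prompt
    for name, pattern in SECRET_PATTERNS:
        redacted, count = pattern.subn(f"[REDACTED:{name}]", redacted)
        if count:
            findings.append(name)
    return redacted, findings
```

A non-empty `findings` list can also feed the security dashboard, since it records that a secret nearly left the network without storing the secret.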

Medium term (9–18 months)

7. Operationalize observability for AI: track inference latency, confidence, distribution drift, human override rates and MTTR/MTTD for model incidents.

8. Reskill broadly: Python & ML, TypeScript & front/edge, Go/Rust for infra, plus ML-Ops, prompt engineering and AISecOps.

9. Keep a human in the loop for high-risk actions: auto-merge only low-risk PRs, and require sign-off for infrastructure changes and customer-facing model changes.
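The "distribution drift" signal in items 5 and 7 can be measured in several ways. One widely used metric is the Population Stability Index (PSI), which compares how a feature or model score is distributed in production against a reference (for example, training) sample: PSI = Σ (aᵢ − eᵢ) · ln(aᵢ / eᵢ), summed over bins, where eᵢ and aᵢ are the reference and current proportions in bin i. The sketch below bins both samples on the reference quantiles using only the standard library. The 0.1 and 0.25 alert levels are a common rule of thumb, not a standard. The bin count and the small floor for empty bins are assumptions.

```python
import bisect
import math
from typing import List, Sequence


def _bin_edges(reference: Sequence[float], bins: int) -> List[float]:
    """Interior cut points (bins - 1 of them) at reference quantiles."""
    ordered = sorted(reference)
    n = len(ordered)
    return [ordered[int(n * i / bins)] for i in range(1, bins)]


def _proportions(values: Sequence[float], edges: List[float], eps: float) -> List[float]:
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    total = len(values)
    # Floor empty bins at eps to avoid log(0) and division by zero.
    return [max(c / total, eps) for c in counts]


def psi(reference: Sequence[float], current: Sequence[float],
        bins: int = 10, eps: float = 1e-4) -> float:
    """Population Stability Index of `current` relative to `reference`."""
    edges = _bin_edges(reference, bins)
    expected = _proportions(reference, edges, eps)
    actual = _proportions(current, edges, eps)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_level(value: float) -> str:
    """Rule-of-thumb interpretation; tune thresholds per feature."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate"
    return "significant"
```

Computed per feature on a schedule, PSI gives a single number that can be charted next to inference latency and override rates and used to trigger the retraining pipeline from item 5.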

Strategic (ongoing)

10. Scenario plans & geopolitical resilience: map supplier/geography risk, energy constraints (for heavy inference), and rehearse incident playbooks for model/data breaches or regulatory audits.

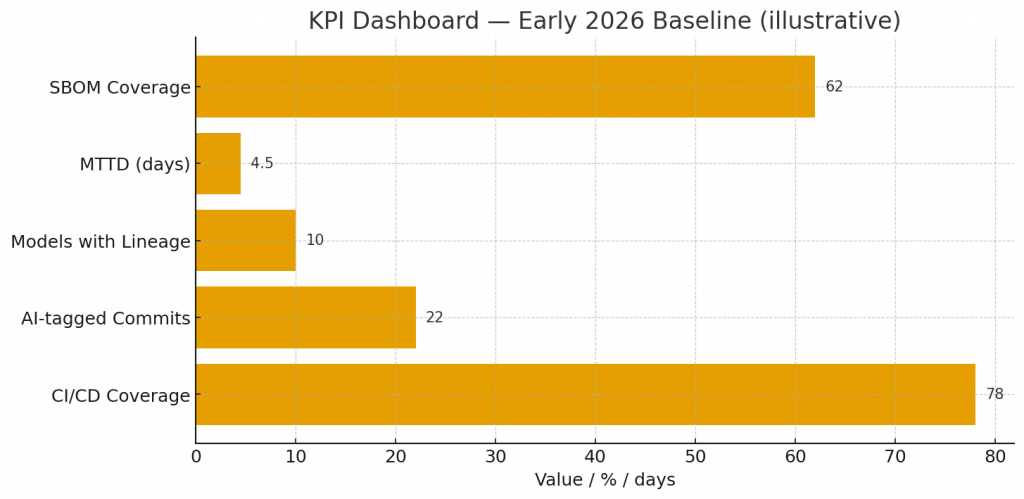

Recommended KPIs to track progress (dashboard-ready)

- % of services with automated CI/CD pipelines (target: 90%+)

- % of code changes containing AI-suggested content that are tagged and audited

- Number of models in the registry / % with lineage metadata

- MTTD / MTTR (mean time to detect / resolve) for model-related incidents (target: 50% reduction within 12 months)

- % of engineering staff certified in ML-Ops/AI governance (target: 30–50% within 12 months)

- SBOM coverage % and number of critical SCA alerts per month (trend toward zero)

- Time-to-rollout for platform “golden path” features (mean lead time)
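Several of these KPIs reduce to simple arithmetic over incident and change records. The sketch below computes MTTD (mean time from incident start to detection), MTTR, and the share of AI-assisted changes that were tagged and audited. Here MTTR is measured from detection to resolution. Some teams measure from incident start instead, so pick one definition and keep it stable. The `Incident` and `Change` record shapes are a hypothetical schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Incident:
    started: datetime
    detected: datetime
    resolved: datetime


@dataclass
class Change:
    ai_assisted: bool         # contains AI-suggested content
    tagged_and_audited: bool  # provenance tag present and review recorded


def _mean(deltas: List[timedelta]) -> timedelta:
    if not deltas:
        raise ValueError("no incidents in the reporting period")
    return sum(deltas, timedelta()) / len(deltas)


def mttd(incidents: List[Incident]) -> timedelta:
    """Mean time to detect: incident start to detection."""
    return _mean([i.detected - i.started for i in incidents])


def mttr(incidents: List[Incident]) -> timedelta:
    """Mean time to resolve, measured here from detection to resolution."""
    return _mean([i.resolved - i.detected for i in incidents])


def ai_audit_coverage(changes: List[Change]) -> float:
    """Percentage of AI-assisted changes that were tagged and audited."""
    ai_changes = [c for c in changes if c.ai_assisted]
    if not ai_changes:
        return 100.0  # nothing to audit in this period
    audited = sum(1 for c in ai_changes if c.tagged_and_audited)
    return 100.0 * audited / len(ai_changes)
```

Computing these from raw records, rather than entering them by hand, keeps the dashboard reproducible. It also makes a target such as "reduce MTTD/MTTR by 50% in 12 months" checkable against the same definitions each quarter.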

Short synthesized prognosis

- Most likely (baseline): 2026 will be a year of operationalization. AI becomes routine across development and product, accompanied by stricter governance requirements and localized shocks (climate/geopolitical) that favor resilient, platform-led companies.

- Stress scenario: A major geopolitical shock, a systemic AI-safety incident, or a critical cloud-provider outage forces emergency decoupling, sharp cost pressures, and accelerated regulation; companies with strong governance and platform engineering will weather it best.

Final single-sentence recommendation

Treat 2026 as the year to make AI production-safe: invest in platform engineering, ML-Ops, rigorous governance, security-by-default, and reskilling. Speed without these controls is a liability, not an advantage.
