The AI Enthusiasm Gap: Bridging Corporate Optimism with Public Skepticism in Enterprise AI Adoption

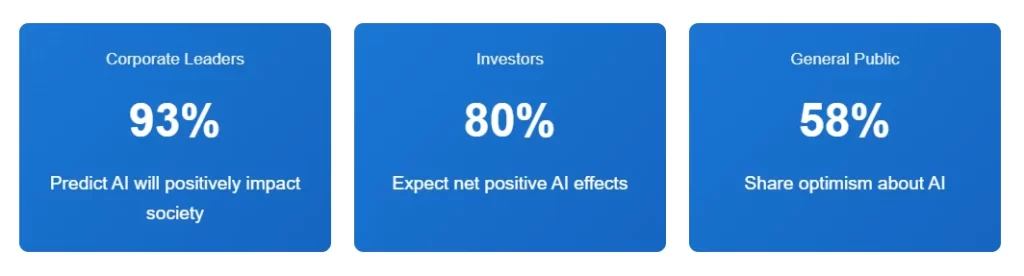

Recent research reveals a significant perception gap between corporate leaders, investors, and the general public regarding artificial intelligence’s impact on society. While 93% of business leaders and 80% of investors predict net positive outcomes, only 58% of the public shares this optimism. This divergence presents both strategic challenges and opportunities for enterprises navigating AI transformation, particularly in areas of sustainability, workforce development, and responsible AI deployment.

Understanding the AI Perception Divide: Key Research Findings

The Just Capital research study represents one of the most comprehensive analyses of AI perception across different stakeholder groups. By surveying corporate leaders, institutional investors, and members of the general public, the research illuminates critical disconnects that enterprise technology leaders must understand and address as they develop AI strategies.

This perception gap is not merely an academic curiosity; it has profound implications for how organizations approach AI adoption, communicate with stakeholders, allocate resources, and manage risks. Companies that successfully bridge this divide will be better positioned to realize AI’s benefits while maintaining trust with employees, customers, and communities.

The Optimism Hierarchy: Analyzing Stakeholder Perspectives

| Stakeholder Group | AI Optimism Level | Primary Concerns | Key Expectations |
|---|---|---|---|
| Corporate Leaders | 93% positive outlook | Disinformation, malicious AI use, competitive pressure | Productivity gains, innovation acceleration, profitability growth |
| Institutional Investors | 80% positive outlook | Environmental sustainability, worker displacement, long-term ROI | Shareholder returns, responsible deployment, competitive advantage |
| General Public | 58% positive outlook | Job losses, environmental impact, loss of human control, privacy | Job security, ethical AI use, environmental responsibility |

The 35-percentage-point gap between corporate leadership enthusiasm (93%) and public confidence (58%) represents a significant trust deficit that organizations must acknowledge and address. This gap is not accidental; it reflects fundamentally different perspectives on AI’s risks and benefits, shaped by different stakes in AI outcomes.
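
The gap arithmetic above can be reproduced in a few lines. This is a minimal sketch using only the three optimism figures cited in this article; the dictionary keys and the `point_gap` helper are illustrative names, not part of the study.

```python
# Share of each group expecting net positive outcomes from AI,
# as cited in this article (percent).
OPTIMISM = {"corporate_leaders": 93, "investors": 80, "general_public": 58}


def point_gap(group_a, group_b, data=OPTIMISM):
    """Return the percentage-point difference between two groups."""
    return data[group_a] - data[group_b]


leader_public_gap = point_gap("corporate_leaders", "general_public")
investor_public_gap = point_gap("investors", "general_public")
leader_investor_gap = point_gap("corporate_leaders", "investors")

print(f"Leaders vs. public:    {leader_public_gap} points")
print(f"Investors vs. public:  {investor_public_gap} points")
print(f"Leaders vs. investors: {leader_investor_gap} points")
```

Note that these are percentage-point differences, not relative percentages: investors sit 13 points below corporate leaders but 22 points above the public.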

The Job Displacement Disconnect: A Critical Perception Gap

Perhaps no single issue better illustrates the perception divide than attitudes toward AI-driven job displacement. The research reveals that approximately 50% of the public fears job losses due to AI adoption, while only 20% of corporate leaders share this concern. This 30-percentage-point differential has significant implications for change management, workforce planning, and organizational communications.

Understanding the Job Impact Debate

| Perspective | Job Impact View | Underlying Assumptions | Risk Assessment |
|---|---|---|---|
| Corporate Leaders (20% concerned) | AI will augment rather than replace human workers | Historical technology transitions created more jobs than they destroyed; AI enables upskilling | Low risk – manageable through training and transition support |
| General Public (50% concerned) | AI threatens existing employment across multiple sectors | Automation historically displaced workers; AI accelerates this trend dramatically | High risk – threatens livelihoods and economic security |
| Investors (~40% concerned) | Job displacement presents reputational and operational risks | Workforce transitions require significant investment; poorly managed displacement damages brands | Medium risk – material impact on corporate performance and ESG ratings |

The Reality of AI’s Workforce Impact

Evidence suggests the truth lies somewhere between extreme optimism and severe pessimism. AI will indeed eliminate certain job categories while creating new ones, with the net effect varying significantly by industry, geography, and organizational approach. What matters most is how organizations manage this transition.

Key Workforce Transformation Insights:

- Task-Level vs. Job-Level Impact: Most AI implementations affect specific tasks rather than entire jobs, meaning roles will transform rather than disappear

- Skill Premium Shift: Demand is increasing for workers who can effectively collaborate with AI systems, requiring significant reskilling investments

- Industry Variation: Impact varies dramatically across sectors, with knowledge work, customer service, and routine cognitive tasks most affected

- Geographic Disparities: Job impact is concentrated in specific regions and demographics, creating localized economic disruption

- Timeline Uncertainty: Rate of adoption and displacement remains uncertain, influenced by technical, economic, and regulatory factors

The Sustainability Imperative: Where Investors and Public Align

One of the study’s most significant findings is that investors demonstrate greater concern about AI’s environmental impact than corporate leaders. This alignment between investor priorities and public concerns creates both pressure and opportunity for enterprises.

The Environmental Cost of AI

| Environmental Factor | AI Impact | Scale of Concern | Mitigation Strategies |
|---|---|---|---|
| Energy Consumption | Training large models requires massive compute power and electricity | Critical – Growing exponentially with model size | Renewable energy, efficient architectures, model optimization |
| Water Usage | Data center cooling consumes significant water resources | High – Particularly acute in water-scarce regions | Advanced cooling technologies, location selection, water recycling |
| Carbon Footprint | AI operations generate substantial greenhouse gas emissions | High – Contradicts corporate climate commitments | Carbon offsets, renewable energy procurement, emission tracking |
| Electronic Waste | Rapid hardware obsolescence creates disposal challenges | Medium – Compounding with AI growth | Circular economy practices, hardware longevity, responsible disposal |
| Resource Extraction | Rare earth minerals for AI hardware have environmental costs | Medium – Supply chain sustainability concern | Ethical sourcing, material efficiency, alternative technologies |

The Corporate Sustainability Gap

The research reveals a troubling disconnect between investor/public expectations and corporate action. Only 17% of corporate leaders currently account for sustainability in their AI deployments, with 42% excluding environmental considerations entirely. This represents a significant strategic vulnerability for several reasons:

Why Sustainability in AI Matters:

- Investor Pressure: ESG-focused investors increasingly demand environmental accountability in AI deployment

- Regulatory Trajectory: Emerging regulations will likely mandate AI environmental impact disclosure and reduction

- Reputational Risk: Public scrutiny of AI’s environmental cost is growing, particularly among younger consumers and employees

- Operational Efficiency: Sustainable AI practices often correlate with cost optimization and operational excellence

- Competitive Differentiation: Early movers in sustainable AI can capture market share and talent advantage

The Training and Workforce Development Consensus

One area of strong alignment across stakeholder groups is the importance of AI training and workforce development: 97% of investors and 90% of the public agree that AI training for workers is now essential. Corporate execution, however, varies significantly.

| Training Focus Area | Current Adoption | Required Investment | Business Impact |
|---|---|---|---|
| AI Literacy Programs | 35% of organizations with comprehensive programs | $500-$2,000 per employee annually | Foundational – Enables basic AI understanding and adoption |
| Technical Skill Development | 55% offering technical AI training to relevant roles | $3,000-$15,000 per technical employee | High – Creates AI development and deployment capabilities |
| AI-Human Collaboration | 25% with structured collaboration training | $1,000-$5,000 per knowledge worker | Critical – Maximizes AI productivity benefits |
| Ethical AI Use | 40% providing ethics and responsible AI training | $500-$2,000 per employee | Essential – Mitigates risks and builds trust |
| Leadership AI Strategy | 60% training executives on AI implications | $5,000-$25,000 per executive | Strategic – Drives informed decision-making |
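
To turn the per-person ranges above into a planning figure, a rough budget envelope can be sketched as follows. The cost ranges come from the table; the headcounts are hypothetical, and treating every range as a single-year cost is an assumption (the table only marks the literacy figure as annual).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingTrack:
    name: str
    low_usd: int    # low end of the per-person cost range (from the table)
    high_usd: int   # high end of the per-person cost range (from the table)
    headcount: int  # hypothetical number of people on this track


def budget_range(tracks):
    """Return (low, high) total cost in USD across all tracks."""
    low = sum(t.low_usd * t.headcount for t in tracks)
    high = sum(t.high_usd * t.headcount for t in tracks)
    return low, high


# Hypothetical 1,000-person organization.
tracks = [
    TrainingTrack("AI literacy", 500, 2_000, 1_000),
    TrainingTrack("Technical skill development", 3_000, 15_000, 100),
    TrainingTrack("AI-human collaboration", 1_000, 5_000, 400),
    TrainingTrack("Ethical AI use", 500, 2_000, 1_000),
    TrainingTrack("Leadership AI strategy", 5_000, 25_000, 20),
]

low, high = budget_range(tracks)
print(f"Estimated training budget: ${low:,} to ${high:,}")
```

The wide spread between the low and high totals is the point of the exercise: the estimate is only as good as the headcount assumptions and the position each program takes within its cost range.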

Building Comprehensive AI Training Programs

Best Practices for AI Workforce Development:

- Role-Based Learning Paths: Customize training based on job functions, from basic literacy for all employees to deep technical skills for data scientists and engineers

- Hands-On Application: Move beyond theoretical knowledge to practical, job-relevant AI tool usage and problem-solving

- Continuous Learning Culture: Establish ongoing education programs, recognizing that AI’s rapid evolution requires sustained skill development

- Internal Certification Programs: Create credential systems that recognize AI competency and open career advancement pathways

- Cross-Functional Collaboration: Foster interaction between technical and business teams to build shared understanding and capabilities

- External Partnership: Leverage relationships with technology vendors, universities, and training providers for specialized expertise

- Measurement and Iteration: Track training effectiveness through competency assessments, productivity metrics, and AI adoption rates

AI Safety: Divergent Priorities and Risk Perceptions

While all stakeholder groups agree that AI safety is a priority, they disagree significantly on which risks deserve the most attention and resources. Understanding these divergent priorities is essential for developing AI governance frameworks that address legitimate concerns across constituencies.

Risk Priority Matrix by Stakeholder Group

| Risk Category | Corporate Leaders | Investors | General Public |
|---|---|---|---|
| Disinformation & Misinformation | Highest Priority | High Priority | High Priority |
| Malicious Use & Cybersecurity | Highest Priority | High Priority | Medium Priority |
| Environmental Impact | Low Priority | Highest Priority | Highest Priority |
| Job Displacement | Low Priority | Medium Priority | Highest Priority |
| Loss of Human Control | Medium Priority | Medium Priority | High Priority |
| Privacy Violations | High Priority | High Priority | Highest Priority |
| Algorithmic Bias & Discrimination | Medium Priority | High Priority | High Priority |
| Competitive Pressure & Market Concentration | High Priority | Highest Priority | Low Priority |

The public’s approach to AI risk is notably broader: it rates most risk categories as high or highest priority, including environmental impact and job displacement. This contrasts with corporate leaders’ more targeted focus on specific business-relevant threats like disinformation and malicious use. This divergence suggests that corporate risk frameworks may underweight concerns that matter deeply to employees, customers, and communities.
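
One way to make this divergence concrete is to score the priority labels and flag risks that the public ranks well above corporate leaders. This is a sketch under stated assumptions: the priority labels are transcribed from the matrix above, while the 1-4 ordinal scale, the two-level threshold, and the function name are illustrative choices rather than anything defined by the research.

```python
# Ordinal scores for the priority labels (assumed scale, for illustration only).
SCORE = {"Low": 1, "Medium": 2, "High": 3, "Highest": 4}

# (corporate leaders, investors, general public), transcribed from the matrix.
RISK_MATRIX = {
    "Disinformation & Misinformation": ("Highest", "High", "High"),
    "Malicious Use & Cybersecurity": ("Highest", "High", "Medium"),
    "Environmental Impact": ("Low", "Highest", "Highest"),
    "Job Displacement": ("Low", "Medium", "Highest"),
    "Loss of Human Control": ("Medium", "Medium", "High"),
    "Privacy Violations": ("High", "High", "Highest"),
    "Algorithmic Bias & Discrimination": ("Medium", "High", "High"),
    "Competitive Pressure & Market Concentration": ("High", "Highest", "Low"),
}


def underweighted_by_leaders(matrix, min_gap=2):
    """Risks the public ranks at least `min_gap` levels above corporate leaders."""
    flagged = []
    for risk, (leaders, _investors, public) in matrix.items():
        gap = SCORE[public] - SCORE[leaders]
        if gap >= min_gap:
            flagged.append((risk, gap))
    # Largest gap first; ties keep the order of the matrix.
    return sorted(flagged, key=lambda item: item[1], reverse=True)


flagged = underweighted_by_leaders(RISK_MATRIX)
for risk, gap in flagged:
    print(f"{risk}: public ranks this {gap} levels higher than corporate leaders")
```

With this scale, the two flagged categories are environmental impact and job displacement, the same areas the article identifies as underinvested.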

The Regulatory Divide: Corporate Hesitation vs. Public Demand

The research identifies a significant gap between public expectations for AI regulation and corporate preferences for self-governance. This regulatory divide will shape the AI policy landscape and require careful navigation by enterprise leaders.

| Regulatory Approach | Corporate Leaders | Investors | General Public |
|---|---|---|---|
| Comprehensive AI Regulation | 25% support | 60% support | 75% support |
| Sector-Specific Rules | 55% support | 65% support | 55% support |
| Risk-Based Frameworks | 70% support | 75% support | 50% support |
| Industry Self-Regulation | 60% support | 35% support | 20% support |
| International Standards | 50% support | 70% support | 60% support |
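
The size of the regulatory divide can be read off the table by ranking each approach by the gap between public and corporate-leader support. The sketch below uses the support figures from the table; the function and variable names are illustrative.

```python
# Percent support (corporate leaders, investors, general public), from the table.
SUPPORT = {
    "Comprehensive AI Regulation": (25, 60, 75),
    "Sector-Specific Rules": (55, 65, 55),
    "Risk-Based Frameworks": (70, 75, 50),
    "Industry Self-Regulation": (60, 35, 20),
    "International Standards": (50, 70, 60),
}


def leader_public_gaps(support):
    """Public support minus corporate-leader support, largest absolute gap first."""
    gaps = {
        approach: public - leaders
        for approach, (leaders, _investors, public) in support.items()
    }
    return sorted(gaps.items(), key=lambda item: abs(item[1]), reverse=True)


ranked = leader_public_gaps(SUPPORT)
for approach, gap in ranked:
    print(f"{approach}: {gap:+d} points (public minus corporate leaders)")
```

A positive value means the public is more supportive than corporate leaders; a negative value means the reverse. Comprehensive regulation and industry self-regulation sit at opposite ends, while sector-specific rules show no gap at all.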

Regulatory Trend Analysis:

The trajectory points toward comprehensive AI regulation, driven by public demand and investor pressure. Corporate leaders who position their organizations proactively, by embracing transparency, ethical frameworks, and robust governance, will be better prepared when mandatory requirements emerge. Those waiting for policy clarity may find themselves scrambling to catch up.

Strategic Implications for Enterprise Leaders

The perception gaps revealed by this research create both challenges and opportunities for organizations implementing AI strategies. Forward-thinking leaders can leverage these insights to develop more sustainable, trusted, and ultimately successful AI initiatives.

The Strategic Opportunity: Competitive Differentiation Through Responsible AI

The research identifies several areas where corporate investment lags behind investor and public expectations. As the report concludes, these gaps “represent key opportunities for impact, leadership, and competitive differentiation.” Organizations that address these underinvestment areas can capture strategic advantages:

| Opportunity Area | Current Corporate Investment | Stakeholder Expectation | Strategic Value |
|---|---|---|---|
| Environmental Sustainability | Low (17% accounting for it) | High (Investors most concerned) | First-mover advantage, ESG rating improvement, regulatory preparation |
| Workforce Training & Support | Moderate (Variable quality) | Very High (97% investor priority) | Talent retention, productivity gains, employee engagement |
| Transparency & Accountability | Low to Moderate | High (Public and investor demand) | Trust building, brand differentiation, risk mitigation |
| Ethical AI Frameworks | Moderate (40% with formal programs) | High (All stakeholders concerned) | Regulatory preparedness, customer confidence, talent attraction |
| Community Impact Assessment | Low (Rarely considered) | Medium to High (Public priority) | Social license to operate, local stakeholder support |

Building a Stakeholder-Aligned AI Strategy

Framework for Bridging the AI Perception Gap:

1. Transparent Communication Strategy

- Regularly disclose AI deployments, use cases, and impact assessments to all stakeholders

- Provide clear explanations of AI decision-making processes in customer-facing applications

- Share environmental impact data and sustainability commitments for AI operations

- Communicate workforce transition plans and support programs proactively

2. Comprehensive Workforce Investment

- Allocate 3-5% of AI budget specifically to training and workforce development

- Create clear career pathways for employees in AI-augmented roles

- Implement job transition assistance for positions significantly affected by AI

- Develop internal AI skills marketplaces connecting opportunities with trained employees

3. Sustainability Integration

- Establish AI sustainability metrics and include them in executive performance evaluations

- Set carbon reduction targets for AI operations aligned with corporate climate commitments

- Invest in energy-efficient AI architectures and renewable energy for compute infrastructure

- Participate in industry sustainability initiatives and best practice sharing

4. Robust Governance Frameworks

- Create AI ethics boards with diverse stakeholder representation

- Implement algorithmic impact assessments for high-stakes AI applications

- Establish clear accountability structures for AI outcomes

- Develop incident response protocols for AI failures or unintended consequences

5. Stakeholder Engagement Programs

- Conduct regular surveys of employee, customer, and community AI perceptions

- Create advisory councils including public representatives for major AI initiatives

- Host town halls and listening sessions addressing AI concerns

- Partner with educational institutions and community organizations on AI literacy

Investor Pressure as a Catalyst for Change

One of the study’s most significant findings is the closer alignment between investor priorities and public concerns compared to corporate leadership priorities. This creates pressure for organizational change from capital providers who increasingly evaluate companies through ESG and responsible AI lenses.

The Investor-Public Alliance

| Shared Priority | Why Investors Care | Why Public Cares | Corporate Response Required |
|---|---|---|---|
| Environmental Sustainability | ESG ratings, regulatory risk, long-term viability concerns | Climate change, resource preservation, local environmental impact | Carbon accounting, renewable energy transition, efficiency investments |
| Workforce Treatment | Human capital management, talent retention, productivity sustainability | Job security, fair treatment, economic opportunity | Comprehensive training, transition support, transparent communication |
| Risk Management | Financial stability, reputational protection, operational resilience | Safety, privacy, prevention of harmful outcomes | Robust governance, ethical frameworks, accountability mechanisms |
| Transparency | Informed investment decisions, materiality assessment, governance evaluation | Understanding AI impact, building trust, enabling informed choices | Regular disclosure, accessible reporting, stakeholder engagement |

This investor-public alignment creates both pressure and opportunity. Companies responding proactively can access capital more easily and at better terms while building public trust. Those ignoring these aligned priorities risk both financial and reputational consequences.

Industry-Specific Considerations

The perception gap and its implications vary significantly across industries based on AI adoption patterns, workforce impacts, and stakeholder sensitivities.

| Industry Sector | Primary AI Applications | Key Stakeholder Concerns | Strategic Priorities |
|---|---|---|---|
| Financial Services | Fraud detection, credit scoring, trading, customer service | Algorithmic bias, explainability, privacy, job displacement | Transparent AI governance, bias testing, regulatory compliance |
| Healthcare | Diagnosis, treatment planning, drug discovery, administrative automation | Patient safety, data privacy, clinician displacement, liability | Clinical validation, privacy protection, augmentation focus |
| Retail & E-commerce | Personalization, inventory, pricing, customer service | Privacy, manipulation concerns, worker impacts, sustainability | Ethical personalization, workforce transition, green computing |
| Manufacturing | Quality control, predictive maintenance, supply chain, robotics | Job displacement, safety, skill requirements, local economic impact | Workforce reskilling, safety systems, community engagement |
| Technology | Product development, operations, customer support, content moderation | Market concentration, environmental impact, societal effects | Responsible innovation, sustainability leadership, ethical standards |

Practical Recommendations for Enterprise Leaders

30-60-90 Day Action Plan for Addressing the AI Perception Gap:

First 30 Days: Assessment and Baseline

- Survey employees, customers, and relevant community stakeholders on AI perceptions and concerns

- Conduct gap analysis between current AI practices and stakeholder expectations

- Review sustainability footprint of existing AI deployments

- Assess workforce training programs against industry benchmarks

- Map AI governance structures and identify gaps in accountability

Days 31-60: Strategy Development

- Develop comprehensive stakeholder engagement strategy addressing identified concerns

- Create AI sustainability roadmap with measurable targets and timelines

- Design expanded workforce training program incorporating role-based learning paths

- Establish or enhance AI ethics governance structures with diverse representation

- Draft transparent AI disclosure framework for internal and external communication

Days 61-90: Initial Implementation

- Launch pilot stakeholder engagement initiatives (town halls, advisory councils)

- Begin sustainability quick wins (renewable energy procurement, efficiency improvements)

- Roll out first phase of enhanced training programs to priority groups

- Implement initial AI governance improvements and policy updates

- Publish first stakeholder communication on responsible AI commitments

Measuring Success: KPIs for Bridging the Perception Gap

| Measurement Category | Key Performance Indicators | Target Benchmarks |
|---|---|---|
| Stakeholder Perception | Employee AI confidence scores, customer trust ratings, community sentiment analysis | Year-over-year improvement of 15-25% toward leader/investor optimism levels |
| Workforce Readiness | Training completion rates, AI competency assessments, tool adoption metrics | 90%+ completion of core training, 70%+ competency achievement |
| Sustainability Progress | AI carbon footprint, energy efficiency gains, renewable energy percentage | 25-40% emissions reduction over 3 years, 100% renewable by 2030 |
| Governance Maturity | Ethics review coverage, incident response time, audit findings | 100% high-risk AI covered, <24hr incident response, declining audit issues |
| Business Outcomes | AI ROI, productivity gains, innovation metrics, talent retention | 20%+ productivity improvement, top-quartile innovation, reduced turnover |
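
The quantitative benchmarks in the table lend themselves to a simple automated check. The following is a minimal sketch: the thresholds are taken from the table, while the metric names and the measured values are hypothetical placeholders for whatever an organization actually tracks.

```python
import operator
from typing import Callable, NamedTuple


class Target(NamedTuple):
    compare: Callable[[float, float], bool]  # how a measurement is judged
    threshold: float                         # benchmark from the table


# Thresholds from the table; metric names are illustrative.
TARGETS = {
    "training_completion_pct": Target(operator.ge, 90.0),
    "competency_achievement_pct": Target(operator.ge, 70.0),
    "high_risk_ai_review_coverage_pct": Target(operator.ge, 100.0),
    "incident_response_hours": Target(operator.lt, 24.0),
    "productivity_improvement_pct": Target(operator.ge, 20.0),
}


def evaluate(measured):
    """Map each measured KPI to True (benchmark met) or False (not met)."""
    return {
        name: target.compare(measured[name], target.threshold)
        for name, target in TARGETS.items()
        if name in measured
    }


# Hypothetical quarterly measurements.
measured = {
    "training_completion_pct": 92.0,
    "competency_achievement_pct": 64.0,
    "high_risk_ai_review_coverage_pct": 100.0,
    "incident_response_hours": 30.0,
    "productivity_improvement_pct": 21.5,
}

results = evaluate(measured)
for name, met in results.items():
    print(f"{name}: {'met' if met else 'NOT met'}")
```

Qualitative benchmarks such as "declining audit issues" or "top-quartile innovation" are left out on purpose, since they need a baseline or peer comparison rather than a fixed threshold.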

Conclusion: Leading the Responsible AI Transformation

The perception gap between corporate leaders, investors, and the general public regarding AI represents more than a communication challenge: it signals fundamental differences in priorities, risk assessment, and values that enterprises must navigate strategically. Organizations that dismiss public skepticism as uninformed resistance miss critical signals about emerging risks, changing stakeholder expectations, and regulatory trajectories.

Conversely, companies that proactively address the concerns driving public hesitation (environmental sustainability, workforce displacement, loss of control, and privacy) can capture significant competitive advantages. By aligning AI strategies more closely with investor and public expectations, these organizations build trust, attract capital and talent, prepare for likely regulation, and create more sustainable AI implementations.

The research makes clear that underinvestment in environmental sustainability, workforce support, and transparent governance represents both a vulnerability and an opportunity. The companies that will thrive in the AI era are not necessarily those with the most aggressive adoption strategies, but those that deploy AI responsibly, transparently, and with genuine attention to stakeholder concerns.

As AI continues transforming industries and societies, the perception gap documented in this research will likely narrow, but the direction of convergence remains uncertain. Will corporate leaders and the public meet in mutual optimism as AI benefits materialize and risks are managed effectively? Or will disappointment and unintended consequences vindicate current skepticism? The answer depends largely on decisions enterprise leaders make today about how to develop, deploy, and govern AI systems.

How Artezio Can Help Navigate the AI Perception Gap

At Artezio, we specialize in helping enterprises develop and implement AI strategies that balance innovation with responsibility, performance with sustainability, and competitive advantage with stakeholder trust. Our comprehensive services include:

- AI Strategy Development: Craft comprehensive AI strategies aligned with stakeholder expectations and business objectives

- Responsible AI Implementation: Deploy AI systems with built-in ethical frameworks, transparency mechanisms, and accountability structures

- Workforce Transformation Programs: Design and deliver comprehensive training initiatives preparing employees for AI-augmented work

- Sustainability Integration: Optimize AI architectures for energy efficiency and environmental sustainability

- Governance Framework Development: Establish robust AI governance structures including ethics boards, impact assessments, and oversight mechanisms

- Stakeholder Engagement Strategy: Develop communication and engagement programs building trust with employees, customers, investors, and communities

- Technology Implementation: Build and deploy AI solutions with enterprise-grade quality, security, and responsible design

- Change Management: Guide organizational transformation ensuring smooth adoption and minimizing workforce disruption

Contact Artezio today to discuss how we can help your organization bridge the AI perception gap while maximizing the business value of artificial intelligence. Our expert team combines deep technical capabilities with strategic insight to deliver AI implementations that satisfy both business objectives and stakeholder expectations.
