- Services

- Artificial Intelligence Development

- Deep Learning & Neural Network Development Services

- Professional Machine Learning Development Services

- Enterprise Computer Vision Development Services

- Enterprise Natural Language Processing Development Services

- Chatbot & Conversational AI Development Services for Business

- Enterprise Computer Vision Solutions For Healthcare

- Transformative Healthcare AI Development

- Retail & E-commerce AI Solutions for Personalization & Growth

- AI Integration & MLOps Development Services

- AI Agent Development & Intelligent Automation

- Generative AI Solutions

- Outsourced Product Development

- Custom Software Development

- Software Customization & Integration

- Mobile App Development

- Custom Application Development

- Software Architecture Consulting

- Enterprise Application Development

- AI-Powered Documentation Services

- Product Requirements Document Services

- Artificial Intelligence Development

- Industries

- Healthcare Software Development

- Telemedicine Software Development

- Medical Software Development

- Electronic Medical Records

- EHR Software Development

- Remote Patient Monitoring Software Development

- Healthcare Mobile App Development Services

- Medical Device Software Development

- Healthcare Mobile App Development Services

- Patient Portal Development Services

- Practice Management Software Development

- Healthcare AI/ML Solutions

- Healthcare CRM Development

- Healthcare Data Analytics Solutions Development

- Hospital Management System Development | Custom HMS & Healthcare ERP

- Mental Health Software Development Services

- Medical Billing & RCM Software Development | Custom Healthcare Billing Solutions

- Laboratory Information Management System (LIMS) Development

- Clinical Trial Management Software Development

- Pharmacy Management Software Development

- Finance & Banking Software Development

- Retail & Ecommerce

- Fintech & Trading Software Development

- Online Dating

- eLearning & LMS

- Cloud Consulting Services

- Healthcare Software Development

- Technology

- Products

- About

- Contact Us

Prompt Engineering for Product Managers: Ship Faster with Better Prompts

People increasingly expect product teams to ship AI features fast. But speed without control leads to brittle experiences, unexpected costs, and user trust problems. Prompt engineering—treating prompts as product artifacts you design, test, and version—lets PMs move faster while keeping quality predictable.

This guide explains a practical, non-technical approach to prompt engineering for product teams. You’ll learn how to build a prompt library MVP, run lightweight prompt tests, manage prompt versions, and govern prompts across workflows. At the end are five ready-to-use prompt templates you can drop into an MVP.

Why PMs should own prompt engineering (short answer)

-

Prompts are product UX. The words you ask the model with shape user output, tone, and safety. That’s product design territory.

-

Prompts determine cost & performance. Small prompt changes can dramatically change token usage and latency. PM tradeoffs matter.

-

Prompts contain business logic. Many product rules live only in prompts (e.g., “always prioritize premium users”). That needs consistent governance.

-

Prompts need reproducibility. If a prompt produces a bad answer in production, you must be able to reproduce the exact prompt + model + context to fix it.

If you’re shipping AI features, make prompt engineering part of your product process—not a one-off craft performed in notebooks.

What is a prompt library MVP?

A prompt library MVP is a minimal, discoverable collection of vetted prompts, organized for reuse across product flows. It contains:

-

Prompt text (exact string or template)

-

Intent / purpose (what the prompt does)

-

Expected output format or schema (so downstream code can parse reliably)

-

Test cases (examples that should pass)

-

Version and author metadata

The goal is to deliver repeatable results for your feature while keeping iteration cheap.

Start with outcomes, not wording

Before writing prompts, clarify the product outcome in one sentence. Example formats:

-

“Reduce first-reply time for support triage by 30%”

-

“Auto-suggest 3 product recommendations that increase add-to-cart rate”

-

“Extract invoice number, total, and due date from uploaded PDFs into JSON”

When you know the measurable outcome, you can write prompts that directly support it and design tests to measure success.

Day-to-day prompt engineering workflow for PMs

You don’t need to be a prompt scientist. Use this practical daily workflow:

-

Define the intent. What should the model accomplish, in measurable terms?

-

Draft a template. Write a clear prompt that includes instructions, expected schema, and constraints.

-

Add examples. Provide 1–3 examples (few-shot) when appropriate.

-

Test quickly. Run the prompt on 10 representative examples; record outputs.

-

Add verification. If output must be structured, enforce a JSON schema or use a verifier step.

-

Save in the library. Add metadata and test results; tag by use case and owner.

-

Version and roll out. Keep a changelog and release via feature flags.

Treat prompts as product features that need small iterations, not magic phrases you hope will generalize.

Building your prompt library MVP: practical steps

Here’s a small, repeatable plan you can set up in a day or two.

1) Choose storage & access

Minimal options:

-

Single Google Doc for earliest MVP. Use sections for each prompt and a table of contents.

-

Airtable / Notion / Confluence for searchable metadata and attachments.

-

Git + JSON/YAML for engineering-aligned teams — prompts become code artifacts.

Recommendation for PMs: start in Notion or Airtable for discoverability; export to Git when you move to production.

2) Define a prompt record template

Each prompt entry should include:

-

ID / slug (e.g.,

support-triage-001) -

Title (human friendly)

-

Intent / Why (one sentence)

-

Prompt template (exact string; use placeholders)

-

Output schema (JSON keys, types)

-

Sample inputs & expected outputs (3 examples)

-

Model & parameters (model name, temperature, max tokens)

-

Owner (product owner or author)

-

Version & changelog

-

Tags (use case, channel, risk level)

-

Test results / notes

Store this as a form so contributors can add prompts fast.

3) Naming & folder conventions

Use a consistent naming convention so prompts are discoverable:

<domain>/<intent>/<short-slug>/v1

e.g. support/triage/extract_intent/v1

Folder structure (Notion/Airtable fields) helps PMs and engineers find what they need.

4) Start with a 10-prompt core

Identify the top 10 prompts that power most flows (e.g., intent classification, extraction, summarization, reply suggestion). Build and test those first.

Prompts as product UI: designing for users

When prompts affect what users see, treat them as UX elements:

-

Be explicit about tone. If the product voice is friendly, include “Respond in a friendly, concise tone.”

-

Give tight constraints. Tell the model exactly what to include and what to omit.

-

Provide examples of bad output. If certain reponses are unacceptable, show examples to the model (“Don’t say X…”).

-

Show provenance. When factual claims matter, show “Source: page X” or “Confidence: high/medium/low”.

Example instruction fragment:

“You are an assistant for Acme Support. Provide a 1–2 sentence summary, cite the source paragraph, and output JSON with keys: intent, confidence (0–1), suggested_reply.”

Design your prompts so the UI can render the output without guesswork.

Prompt templates: use placeholders & minimal templating

Avoid fully unstructured prompts. Use templates with placeholders that your app fills in:

Prompt:

"You are a support triage assistant. A user says: {{user_message}}.

Return JSON:

{

"intent": "<one of [billing, shipping, technical, account]>",

"confidence": 0.0,

"reason": "brief explanation (max 20 words)"

}"

Placeholders reduce variability and make it easy to log inputs/outputs for QA.

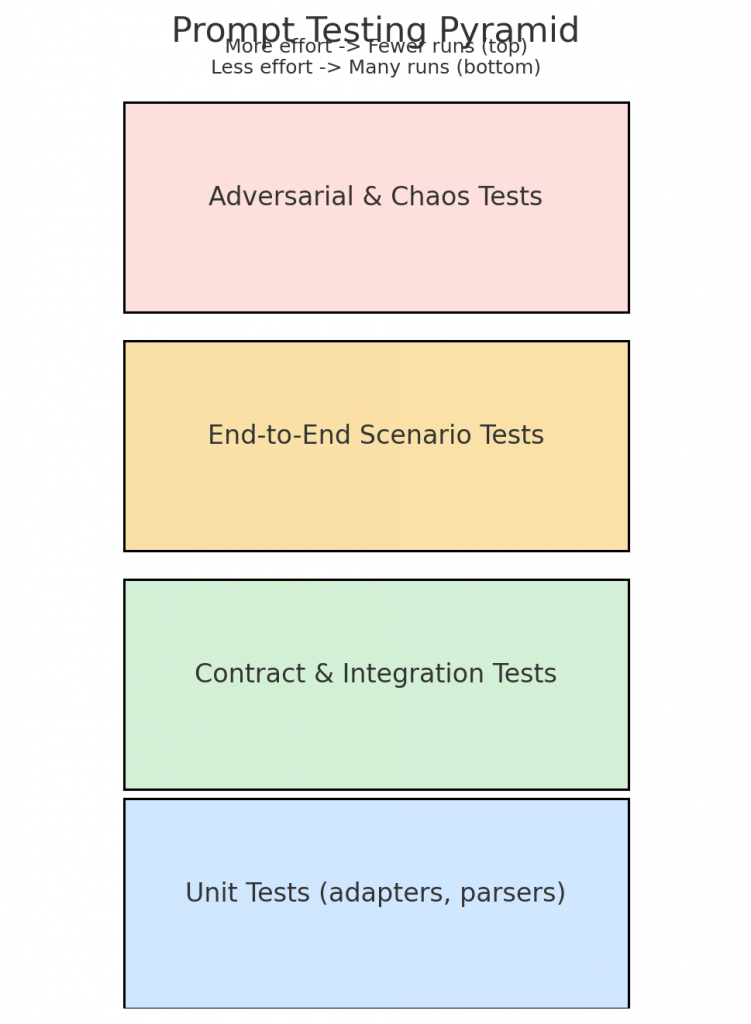

Prompt testing: lightweight, reproducible, and automated

Testing is the heart of stability. Here are practical test types:

1) Unit-like tests (deterministic checks)

-

Run the prompt on curated inputs and assert output schema is valid (JSON parseable, required fields present).

-

Example test: for 50 examples,

intentmust be in set andconfidencenumeric.

Automate these with a small script or CI job.

2) Scenario tests (end-to-end)

-

Simulate a full user flow: input → prompt → app logic → UI rendering.

-

Compare final UI result to expected behavior (tolerate some variation if natural language is involved).

3) Regression tests

-

Save “golden” outputs for core examples and fail builds if outputs deviate beyond acceptable thresholds (use fuzzy matching for text).

4) Adversarial & edge-case tests

-

Feed messy or malicious inputs to find failures (prompt injection, shocking text, truncated inputs).

5) A/B tests with real users

-

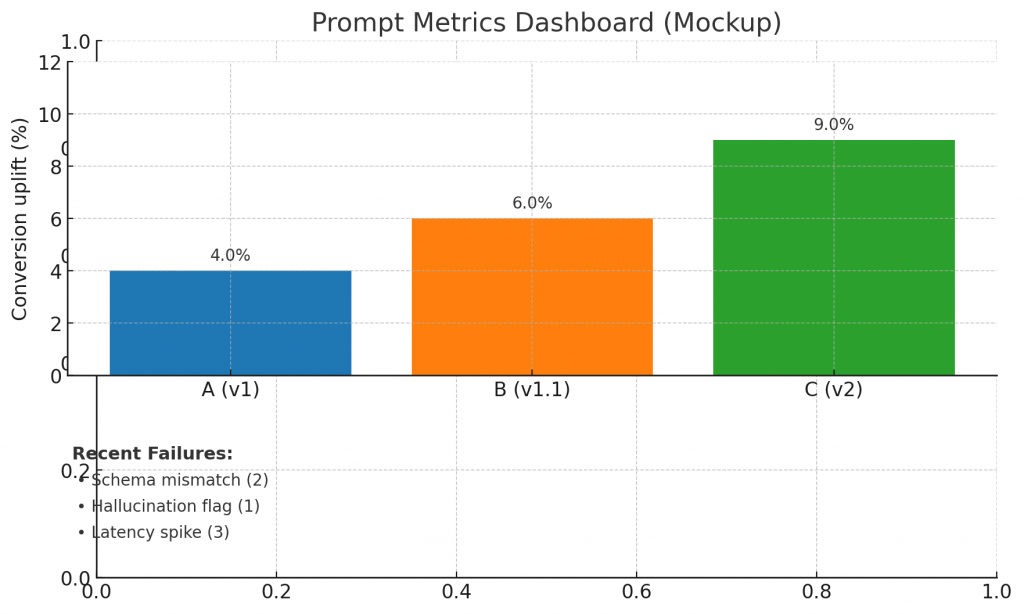

Run multiple prompt variants behind flags and measure product KPIs (conversion, time-to-task, NPS).

Quick tooling: start with a small Node/Python test harness that logs prompt + model + response to CSV and runs assertions. Hook it into CI to execute a daily smoke suite.

Versioning prompts: keep a changelog, not just files

Prompts should evolve. Versioning helps you reproduce incidents and roll back:

-

Semantic versioning for prompts:

v1,v1.1,v2. Minor edits bumppatchorminor; behavior-changing changes bumpmajor. -

Changelog: each version includes

whyandwhat changed. -

Model binding: record the exact model and parameters used (e.g.,

gpt-4o-mini,temperature=0.2). -

Immutable historic runs: save the prompt text used for each production run in logs (never just a reference).

Practical tip for PMs: keep the current prompt in Notion/Airtable and mirror a copy in Git when you release to engineering. Treat releases as product releases.

Guardrails & verification: make prompts safer

Never assume the model output is correct. Add programmatic guards:

-

Schema enforcement: require the model to return strict JSON and validate it. If validation fails, fallback to safe reply or ask a clarification question.

-

Sanity checks: numeric ranges, date formats, ID patterns.

-

Cross-checks: re-run a short verifier prompt that checks the first output’s facts against known sources.

-

Human approval gates: for high-impact actions, require a human with a simple approve/reject UI that includes the prompt and model reasoning.

-

Rate limiting & cost caps: set usage limits per user/session to avoid runaway bills.

Design guarding layers as product features: users can see “Suggested reply — Needs approval” vs “Auto-reply sent”.

Observability: prompt provenance, failures, and cost

Track these fields for each prompt execution:

-

Prompt text (exact)

-

Prompt template ID and version

-

Model + parameters (model name, temperature, max tokens)

-

Contextual retrieval docs used (if RAG)

-

Response text + parsed JSON

-

Latency and token usage (for cost)

-

Validation status (passed/failed schema checks)

-

User action (accepted/edited/ignored)

Store these logs in a searchable store (Elastic, BigQuery, or even CSV initially). Use them to spot degradation, measure drift, and attribute problems to prompt changes or model upgrades.

Organizing prompt libraries for product workflows

Group prompts into folders/tags that match product flows and ownership:

-

By flow: onboarding, support triage, product recommendations.

-

By channel: web widget, email, mobile, voice.

-

By risk: low/medium/high (e.g., “payment authorizations” = high).

-

By owner: product, legal, security teams.

Create a README at the top level that explains how to pick a prompt and how to add tests.

Collaboration: PM + designer + engineer loop

Prompt engineering is cross-functional. Suggested collaboration cadence:

-

PM drafts intent & acceptance criteria.

-

Designer specifies UX affordances and error states.

-

Engineer implements template, parsing, and tests; adds schema validation.

-

QA builds scenario tests & edge cases.

-

Legal/Security reviews for risky language or regulatory exposure.

Run a short weekly review of prompt changes. Keep the review lightweight—prompts are small and best iterated quickly.

Prompt rollout strategy: feature flags & canaries

Treat prompt releases like code:

-

Local dev: iterate with synthetic data.

-

Internal canary: expose to internal staff only.

-

Beta user group: a small percentage of real users via feature flag.

-

Full rollout: if metrics & logs look good.

Always have a rollback plan: revert to previous prompt version or switch to a safe fallback handler.

Metrics that matter for prompt-driven features

Pick a small set of KPIs aligned with the product outcome:

-

Containment rate: % where model handled the task without human escalation.

-

Time saved / task time: measured before vs after agent.

-

Conversion uplift: business metric (signup, purchase) improved by prompt variant.

-

Error rate: schema failures or hallucinations per 1k runs.

-

Edit rate: % of auto-suggested replies edited by users (lower is better).

-

Cost per successful task: tokens + infra cost divided by successful completions.

Instrument these and show them in the weekly product dashboard.

Governance: who can change a prompt?

Define a simple governance model to avoid accidental harmful edits:

-

Editable by: owners (PMs/engineers) for low-risk prompts.

-

Requires review: prompts tagged

high-riskorlegal-sensitiveneed sign-off from security/legal. -

Audit & approvals: changes recorded with reason and linked test results.

-

Emergency rollback: anyone on the on-call list can revert a prompt if it causes harm.

Make the governance explicit in the library readme.

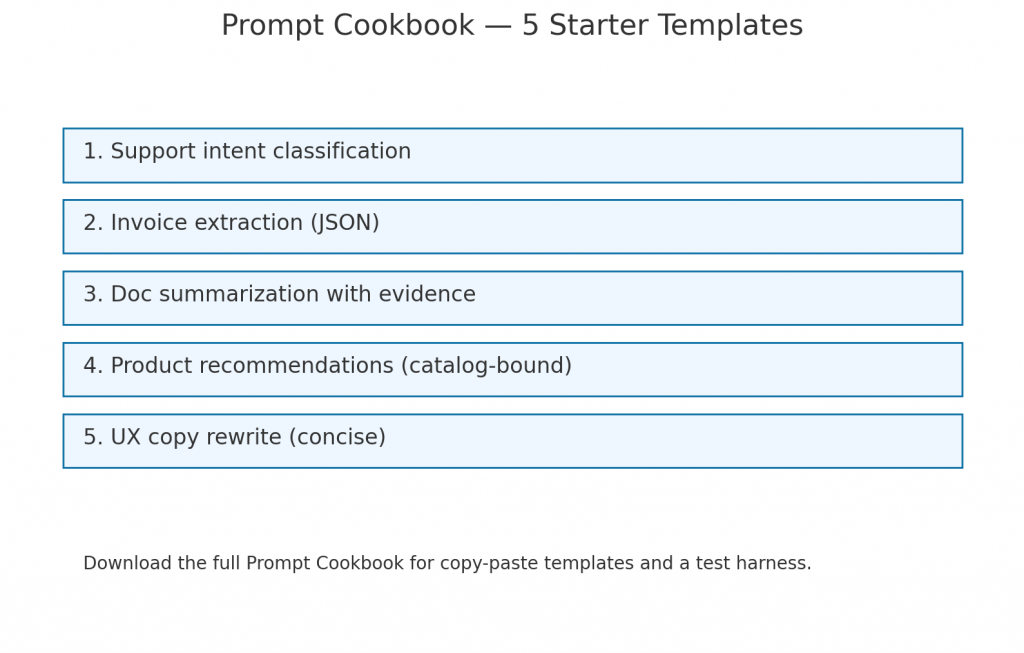

Prompt cookbook — 5 templates (copy, adapt, ship)

Below are five practical templates for common product workflows. Each includes a short description, prompt template, expected JSON schema and sample input/output.

Template 1 — Support intent classification (short reply suggestion)

Use case: classify user message intent and generate a short suggested reply.

Prompt template

You are SupportGPT for [ProductName]. A user message is below. Determine the user's intent (one of: billing, technical, account, other). Provide a short suggested reply (one or two sentences) and a confidence score between 0 and 1. Return valid JSON only.

User message:

"""{{user_message}}"""

Return JSON:

{

"intent": "<billing|technical|account|other>",

"confidence": 0.0,

"suggested_reply": "<one or two sentence reply>"

}

Schema

-

intentstring in allowed set -

confidencenumber 0.0–1.0 -

suggested_replystring

Example input

"My invoice shows a charge I don't recognize."

Example output

{

"intent": "billing",

"confidence": 0.92,

"suggested_reply": "I’m sorry about that charge — I can help. Can you share the invoice or last four digits so I can look it up?"

}

Template 2 — Structured extraction from uploads (invoices)

Use case: extract key fields from an invoice text.

Prompt template

You are an automated extractor. Given the OCR text of an invoice, return JSON with these keys: invoice_number (string), invoice_date (YYYY-MM-DD or null), total_amount (number), currency (ISO code, e.g., USD), vendor_name (string). If a value cannot be found, return null. Return JSON only.

Invoice text:

"""{{ocr_text}}"""

Schema

-

invoice_numberstring or null -

invoice_datestring (ISO) or null -

total_amountnumber or null -

currencystring or null -

vendor_namestring or null

Example input

"Invoice No: INV-2024-055 Date: 2024-03-12 Total: $1,295.50 Vendor: Acme Supplies"

Example output

{

"invoice_number": "INV-2024-055",

"invoice_date": "2024-03-12",

"total_amount": 1295.50,

"currency": "USD",

"vendor_name": "Acme Supplies"

}

Template 3 — Summarization with cited evidence (for docs)

Use case: summarize a document and cite the paragraph used.

Prompt template

You are a summarizer for product documentation. Read the document below and produce:

- a 2-3 sentence plain-language summary,

- a 1-line "key action" recommendation,

- the exact paragraph (or sentence) you used as evidence (copy verbatim) in the "evidence" field.

Return JSON only.

Document:

"""{{document_text}}"""

Schema

-

summarystring -

key_actionstring -

evidencestring

Example output

{

"summary": "This doc explains the new billing model where users are billed monthly per seat.",

"key_action": "Update the pricing page to include examples for teams of 5 and 10.",

"evidence": "Teams are billed monthly at $12 per seat; discounts apply for 10+ seats."

}

Template 4 — Product recommendation (short list)

Use case: recommend 3 relevant products based on user interests.

Prompt template

You are a product recommender. Given the user profile and recent actions, return a JSON array "recommendations" with up to 3 objects. Each object must include: id (string), title (short), reason (one sentence). Do not invent products—only recommend from the provided catalog JSON.

User profile:

{{user_profile_json}}

Catalog:

{{catalog_json}}

Return JSON only.

Schema

-

recommendations: array of{id, title, reason}

Example output

{

"recommendations": [

{"id":"p_201","title":"Pro Plan - 5 seats","reason":"You viewed team collaboration features and need admin controls."},

{"id":"p_305","title":"Analytics Add-on","reason":"You frequently export reports and would benefit from scheduled dashboards."}

]

}

Template 5 — Rewrite for tone and length (UX text)

Use case: rewrite UI copy to be concise and friendly.

Prompt template

Rewrite the following UI copy to be friendly, concise (max 20 words), and suitable for an onboarding tooltip. Keep key instructions. Return JSON only with key "rewritten".

Original:

"""{{ui_copy}}"""

Schema

-

rewrittenstring (max 20 words)

Example input

"Please enter the email address associated with your account in order to receive password reset instructions."

Example output

{"rewritten":"Enter your account email to get a password reset link."}

How to measure prompt quality quickly

For each prompt, track these lightweight metrics:

-

Success rate: % of runs passing schema & verification tests.

-

User acceptance: % of suggested replies accepted as-is.

-

Edit length: average number of characters changed when a user edits suggested text.

-

Time-to-complete: time for a user to accomplish the task with vs without the prompt.

-

Cost per run: tokens * price.

Log these weekly; use a simple dashboard in Sheets or Airtable at MVP stage.

Common pitfalls & how to avoid them

-

Overfitting to examples. Don’t hardcode prompts that only work on your test set. Use diverse inputs.

-

Tight coupling to model internals. If a model upgrade changes behavior, have a plan to roll back or re-tune. Always log model name/version.

-

No schema enforcement. Parsing free text is brittle—require JSON or a strict format for downstream systems.

-

No owner. If no one owns a prompt, it will change unpredictably. Assign a prompt owner.

-

Ignoring cost. Track token usage; set budgets and alerts.

Scaling the prompt library: next steps after MVP

When the idea proves out and you scale, consider:

-

Move prompts into Git: make them part of PRs and code reviews.

-

Add a prompt CI runner: run scenario tests automatically on PRs.

-

Centralize model adapters: so you can switch providers without changing prompts.

-

Automated A/B testing platform: compare prompt variants in production.

-

Governance automation: require test coverage and approvals for changes to high-risk prompts.

Final thoughts

Prompt engineering is a product discipline. When PMs treat prompts like features—designing them, testing them, versioning them, and measuring their impact—teams ship faster and with less surprise. A compact prompt library and a few automated checks will pay back quickly: fewer support escalations, more predictable UX, and faster iteration cycles.

Recent Posts

- The Foundation Crisis: Why Hiring AI Specialists Before Data Engineers is Setting Companies Up for Failure

- ERP vs CRM vs Custom Platform: What Does Your Business Actually Need?

- The AI Enthusiasm Gap: Bridging Corporate Optimism with Public Skepticism in Enterprise AI Adoption

- The Future of AI-Powered Development: How Cursor Plans to Compete Against Tech Giants

- How Much Does Custom Software Development Cost in 2025? Real Numbers & Breakdown