How AI Is Changing the Economics of Custom Software

Executive summary

AI is no longer an experimental add-on — it is a multiplier that changes how we cost, staff, and architect software projects. For CTOs and product managers, the primary questions are practical: How does AI alter total cost of ownership (TCO) over a multi-year horizon? How much does it shrink time-to-market? When should we opt for AI-assisted development versus traditional engineering or buying a COTS product? And when do AI agents make sense as product features or internal automations?

This article provides a systematic framework to answer those questions: a TCO model that captures one-time and recurring costs, a time-to-market and feature velocity lens, ROI scenarios for typical product choices, and an actionable decision matrix to guide build/buy/assist choices. The article also covers where AI agents add value, how to measure ROI, and the common integration pitfalls product teams must plan for.

Throughout, you’ll find practical charts and tables, plus a downloadable TCO template to help you model your own scenarios. The article closes with a short checklist for converting the analysis into a product roadmap and an offer to book a strategy call.

1. Why AI changes economics — the principal mechanisms

To understand the economics, start with the mechanisms by which AI affects cost and speed.

- Automation of repetitive work: LLMs and code-generation tools can produce boilerplate, scaffolding, and tests. This reduces the direct developer hours for routine tasks and lowers the marginal cost of each new feature.

- Acceleration of prototyping: Rapid generation and iteration mean you can validate ideas quicker, reducing the cost of failed bets and shortening time-to-market for viable features.

- New recurring costs: AI introduces new variable costs: model usage fees (token-based), vendor licenses, private model hosting, and specialized monitoring/ops. TCO must include these recurring costs.

- Shift in role mix: The work shifts toward higher-value activities: architecture, prompt engineering, model selection, and verification. These roles command different rates and sometimes require new hires.

- Operational overhead for safety and governance: Audit logs, verification layers, and security reviews add non-trivial costs that didn’t exist in a traditional CRUD app workflow.

- Potential reduction in maintenance burden: If AI-produced code is used for prototypes only, and production code is refactored by senior engineers, maintenance can be lower; conversely, blindly promoting generated code can increase technical debt.

These mechanisms create both upside (faster delivery, lower initial labor) and downside (new recurring costs and governance overhead) — so a careful, multi-year TCO model is required.

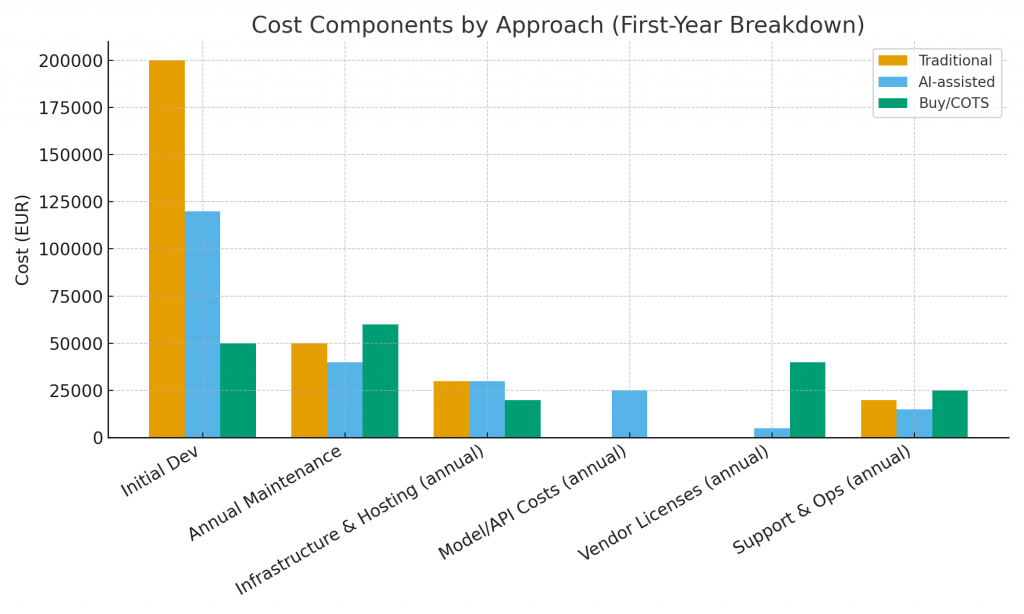

2. A practical TCO model for AI-influenced development

A TCO model should capture both one-time and recurring items, and project them over a planning horizon (commonly 3 years). Break costs into:

One-time (initial)

- Requirement discovery & product design: PM, UX, domain research.

- Initial development (MVP): engineering hours. For Traditional Dev, mostly human; for AI-assisted, a mix of human + model prompts + integration time.

- Integration & onboarding: connecting to systems, compliance checks, connectors.

- Migration & data prep: cleaning, schema changes, embedding pipelines for RAG patterns.

Recurring (annual)

- Maintenance & bug-fixes: code updates, refactors.

- Infrastructure & hosting: servers, storage, databases.

- Model/API usage costs: token costs, fine-tuning, model hosting.

- Vendor licenses & subscriptions: monitoring tools, vector DB, search services.

- Support & operations: SREs, observability, incident response.

- Security & compliance activities: audits, penetration tests, legal reviews.

Hidden / indirect costs

- Training and ramp-up: internal training on prompt engineering and model governance.

- Quality and verification: manual reviewers, HITL workflows, false positives/negatives mitigation.

- Opportunity costs: delays in other projects while integrating AI tooling.

- Technical debt risk premium: expected future cost to clean up generated code if not properly reviewed.

The downloadable TCO template includes these categories and sample defaults; adjust the rates to your region and salary bands.

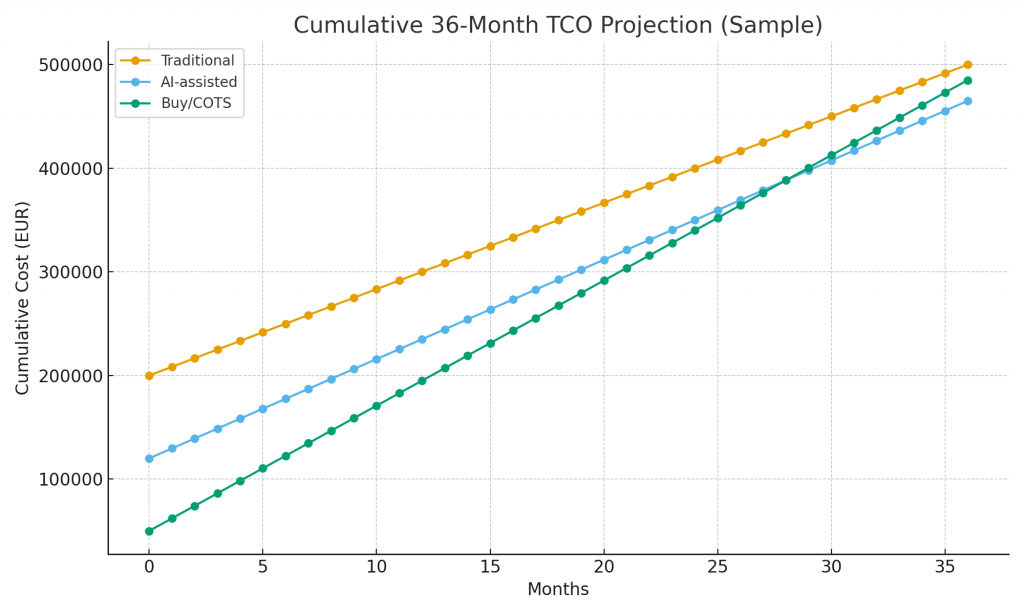

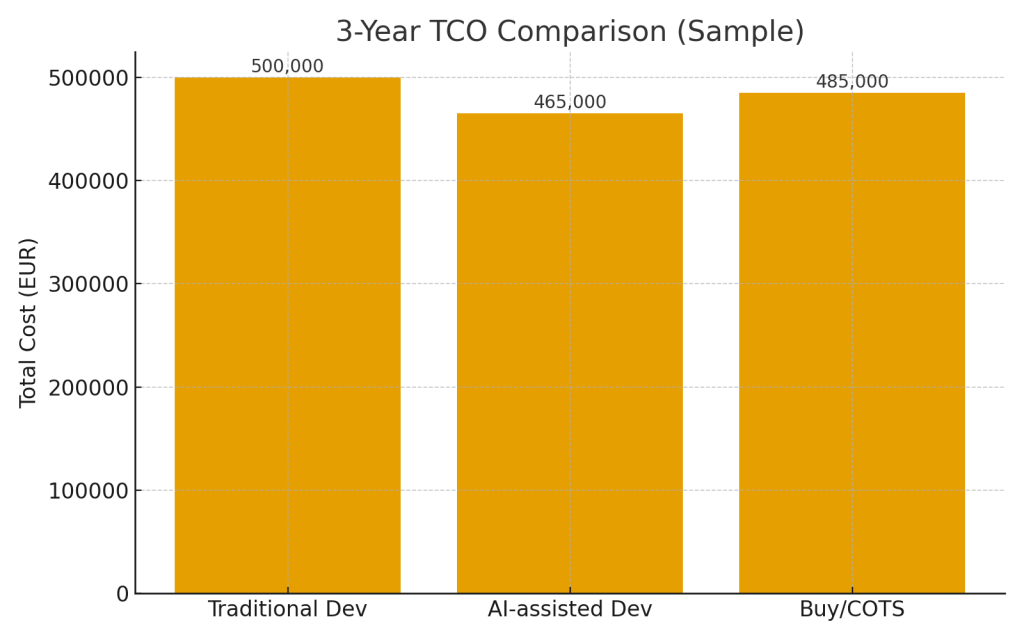

(Refer to the 3-Year TCO chart above for sample totals across approaches.)
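The category breakdown above can be expressed as a small calculation. The following is a minimal sketch, not the downloadable template itself: the `TcoModel` class, its field names, and every EUR figure are placeholders invented for illustration, and it assumes flat annual costs with no inflation or discounting.

```python
from dataclasses import dataclass, field


@dataclass
class TcoModel:
    """Multi-year TCO: one-time costs plus recurring and hidden annual costs."""
    one_time: dict[str, float] = field(default_factory=dict)   # paid once, up front
    recurring: dict[str, float] = field(default_factory=dict)  # paid every year
    hidden: dict[str, float] = field(default_factory=dict)     # annual estimate of indirect costs

    def annual_cost(self) -> float:
        return sum(self.recurring.values()) + sum(self.hidden.values())

    def total(self, years: int = 3) -> float:
        return sum(self.one_time.values()) + years * self.annual_cost()


# Placeholder figures in EUR, invented for illustration only.
ai_assisted = TcoModel(
    one_time={"discovery_design": 20_000, "mvp_development": 60_000,
              "integration": 15_000, "data_prep": 10_000},
    recurring={"maintenance": 15_000, "infrastructure": 8_000,
               "model_api_usage": 12_000, "licenses": 5_000,
               "support_ops": 10_000, "security_compliance": 6_000},
    hidden={"training": 3_000, "verification_hitl": 7_000,
            "tech_debt_premium": 4_000},
)

print(f"Annual run cost: EUR {ai_assisted.annual_cost():,.0f}")
print(f"3-year TCO:      EUR {ai_assisted.total(3):,.0f}")
```

Keeping each category as a named entry makes it easy to see which line item drives the total when you swap in your own numbers.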

3. Time-to-market: how AI compresses cycles (with caveats)

Time-to-market (TTM) is often the single most important commercial lever. AI primarily compresses TTM via:

- Rapid scaffolding: Generating the initial codebase for common features like CRUD, auth scaffolding, or form validations.

- Faster prototyping: Product teams can iterate on behavior and UX using AI-generated shells, enabling earlier user testing.

- Automated test generation: LLMs can propose unit and integration tests, accelerating verification cycles.

However, compressing TTM is not free — it often shifts effort to earlier governance and later hardening phases. That tradeoff depends on the intended longevity of the feature:

- Prototype -> throwaway: If the prototype is used purely for validation, AI can yield huge TTM gains with low downstream cost.

- Prototype -> production: If the prototype will be promoted to production, factor in the cost to refactor, secure, and test the generated code to enterprise standards. This may reduce the TTM advantage.

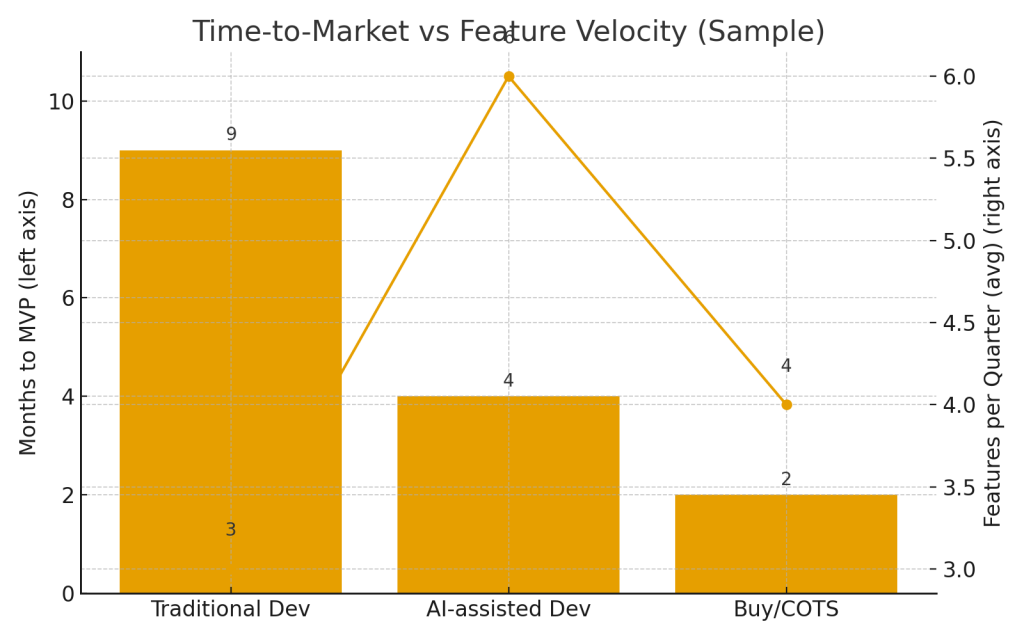

Use the “Months to MVP” chart above to compare typical ranges: Traditional Dev (6–12 months), AI-assisted Dev (3–6 months), Buy/COTS (1–3 months, depending on fit and customization).

4. Comparing approaches: Build (Traditional), Build+AI (AI-assisted), or Buy (COTS)

We recommend evaluating these options along three axes: Total Cost (3-year TCO), Time-to-Market, and Strategic Differentiation.

Traditional Build

- When it fits: Highly differentiated core IP, strict regulatory requirements, need for long-term performance optimizations, or cases where model unpredictability is unacceptable.

- Pros: Full control, predictable licensing, easier to ensure compliance.

- Cons: Higher initial cost and longer TTM.

- TCO characteristics: High initial dev cost, stable recurring infra/maintenance costs, limited vendor dependency.

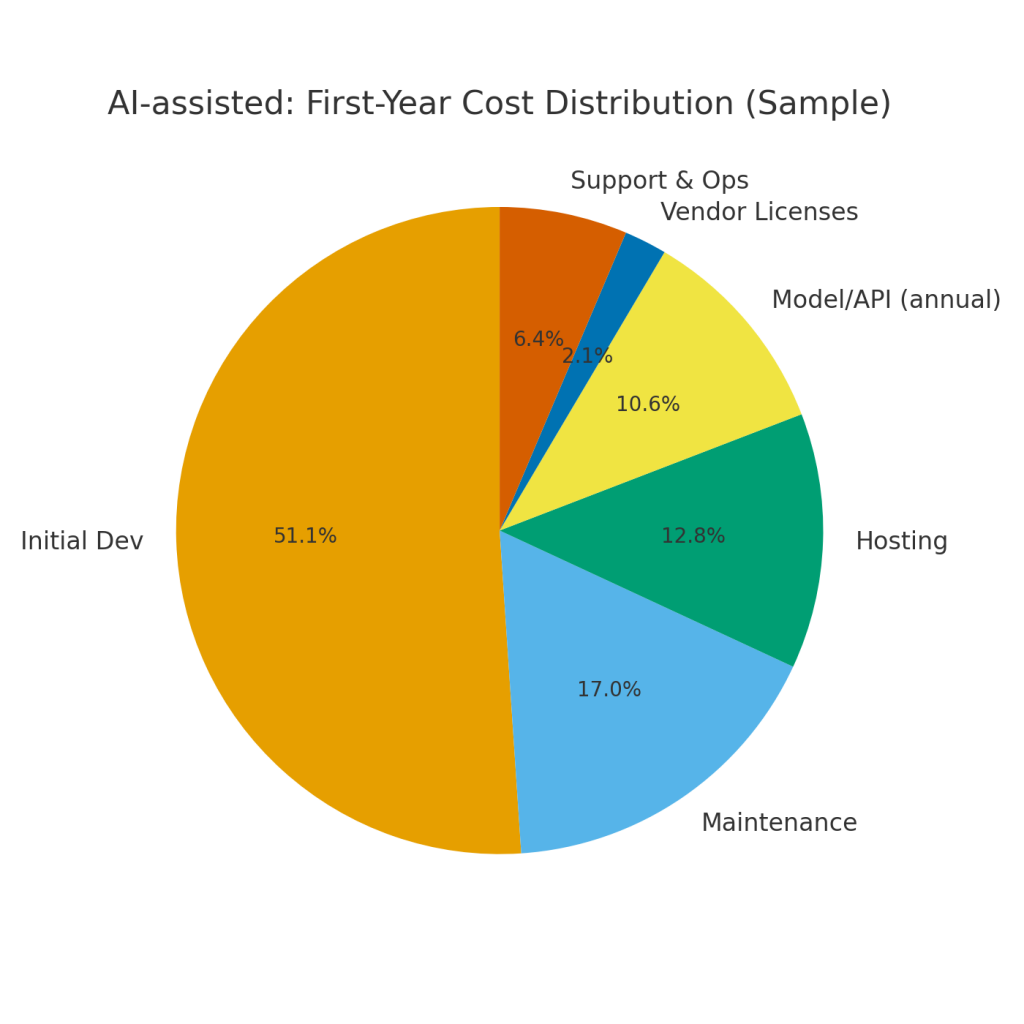

AI-assisted Build

- When it fits: Faster prototyping, products where generative features add value, and internal automation with moderate sensitivity.

- Pros: Lower initial hours, higher feature throughput, faster iteration.

- Cons: Recurring model costs, governance overhead, additional verification requirements.

- TCO characteristics: Lower initial dev cost, higher annual recurring costs (model usage, monitoring), moderate maintenance.

Buy / COTS

- When it fits: Commodity features, well-supported vendor solutions that match your needs, constrained engineering bandwidth.

- Pros: Quick TTM, lower upfront cost, vendor-hosted maintenance.

- Cons: Limited customization, ongoing license cost, potential vendor lock-in.

- TCO characteristics: Lower initial cost, predictable recurring licensing fees, lower engineering maintenance.

Refer to the downloadable decision matrix to map scenarios to recommended approaches.

5. Sample ROI scenarios: illustrative numbers and interpretation

Below are three illustrative scenarios (numbers are examples; use the downloadable TCO template for your inputs).

Scenario A — Highly regulated customer portal (finance)

- Traditional Dev: Initial €250k, annual €60k -> 3-year TCO ~ €430k.

- AI-assisted Dev: Initial €160k (faster dev), annual €120k (monitoring, model costs) -> 3-year TCO ~ €520k.

- Buy/COTS: Initial €50k (integration), annual license €150k -> 3-year TCO ~ €500k.

Interpretation: For regulated, high-trust systems, Traditional may be cheaper and safer over 3 years due to lower ongoing governance costs. AI-assisted accelerates time-to-market but can increase recurring costs because of monitoring and high-assurance verification needs.

Scenario B — Internal automation (invoice parsing)

- Traditional Dev: Initial €120k, annual €40k -> 3-year TCO €240k.

- AI-assisted Dev: Initial €60k, annual model costs €30k -> 3-year TCO €150k.

Interpretation: For internal automation with low external risk, AI-assisted is cost-effective: lower initial and total costs with faster deployment and immediate productivity gains.

Scenario C — Standard CRM integration

- Traditional Dev: Initial €80k, annual €20k -> 3-year TCO €140k.

- Buy/COTS: Initial €40k, annual license €70k -> 3-year TCO €250k.

Interpretation: Buy/COTS may be attractive for very quick TTM if the vendor fits, but long-term TCO may be higher due to licensing. If integration and customization needs are low, buy is favorable for speed.

Use-case nuances matter: if AI features are core differentiators, leaning into AI-assisted with careful governance can be the path to defendable differentiation.
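The scenario totals follow from initial cost plus three years of annual cost, and the same inputs also give the horizon at which a higher-upfront option overtakes a cheaper-to-start one. The sketch below reproduces that arithmetic with the scenario figures (in thousands of EUR); the function names are hypothetical, and it assumes constant annual costs.

```python
from typing import Optional


def three_year_tco(initial: float, annual: float, years: int = 3) -> float:
    """Initial cost plus a constant annual cost over the horizon."""
    return initial + annual * years


def breakeven_years(initial_a: float, annual_a: float,
                    initial_b: float, annual_b: float) -> Optional[float]:
    """Years after which option A becomes cheaper than option B.

    Returns None when A's annual cost is not lower than B's (A never catches up),
    and 0.0 when A is already cheaper from the start.
    """
    if annual_a >= annual_b:
        return None
    return max(0.0, (initial_a - initial_b) / (annual_b - annual_a))


# Scenario figures from the article, in thousands of EUR: (initial, annual).
scenario_a = {"traditional": (250, 60), "ai_assisted": (160, 120), "cots": (50, 150)}
scenario_b = {"traditional": (120, 40), "ai_assisted": (60, 30)}
scenario_c = {"traditional": (80, 20), "cots": (40, 70)}

for name, (initial, annual) in scenario_a.items():
    print(f"Scenario A {name}: {three_year_tco(initial, annual):.0f}k")

# When does Traditional overtake AI-assisted in Scenario A?
print(breakeven_years(*scenario_a["traditional"], *scenario_a["ai_assisted"]))  # 1.5
# In Scenario B, Traditional costs more per year, so it never catches up.
print(breakeven_years(*scenario_b["traditional"], *scenario_b["ai_assisted"]))  # None
```

A breakeven well inside the planning horizon (1.5 years in Scenario A) suggests the higher upfront spend is justified; a breakeven beyond it argues for the cheaper-to-start option.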

6. Cost levers and sensitivity analysis

Key variables that swing TCO:

- Model usage intensity: Token-based APIs can become expensive for high-volume features. Cache outputs, reduce token usage, and use smaller models for lower-risk tasks.

- Engineering rates and efficiency: If you operate in a high-cost labor market, the relative savings from AI-assisted scaffolding are larger.

- Verification overhead: High-assurance controls (HITL) can multiply recurring costs. Measure human review time per request.

- Vendor licensing terms: Some vendors offer enterprise packages that cap per-request costs; negotiate predictable pricing.

- Maintenance & technical debt: Generated code that is not refactored can increase future costs; policy must include refactor sprints.

A sensitivity table in the TCO template lets you vary model costs and human review hours to see breakeven points.
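A sensitivity grid of this kind can be sketched in a few lines. The example below is an illustration rather than the template's actual logic: it starts from the Scenario B figures (AI-assisted initial €60k, Traditional 3-year TCO €240k), while the review rate, the model cost steps, and the review hour steps are assumed values.

```python
TRADITIONAL_TCO = 240_000  # Scenario B, Traditional Dev, 3-year TCO (EUR)
INITIAL_AI = 60_000        # Scenario B, AI-assisted initial cost (EUR)
REVIEW_RATE = 50           # assumed EUR per hour of human review

MODEL_COSTS = [15_000, 30_000, 60_000]  # assumed annual model spend (EUR)
REVIEW_HOURS = [0, 250, 500]            # assumed annual human review hours


def ai_assisted_tco(model_cost: float, review_hours: float, years: int = 3) -> float:
    """3-year TCO when annual cost = model spend + human review labor."""
    annual = model_cost + review_hours * REVIEW_RATE
    return INITIAL_AI + years * annual


def sensitivity_table() -> dict[tuple[int, int], float]:
    """TCO for every combination of model cost and review hours."""
    return {(m, h): ai_assisted_tco(m, h) for m in MODEL_COSTS for h in REVIEW_HOURS}


table = sensitivity_table()
print("model cost/yr | " + " | ".join(f"{h:>4} review h" for h in REVIEW_HOURS))
for m in MODEL_COSTS:
    cells = []
    for h in REVIEW_HOURS:
        tco = table[(m, h)]
        flag = "*" if tco > TRADITIONAL_TCO else " "  # * = costlier than Traditional
        cells.append(f"{tco:>11,.0f}{flag}")
    print(f"{m:>13,} | " + " | ".join(cells))
```

Under these assumptions the AI-assisted option stays ahead until annual model spend reaches €60k, where it ties with Traditional at zero review hours and falls behind once any human review is added.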

7. When to choose AI-assisted vs Traditional: a decision framework

Use the following checklist — if more than two answers point to AI-assisted, it’s likely the right approach; if more than two point to Traditional, prefer Traditional.

AI-assisted suitability signals

- Need to prototype quickly (<6 months to MVP).

- Feature is not critical for regulatory compliance.

- The domain can tolerate occasional model uncertainty or includes verification layers.

- Engineering bandwidth is constrained and speed is a priority.

- The feature is not core IP or is easily refactorable.

Traditional suitability signals

- High legal/regulatory risk (finance, healthcare).

- Deterministic business logic with low tolerance for hallucination.

- Long-term ownership of code as competitive advantage.

- Low expected model usage or high-scale throughput where model costs would exceed labor.

Hybrid paths also exist: prototype with AI, then refactor to traditional engineering for production-critical paths.
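The checklist rule ("more than two signals") can be written down as a small scoring function. This is a sketch under stated assumptions: the signal identifiers are hypothetical labels for the bullets above, and returning "hybrid" when both sides exceed two signals is an interpretation, not a rule the checklist states.

```python
AI_SIGNALS = {
    "prototype_under_6_months",
    "not_compliance_critical",
    "tolerates_model_uncertainty",
    "bandwidth_constrained",
    "not_core_ip",
}
TRADITIONAL_SIGNALS = {
    "high_regulatory_risk",
    "deterministic_logic",
    "code_is_competitive_advantage",
    "model_cost_exceeds_labor",
}


def recommend(answers: set[str]) -> str:
    """Apply the 'more than two signals' rule to a set of affirmed signals."""
    ai_score = len(answers & AI_SIGNALS)
    traditional_score = len(answers & TRADITIONAL_SIGNALS)
    if ai_score > 2 and traditional_score > 2:
        return "hybrid"  # assumed tie-break: prototype with AI, harden traditionally
    if ai_score > 2:
        return "ai-assisted"
    if traditional_score > 2:
        return "traditional"
    return "inconclusive"


print(recommend({"prototype_under_6_months", "bandwidth_constrained", "not_core_ip"}))
print(recommend({"high_regulatory_risk", "deterministic_logic"}))
```

The first call returns "ai-assisted" and the second "inconclusive", since two signals do not clear the threshold.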

8. Integration pitfalls and mitigation

AI brings practical integration pitfalls that affect economics and time-to-market:

Pitfall 1: Underestimating recurring model costs

Mitigation: Model cost forecasting, token budgeting, caching, and local model options for heavy workloads.
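A rough forecast makes the effect of token budgeting and caching visible early. The sketch below assumes separate per-1k-token prices for input and output and treats cache hits as free; the prices, volumes, and budget are hypothetical and do not reflect any vendor's actual rates.

```python
def monthly_model_cost(requests_per_month: int,
                       input_tokens: int,
                       output_tokens: int,
                       price_in_per_1k: float,
                       price_out_per_1k: float,
                       cache_hit_rate: float = 0.0) -> float:
    """Forecast monthly spend; cached responses are assumed to cost nothing."""
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    cost_per_request = (input_tokens / 1000 * price_in_per_1k
                        + output_tokens / 1000 * price_out_per_1k)
    billable_requests = requests_per_month * (1 - cache_hit_rate)
    return billable_requests * cost_per_request


MONTHLY_BUDGET = 1_000.0  # hypothetical budget in EUR

# Hypothetical workload: 200k requests, 1,500 input and 500 output tokens each.
uncached = monthly_model_cost(200_000, 1_500, 500, 0.002, 0.006)
cached = monthly_model_cost(200_000, 1_500, 500, 0.002, 0.006, cache_hit_rate=0.4)

for label, cost in [("no cache", uncached), ("40% cache hits", cached)]:
    status = "OVER BUDGET" if cost > MONTHLY_BUDGET else "within budget"
    print(f"{label}: EUR {cost:,.2f} ({status})")
```

With these inputs the uncached workload lands around €1,200 per month and the cached one around €720, which is the kind of gap that decides whether a feature fits its budget.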

Pitfall 2: Not building verification & governance early

Mitigation: Add provenance logging and lightweight verification in the first iteration; build automation to enforce checks in CI.

Pitfall 3: Promoting prototype code to production without refactor

Mitigation: Reserve time/budget for production hardening and establish “refactor sprints” as part of roadmaps.

Pitfall 4: Vendor lock-in and sudden pricing changes

Mitigation: Implement vendor abstraction layers, multi-provider fallbacks, and cost alerts.
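One way to picture a vendor abstraction layer with multi-provider fallback is a thin interface that application code depends on, with concrete providers behind it. The sketch below is illustrative only: `CompletionProvider`, `FallbackClient`, and the two stub providers are hypothetical names, and no real vendor SDK is called.

```python
from typing import Protocol


class ProviderError(Exception):
    """Raised by a provider when a request cannot be served."""


class CompletionProvider(Protocol):
    name: str

    def complete(self, prompt: str) -> str: ...


class FallbackClient:
    """Tries providers in order and returns the first successful answer."""

    def __init__(self, providers: list[CompletionProvider]) -> None:
        if not providers:
            raise ValueError("at least one provider is required")
        self._providers = providers

    def complete(self, prompt: str) -> tuple[str, str]:
        errors = []
        for provider in self._providers:
            try:
                return provider.name, provider.complete(prompt)
            except ProviderError as exc:
                errors.append(f"{provider.name}: {exc}")
        raise ProviderError("all providers failed: " + "; ".join(errors))


# Stub providers standing in for real vendor integrations.
class FlakyProvider:
    name = "primary"

    def complete(self, prompt: str) -> str:
        raise ProviderError("rate limited")


class EchoProvider:
    name = "secondary"

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


client = FallbackClient([FlakyProvider(), EchoProvider()])
print(client.complete("hello"))  # ('secondary', 'echo: hello')
```

Because product code only sees `FallbackClient.complete`, swapping or reordering vendors after a pricing change becomes a configuration decision rather than a rewrite.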

Pitfall 5: Not training teams on prompt engineering & operational practices

Mitigation: Run training, pair experienced prompt engineers with PMs, include prompt & model test cases in QA.

These pitfalls increase hidden costs and can erode apparent TCO benefits if left unaddressed.

9. Where AI agents fit the product roadmap — use cases and ROI

AI agents are autonomous or semi-autonomous software components that perform tasks on behalf of users — from scheduling assistants to support triage bots and automated incident responders. They are most valuable where tasks are:

- Repetitive but contextual, requiring data access across systems.

- Lengthy or multi-step, where orchestration reduces human time on task.

- High-frequency with predictable actions, yielding clear labor savings.

High-impact agent examples

- Customer support triage agent: reads support requests, gathers account context, drafts suggested replies, and routes complex cases to humans. ROI: reduces first-response time and human hours.

- Sales assistant agent: prepares personalized outreach based on CRM data and call notes; automates routine follow-ups. ROI: increased conversion per SDR and reduced time per lead.

- Ops incident summarizer: monitors logs and produces incident summaries with remediation steps for on-call engineers. ROI: reduces mean time to resolution (MTTR).

- Procurement agent: drafts RFP responses, aggregates vendor quotes, and flags compliance issues. ROI: speeds procurement cycles and reduces manual coordination.

When agents are worth it

- The agent saves X human hours per week such that annual labor savings > cost of agent development + model operations.

- The agent reliably accesses canonical data sources (APIs, knowledge bases) and can be instrumented for verification.

- The organization has governance and monitoring to catch incorrect or risky agent decisions.

ROI calculation (simple)

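Estimate: net annual benefit = annual human cost saved (hours_saved_per_week * hourly_rate * 52) minus annual agent cost (model + infra + licensing + maintenance). If the result is positive and strategic value exists, proceed.

Hands-on example: an agent that saves 10 hours/week at €50/hr yields €26k/year in savings. If agent OPEX is €10k/year and development is amortized at €20k/year, the net is -€4k in every year that carries the amortization charge. The agent turns positive (+€16k/year) only once development is fully amortized, so whether it pays off within 3 years depends on the amortization period. Use the TCO template to model this.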
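The same arithmetic as a minimal sketch, using the figures from the example. The function name is hypothetical, and the two-year amortization period used for the cumulative view is an assumption added for illustration.

```python
def agent_net_annual_benefit(hours_saved_per_week: float,
                             hourly_rate: float,
                             annual_opex: float,
                             amortized_dev: float = 0.0) -> float:
    """Annual labor savings minus agent operating and amortized development cost."""
    annual_savings = hours_saved_per_week * hourly_rate * 52
    return annual_savings - annual_opex - amortized_dev


# Figures from the example: 10 h/week at EUR 50/h, EUR 10k OPEX, EUR 20k amortization.
with_amortization = agent_net_annual_benefit(10, 50, 10_000, 20_000)
after_amortization = agent_net_annual_benefit(10, 50, 10_000)
print(with_amortization)   # -4000
print(after_amortization)  # 16000

# Assumed: development is amortized over the first two years only.
AMORTIZATION_YEARS = 2
cumulative = sum(
    with_amortization if year < AMORTIZATION_YEARS else after_amortization
    for year in range(3)
)
print(cumulative)  # 8000
```

Under the two-year assumption the agent ends year 3 at +€8k; with a three-year amortization the same agent would still be at -€12k, which is why the amortization period belongs in the model.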

10. Roadmap recommendations for product teams and CTOs

To capture benefits while controlling risk, adopt a staged approach:

Stage 0: Foundation (0–3 months)

- Create a cross-functional AI steering group (product, platform, security).

- Build cost visibility: start tracking model usage, token costs, and feature-level spends.

- Pilot 1 or 2 small internal automations to gain experience.

Stage 1: Platform & governance (3–9 months)

- Build vendor abstraction and cost alerts.

- Standardize provenance logging and CI gates for AI-origin code.

- Create template agent scaffolds and orchestration primitives.

Stage 2: Scale (9–24 months)

- Roll out composable AI services for product teams (retrieval, inference, policy).

- Formalize refactor sprints: set criteria to convert AI prototype into production-grade code.

- Expand agent library for high ROI use cases.

Stage 3: Optimization & differentiation (24+ months)

- Evaluate on-prem or private model hosting for high-volume or sensitive workloads to reduce per-request costs.

- Invest in personalization & federated learning where differentiation exists.

- Continuously model TCO and adjust vendor contracts.

11. Measuring success: metrics to track

To manage economics and product outcomes, track:

- Time-to-MVP (per feature): target reduction % compared to baseline.

- Feature throughput: features delivered per quarter per team.

- 3-year TCO per product line.

- Model spend per feature and token cost per user action.

- Human hours saved (for agent use cases).

- Production incidents attributable to AI-origin code (risk metric).

- Refactor ratio: proportion of AI-generated modules that were production-refactored within 6 months.

Use these KPIs in quarterly business reviews to decide whether to expand AI usage, reallocate budgets, or decommission features.
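Two of these metrics reduce to simple ratios. The sketch below shows one possible way to compute the refactor ratio and the token cost per user action; the `Module` record, its fields, and the sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Module:
    name: str
    ai_generated: bool
    refactored_within_6_months: bool


def refactor_ratio(modules: list[Module]) -> float:
    """Share of AI-generated modules that were production-refactored within 6 months."""
    ai_modules = [m for m in modules if m.ai_generated]
    if not ai_modules:
        return 0.0
    refactored = sum(1 for m in ai_modules if m.refactored_within_6_months)
    return refactored / len(ai_modules)


def token_cost_per_action(model_spend: float, user_actions: int) -> float:
    """Model spend divided by the number of user actions it served."""
    if user_actions == 0:
        return 0.0
    return model_spend / user_actions


# Invented sample data for illustration.
modules = [
    Module("invoice_parser", ai_generated=True, refactored_within_6_months=True),
    Module("report_export", ai_generated=True, refactored_within_6_months=False),
    Module("search", ai_generated=True, refactored_within_6_months=True),
    Module("auth", ai_generated=False, refactored_within_6_months=False),
]

print(f"Refactor ratio: {refactor_ratio(modules):.0%}")
print(f"Token cost per action: EUR {token_cost_per_action(1_200.0, 48_000):.3f}")
```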

12. Practical checklists and takeaways

Quick decision checklist: Choose AI-assisted if:

- You value speed over perfect determinism.

- The feature can tolerate a verification step or human-in-the-loop review.

- You can budget recurring model costs.

- You have a plan to refactor prototypes before long-term ownership.

Quick checklist: Avoid AI-assisted (prefer Traditional) when:

- The feature is core IP and must be deterministic.

- Regulatory compliance forbids probabilistic behavior.

- Model costs exceed likely labor savings at scale.

Implementation checklist before shipping AI-driven feature:

- TCO model completed for 3-year horizon (downloaded Excel template).

- Verification & governance requirements defined and implemented.

- Cost alerts and vendor abstraction in place.

- Team trained on prompt engineering and operational practices.

- Production monitoring and rollback mechanism configured.

Appendix A: Interpreting the charts and template (how to use them)

3-Year TCO chart: compares sample totals across Traditional, AI-assisted, and Buy/COTS. Use the template to replace default numbers with your salary bands, hosting costs, and model price quotes to get accurate forecasts.

Time-to-market vs Feature Velocity chart: shows typical Months to MVP (bar) and average feature throughput per quarter (line). Use it to reason about the tradeoff between speed and ongoing throughput.

Decision matrix & ROI scenarios table: these synthesize the logic and example numbers. Use them as a conversation starter in executive briefings.

Appendix B: Common vendor pricing models & negotiation tips

Vendor pricing can dramatically alter TCO. Patterns:

- Per-token pricing: common for LLMs. Negotiate volume discounts and committed-use discounts.

- Flat seat/license: common for enterprise platforms. Predictable but may not scale well per feature.

- Hybrid: base subscription + per-volume usage. Good to cap costs with a negotiated tier.

- On-prem licensing: upfront cost for private hosting; saves per-request costs but adds ops.
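To compare the first three patterns at different volumes, a short sketch like the following can help. All prices, included volumes, and the cap are hypothetical; real contracts will differ in structure and terms.

```python
from typing import Optional


def per_token_cost(tokens: int, price_per_1k: float) -> float:
    """Pure usage-based pricing."""
    return tokens / 1000 * price_per_1k


def flat_license_cost(seats: int, price_per_seat: float) -> float:
    """Seat-based pricing, independent of usage volume."""
    return seats * price_per_seat


def hybrid_cost(tokens: int, base_fee: float, included_tokens: int,
                overage_per_1k: float, cap: Optional[float] = None) -> float:
    """Base subscription plus overage, optionally capped by a negotiated tier."""
    overage_tokens = max(0, tokens - included_tokens)
    total = base_fee + overage_tokens / 1000 * overage_per_1k
    return min(total, cap) if cap is not None else total


# Hypothetical monthly volumes and prices (EUR).
for tokens in (10_000_000, 100_000_000, 500_000_000):
    usage = per_token_cost(tokens, price_per_1k=0.004)
    flat = flat_license_cost(seats=20, price_per_seat=60.0)
    hybrid = hybrid_cost(tokens, base_fee=300.0, included_tokens=50_000_000,
                         overage_per_1k=0.003, cap=1_200.0)
    print(f"{tokens:>11,} tokens: per-token {usage:>8,.2f} | "
          f"flat {flat:>8,.2f} | hybrid {hybrid:>8,.2f}")
```

With these placeholder numbers, per-token pricing is cheapest at low volume, while the capped hybrid tier limits exposure once usage grows.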

Negotiation tips:

- Ask for cost transparency: unit costs, peak pricing, and overage rates.

- Negotiate trial credits and performance SLAs.

- Secure release rights and portability clauses if you plan to migrate models later.

- Agree on data use: ensure prompts and customer data are not used for model retraining unless contracted.

Conclusion — an operational view for executives

AI reshapes the economics of custom software by rebalancing upfront labor with recurring model and governance costs. The decision to use AI-assisted development is not binary; it is a context-dependent optimization balancing TTM, TCO, and strategic differentiation. Use the TCO template to model your assumptions, place hardened verification and governance early in projects, and evolve product roadmaps that leverage agents where they deliver clear ROI. The organizations that win will be those that combine AI speed with operational discipline.

Companion: When to Use AI Agents in Your Product Roadmap (brief playbook)

AI agents are highest-yield when they automate multi-step, context-rich tasks and where the outputs are verifiable or supervised. Prioritize agent development for:

- Repetitive knowledge work (triage, summarization).

- High-frequency tasks with measurable labor cost.

- Orchestration across systems where automation reduces human coordination overhead.

Before committing, model ROI: annual labor hours saved vs annual agent OPEX + amortized development.

Offer: If you want help mapping your product roadmap to agent-based use cases and producing an ROI-backed plan, book a 30-minute product strategy call with us.
