Best LLMs for Coding in 2025

AI assistants for software development moved from novelty to everyday tooling in 2023–2025. Today’s coding-focused LLMs no longer just spit out snippets: they integrate with IDEs, reason about multi-file repositories, generate tests, suggest fixes, and in some settings even submit pull requests. The core value is unchanged (making developers faster by automating repetitive work and surfacing ideas), but the best models now win by being context-aware, safe, and easy to operate at scale.

What coding LLMs actually do (in brief)

Modern coding LLMs combine several capabilities:

- Autocomplete & generation: scaffold functions, classes, or full files from prompts or inline comments.

- Context-aware suggestions: use repo files, docstrings, and test suites to produce tailored output.

- Debugging & reasoning: explain failing tests, propose fixes, and generate unit tests.

- Agentic workflows: chain calls, run tests, call external tools, and adjust outputs iteratively for larger tasks.
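The agentic workflow in the last bullet boils down to a generate, test, and retry loop. Below is a minimal Python sketch of that loop, assuming a hypothetical `generate_patch` callable standing in for a model call and a hypothetical `run_tests` callable that returns a list of failure messages; neither corresponds to any vendor's actual API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentResult:
    code: str
    passed: bool
    attempts: int
    history: list[str] = field(default_factory=list)


def agent_loop(
    task: str,
    generate_patch: Callable[[str, list[str]], str],  # hypothetical model call
    run_tests: Callable[[str], list[str]],            # returns failure messages
    max_attempts: int = 3,
) -> AgentResult:
    """Generate code, run tests, and feed failures back until tests pass or attempts run out."""
    feedback: list[str] = []
    history: list[str] = []
    code = ""
    for attempt in range(1, max_attempts + 1):
        # Ask the model for a candidate, passing prior test failures as extra context.
        code = generate_patch(task, feedback)
        failures = run_tests(code)
        history.append(f"attempt {attempt}: {len(failures)} failing test(s)")
        if not failures:
            return AgentResult(code, True, attempt, history)
        # The failures become the feedback for the next generation step.
        feedback = failures
    return AgentResult(code, False, max_attempts, history)


def fake_model(task: str, feedback: list[str]) -> str:
    # Stand-in for an LLM: buggy on the first try, fixed once it sees test feedback.
    if not feedback:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


def fake_tests(code: str) -> list[str]:
    namespace: dict = {}
    exec(code, namespace)  # only acceptable because the code comes from our own stub
    return [] if namespace["add"](2, 3) == 5 else ["test_add: expected 5"]


result = agent_loop("implement add", fake_model, fake_tests)
print(result.passed, result.attempts)  # True 2
```

In a real pipeline the test step would run in a sandbox rather than via `exec`, since model output is untrusted code.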

These capabilities are delivered either as cloud-hosted APIs (typical for enterprise-grade offerings) or as local/open-source models you can self-host when you need privacy, low latency, or offline operation. (See the comparison below for specifics.) The landscape is moving rapidly: vendors keep shipping specialized “code” variants, and open-source projects are catching up with large, fine-tuned models that can run locally.
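One way to combine the two delivery options is to route requests by sensitivity: anything that looks like it contains secrets stays on the self-hosted model, and everything else may go to the cloud API, with the local model as a fallback. The sketch below assumes two hypothetical backend callables and uses a deliberately crude regex check; a real deployment would rely on a dedicated secret scanner.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Crude illustrative patterns; real secret scanners use far larger rule sets.
SECRET_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),                        # AWS access key ID format
    re.compile(r"-----BEGIN (RSA |EC )?PRIVATE KEY-----"),  # PEM private key header
    re.compile(r"(?i)(api[_-]?key|password)\s*[:=]\s*\S+"),  # key/password assignments
]


def contains_secret(text: str) -> bool:
    return any(p.search(text) for p in SECRET_PATTERNS)


@dataclass
class Router:
    cloud: Callable[[str], str]  # hypothetical hosted-API client
    local: Callable[[str], str]  # hypothetical self-hosted model client

    def complete(self, prompt: str) -> tuple[str, str]:
        """Return (backend_name, completion); sensitive prompts never leave the network."""
        if contains_secret(prompt):
            return "local", self.local(prompt)
        try:
            return "cloud", self.cloud(prompt)
        except ConnectionError:
            # Fall back to the local model if the cloud API is unreachable.
            return "local", self.local(prompt)


router = Router(cloud=lambda p: "cloud answer", local=lambda p: "local answer")
print(router.complete("Refactor this loop"))  # ('cloud', 'cloud answer')
print(router.complete('API_KEY = "abc123"'))  # ('local', 'local answer')
```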

Quick Tour of Strong Contenders

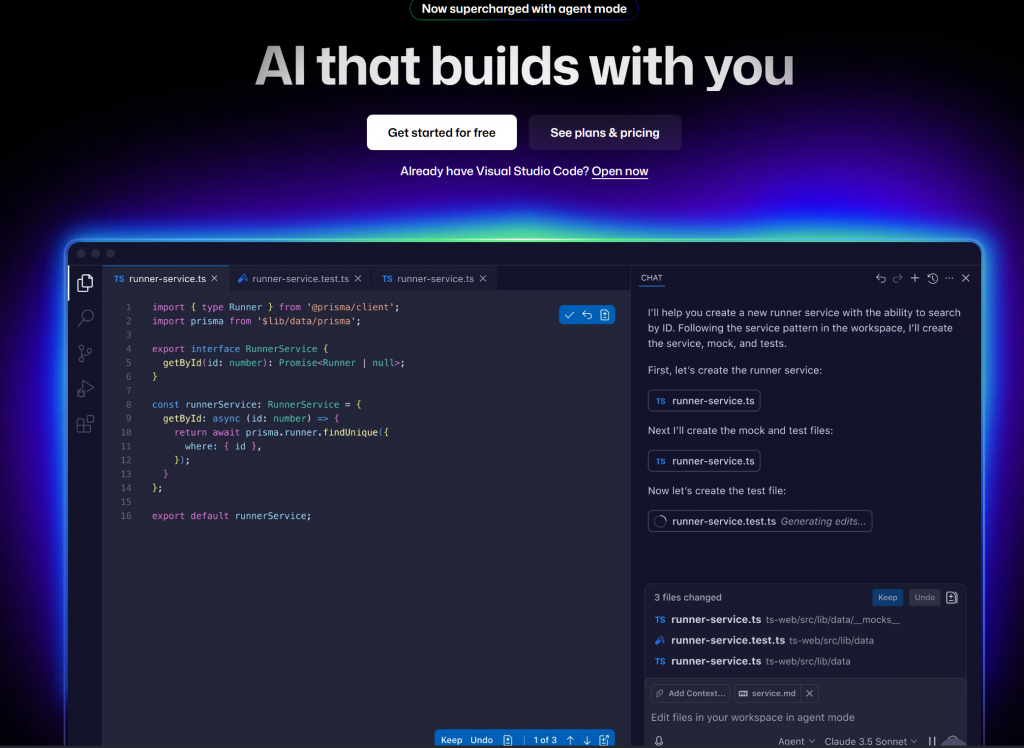

GitHub Copilot — best fit for teams and enterprises

Copilot combines deep IDE integration, repository awareness, and enterprise controls. For many organizations it is the default choice: it plugs directly into VS Code, JetBrains IDEs, and other editors, and it can be configured to exclude repositories from model training and to operate under enterprise policies. Its scale also matters. Copilot has recorded tens of millions of signups and very broad enterprise adoption, which makes it hard for teams to ignore.

Where it shines: inline suggestions, repo-aware completions, enterprise tooling.

Limitations: subscription required, cloud-hosted (not for fully air-gapped environments).

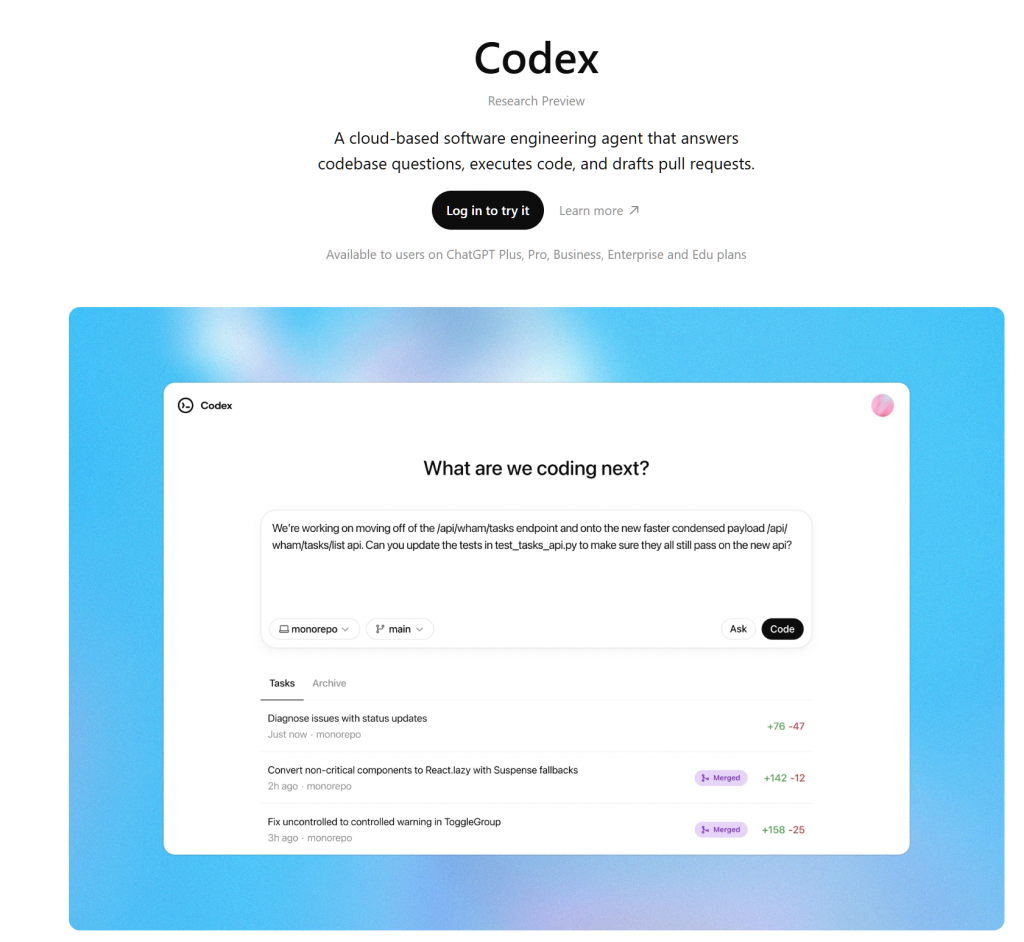

OpenAI (GPT family via Codex / Copilot integrations) — strongest for complex, multi-file tasks

OpenAI’s code-optimized variants (and the Codex lineage) remain among the most capable models for generating multi-file systems, refactoring, and debugging complex stacks, especially when the toolchain (Codex, Copilot) is set up to evaluate outputs and run tests. OpenAI also ships new coding-workflow features frequently, both in its own products and through ecosystem integrations.

Where it shines: complex code generation, debugging, agentic code workflows.

Limitations: cost per token can add up; cloud dependency for most offerings.

Qwen (Alibaba) — practical open-source alternative, locally hostable

Qwen’s code-specialized variants offer an attractive trade-off: high-quality code generation with open weights and permissive licensing terms, which make local deployment viable. That suits teams that want to run models behind their firewalls or adapt the model to private code. Qwen-based models compete closely with closed models on many benchmarks and are evolving quickly.

Where it shines: local hosting, multilingual code coverage, cost control.

Limitations: fewer first-party IDE plugins (growing ecosystem).

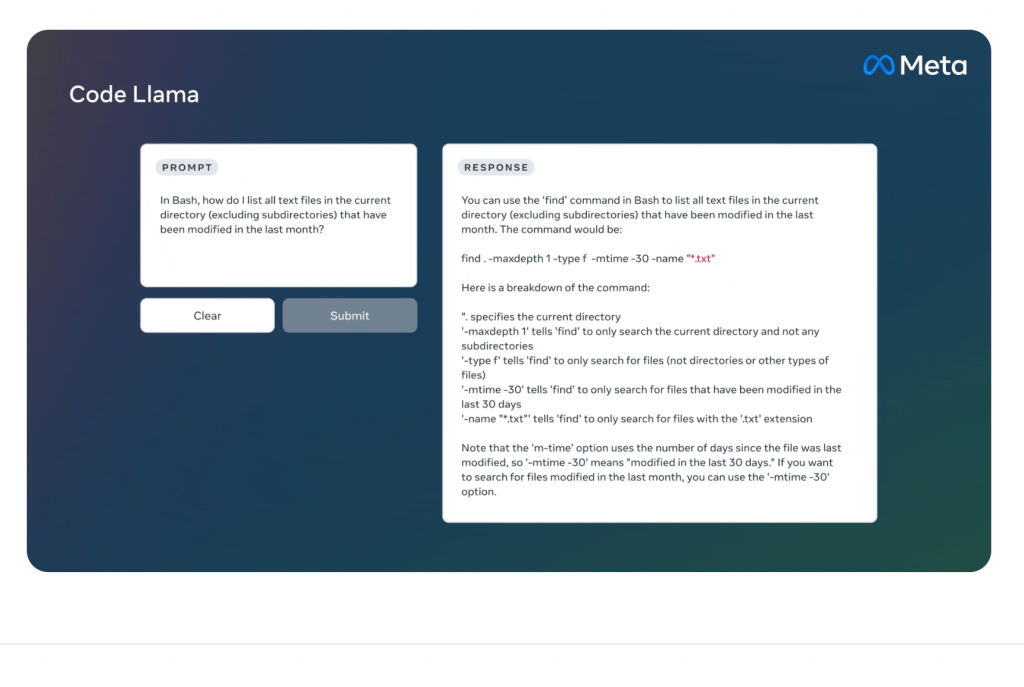

Code Llama (Meta) — open-source with permissive terms for code

Meta’s Code Llama is a mature open-source code model family that’s been optimized for code tasks and published in multiple sizes. It’s a good option for research teams and companies that need to tailor behavior or embed models into custom pipelines.

Where it shines: research, self-hosting, flexibility.

Limitations: resource requirements scale with model size, so the larger variants need substantially more GPU memory.
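To make the size trade-off concrete: a model's weights alone need roughly parameter count × bytes per parameter of memory. The sketch below applies that rule of thumb to the published Code Llama sizes at 16-bit and 4-bit precision. It ignores the KV cache, activations, and runtime overhead, so treat the numbers as lower bounds rather than sizing guidance.

```python
# Approximate bytes per parameter for common weight formats.
# Real 4-bit formats add a little overhead for quantization scales.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, dtype: str = "fp16") -> float:
    """Memory for the weights alone, in decimal GB: billions of params x bytes/param."""
    return params_billion * BYTES_PER_PARAM[dtype]


# Code Llama has been published in 7B, 13B, 34B, and 70B sizes.
for size in (7, 13, 34, 70):
    print(f"{size}B: ~{weight_memory_gb(size, 'fp16'):.0f} GB at fp16, "
          f"~{weight_memory_gb(size, 'int4'):.1f} GB at 4-bit")
```

By this estimate the 7B model fits on a single consumer GPU once quantized, while the 70B model needs multiple GPUs or aggressive quantization.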

Anthropic Claude (Sonnet / Claude family) — safety and reasoning-focused

Anthropic’s models emphasize safer outputs and robust reasoning, which is useful for high-assurance code review and for workflows where guardrails matter. They are typically offered as cloud-hosted managed services, with strong safety features and controllability.

Where it shines: safety-sensitive reviews, controlled behavior.

Limitations: cloud-only for most customers; cost considerations.

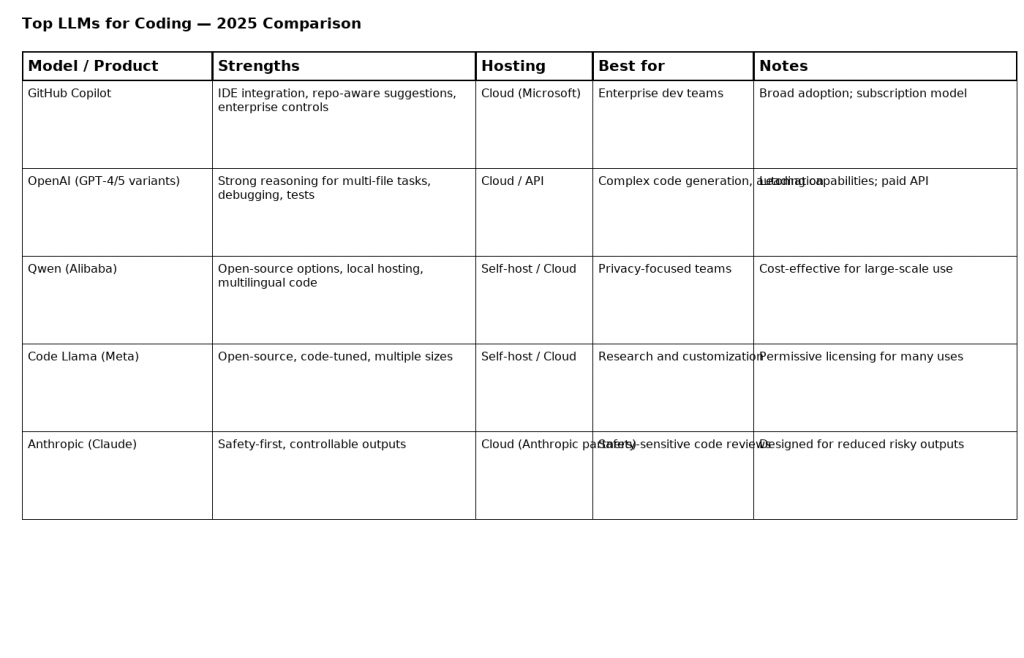

Compact comparison (summary)

(A downloadable comparison PNG offers a visually compact version of this summary.)

- Integration: Copilot > OpenAI Codex variants > third-party plugins for Qwen/Code Llama.

- Self-hosting: Qwen & Code Llama >> OpenAI/Anthropic/Copilot (cloud-first).

- Enterprise-readiness: Copilot & OpenAI lead (enterprise controls, billing, SLAs).

- Safety & guardrails: Anthropic emphasizes safety; GitHub provides data-handling controls for business customers.

- Cost: open-source local models (Qwen/Code Llama) reduce API spend but increase infrastructure costs; managed services charge per use.
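The cost trade-off in the last bullet can be framed as a break-even calculation: a self-hosted model has a roughly fixed monthly infrastructure cost, while a managed API bill grows with token volume. The sketch below uses made-up placeholder prices, not any vendor's actual rates; substitute your own quotes and usage figures.

```python
def monthly_api_cost(tokens_per_month: float, usd_per_million_tokens: float) -> float:
    """Per-use pricing: cost grows linearly with token volume."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


def break_even_tokens(monthly_infra_usd: float, usd_per_million_tokens: float) -> float:
    """Token volume at which a fixed self-hosting bill equals the API bill."""
    return monthly_infra_usd / usd_per_million_tokens * 1_000_000


# Placeholder assumptions only, not real prices.
API_PRICE = 5.0       # USD per million tokens (hypothetical blended rate)
INFRA_COST = 2_000.0  # USD per month for GPUs, ops time, etc. (hypothetical)

threshold = break_even_tokens(INFRA_COST, API_PRICE)
print(f"Break-even at ~{threshold / 1e6:.0f}M tokens/month")  # Break-even at ~400M tokens/month

for volume in (100e6, 400e6, 1_000e6):
    api = monthly_api_cost(volume, API_PRICE)
    cheaper = "self-host" if api > INFRA_COST else "API"  # ties go to the API
    print(f"{volume / 1e6:.0f}M tokens: API ${api:,.0f} vs infra ${INFRA_COST:,.0f} -> {cheaper}")
```

This linear model ignores that self-hosted capacity is finite; past a certain volume the infrastructure bill steps up too.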

Practical checklist: how to evaluate a coding LLM for your team

- Decide on a hosting model: cloud vs. local. If your codebase contains secrets or regulated data, prefer models that can run behind your firewall.

- Check IDE integration: prefer tools with tight editor extensions for inline, repo-aware suggestions.

- Confirm governance and training settings: can you opt out of model training and control data retention?

- Measure with tests: run a small pilot with objective metrics such as time to complete tasks, test pass rates, and the number of suggested fixes accepted.

- Add CI/QA gates: require human review and passing tests for all AI-generated PRs.
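For the pilot step, the metrics named above are simple to compute once each task is logged. The sketch below assumes a hypothetical per-task record (minutes spent, whether tests passed, suggestions offered and accepted) and compares a control group with an AI-assisted group; the field names and sample numbers are illustrative only.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskRecord:
    minutes: float             # time to complete the task
    tests_passed: bool         # did the final change pass the test suite?
    suggestions_offered: int   # AI suggestions shown (0 for the control group)
    suggestions_accepted: int


def summarize(records: list[TaskRecord]) -> dict[str, float]:
    offered = sum(r.suggestions_offered for r in records)
    accepted = sum(r.suggestions_accepted for r in records)
    return {
        "median_minutes": median(r.minutes for r in records),
        "test_pass_rate": sum(r.tests_passed for r in records) / len(records),
        # Guard against division by zero for groups that saw no suggestions.
        "acceptance_rate": accepted / offered if offered else 0.0,
    }


# Hypothetical pilot data.
control = [TaskRecord(60, True, 0, 0), TaskRecord(45, False, 0, 0), TaskRecord(50, True, 0, 0)]
assisted = [TaskRecord(40, True, 10, 6), TaskRecord(35, True, 8, 2), TaskRecord(55, False, 12, 4)]

print("control: ", summarize(control))
print("assisted:", summarize(assisted))
```

With only a handful of tasks per group any difference is noise; the point is consistent bookkeeping, and a real pilot needs enough tasks to compare the groups meaningfully.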

Prognosis (what to expect through 2026)

- Faster release cadence; specialization wins. Vendors will ship coding-specialist variants more frequently, and we’ll see more “agentic” models that can orchestrate tests, run linters, and fix issues autonomously. The trend toward frequent, incremental developer-focused updates is already visible across the major providers.

- Open-source models gain operational share. As the Qwen and Code Llama families mature, more teams will adopt local hosting to avoid per-token costs and to meet compliance or latency requirements. Expect richer third-party IDE integrations for these open models in 2025–2026.

- Enterprise standardization around a few platforms. Large organizations will standardize on one or two providers (Copilot/OpenAI plus a local fallback) to balance productivity and governance. GitHub Copilot’s already-broad enterprise footprint makes it a natural anchor for many teams.

- More emphasis on verification pipelines. Because LLMs will keep mixing correct suggestions with occasional mistakes, teams will institutionalize verification practices: automatic unit-test generation, provenance tracking, license scanning, and mandatory human review for critical code paths.

- Economic reshaping of developer work. AI augmentation will shift developer work toward higher-level architecture, integration, and review. Some routine coding may be automated, but demand will rise for engineers who can evaluate, orchestrate, and secure AI-generated code.
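The verification practices above (provenance tracking, license scanning, mandatory human review for critical paths) can be enforced as a merge gate. Below is a minimal sketch of such a policy check; the `PullRequest` fields, the `ai_generated` provenance flag, and the `CRITICAL_PATHS` globs are all hypothetical and would map onto whatever your CI system and code host actually expose.

```python
from dataclasses import dataclass
from fnmatch import fnmatch

# Hypothetical globs for code paths that always need a human reviewer.
# Note: fnmatch's "*" also matches "/", so subdirectories are covered too.
CRITICAL_PATHS = ["src/auth/*", "src/payments/*", "migrations/*"]


@dataclass
class PullRequest:
    changed_files: list[str]
    ai_generated: bool        # provenance flag, e.g. set from a PR label (hypothetical)
    tests_passed: bool
    license_scan_clean: bool
    human_approvals: int = 0


def gate(pr: PullRequest) -> list[str]:
    """Return the reasons the PR is blocked; an empty list means it may merge."""
    problems: list[str] = []
    if not pr.tests_passed:
        problems.append("tests failing")
    if not pr.license_scan_clean:
        problems.append("license scan flagged code")
    touches_critical = any(
        fnmatch(path, pattern) for path in pr.changed_files for pattern in CRITICAL_PATHS
    )
    # AI-generated changes and critical-path changes both require human review.
    if (pr.ai_generated or touches_critical) and pr.human_approvals < 1:
        problems.append("human review required")
    return problems


pr = PullRequest(["src/auth/login.py"], ai_generated=True, tests_passed=True, license_scan_clean=True)
print(gate(pr))  # ['human review required']
pr.human_approvals = 1
print(gate(pr))  # []
```

Returning a list of reasons rather than a boolean lets the CI job report every blocking issue at once.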
