AI Is Already the Top Data Exfiltration Channel — A Fresh Analysis and What Comes Next

Recent telemetry-based research from LayerX shows that generative AI tools (ChatGPT, Claude, Copilot) have rapidly become a dominant and largely uncontrolled data-exfiltration channel inside many enterprises. This report synthesizes the public findings, then adds forecasts and practical advice for security teams.

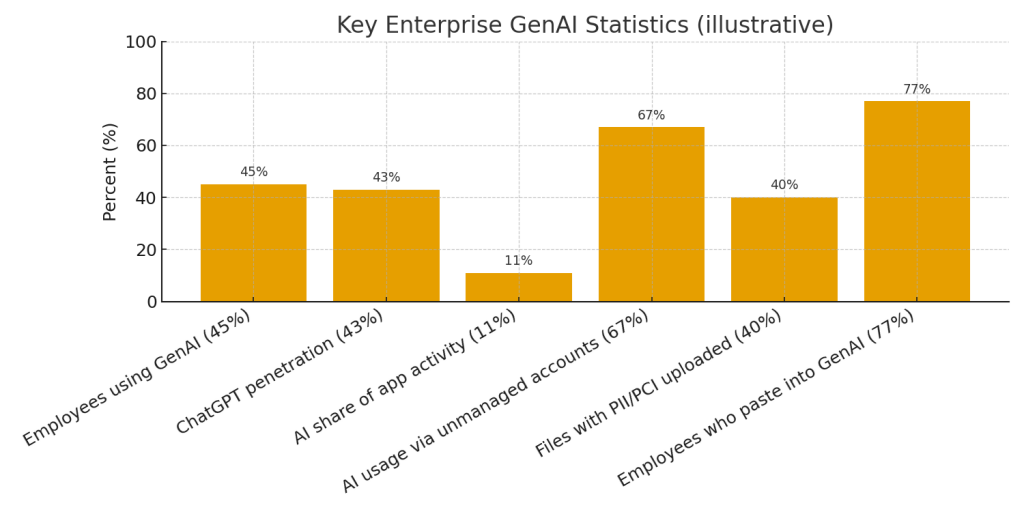

Headline findings

- Roughly 45% of employees use generative AI tools at work, and ChatGPT alone reaches about 43% of the sampled workforce.
- About two-thirds (≈67%) of AI activity runs through unmanaged accounts: organizations lose visibility because employees use personal accounts or bypass federation.
- The primary leakage vector is copy/paste into GenAI, not file upload: about 77% of employees paste content into GenAI tools, and most of that happens through unmanaged accounts.

What the data really tells us (expanded reading)

The LayerX telemetry paints a clear picture: GenAI adoption grew far faster than previous enterprise tools, but governance failed to keep pace. A few numbers stand out:

- Adoption: ~45% of employees now use one or more generative AI tools in the workplace; ChatGPT alone accounts for roughly 43% penetration in the population sampled.
- Activity share: AI contributes roughly 11% of total enterprise application activity, meaning it’s already on par with commonly monitored app categories like file-sharing.
- Unmanaged use: ~67% of AI sessions run through personal accounts, a governance gap that leaves CISOs blind to who is sharing what outside enterprise controls.
- Sensitive content flows: Approximately 40% of files uploaded to GenAI tools contain PII or PCI-like data, and 77% of employees paste content into GenAI, averaging some 14 pastes per day from unmanaged accounts, with several of those pastes likely containing sensitive data.

(The figures above are drawn from LayerX’s reported telemetry as summarized in public coverage.)

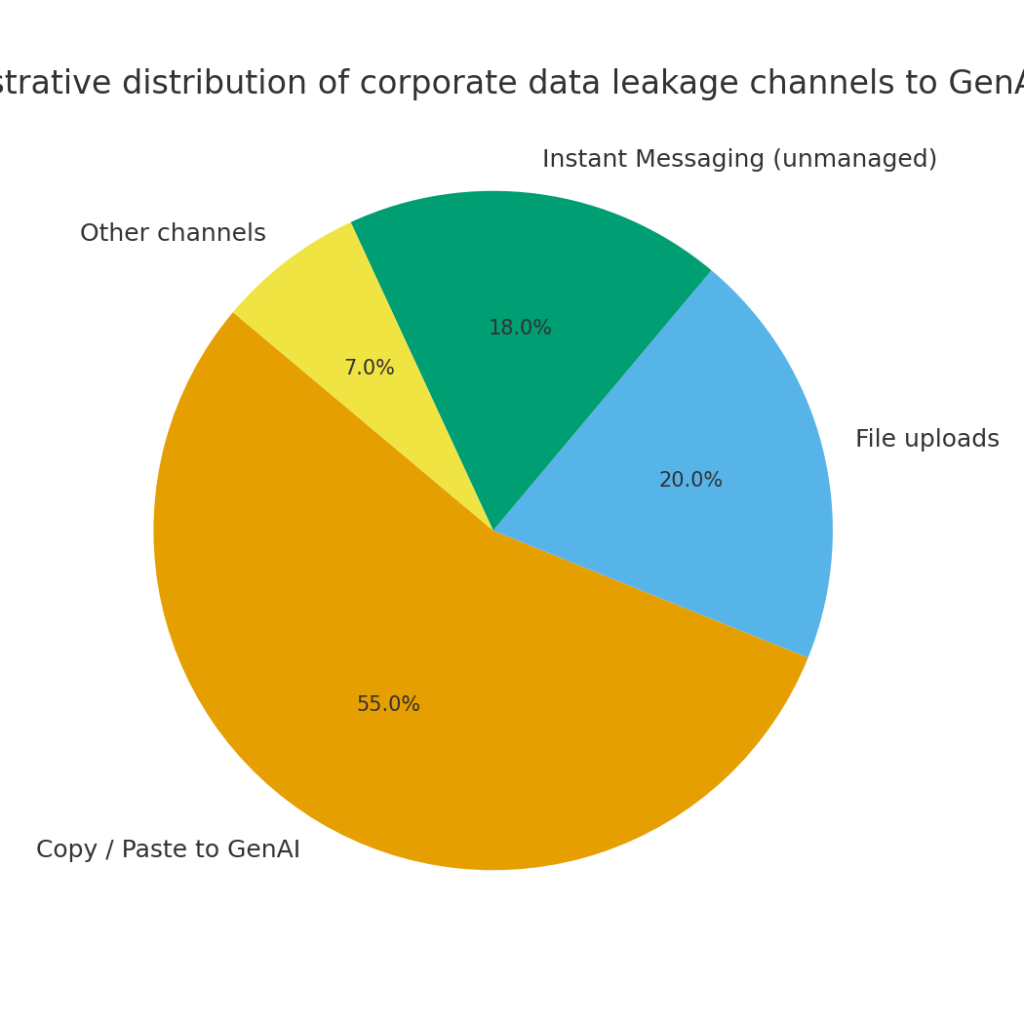

Why copy/paste is the dominant risk (and why traditional DLP fails)

Classic DLP systems were built for files, email attachments, and sanctioned uploads. But modern knowledge work is fluid: employees switch between browser tabs, grab snippets of invoices, or paste customer PII into a chat window to ask for a summarization or a rewrite. That “file-less” behavior is invisible to tools that assume file movement as the primary vector.

LayerX’s statistics show that copy/paste — particularly through unmanaged accounts — now outstrips uploads as the top leakage channel. The implication is stark: enterprises that keep their DLP posture file-centric are monitoring the wrong battlefield.
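To make the difference between file inspection and paste inspection concrete, here is a minimal sketch of a check that runs on a pasted snippet rather than on a file. It is an illustration only: the function names (`scan_paste`, `luhn_valid`) and the two regular expressions are assumptions for this example, not part of the LayerX research or of any specific DLP product, and real detection rules would be much broader.

```python
import re

# Hypothetical patterns for illustration; production DLP rules are far richer.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan_paste(text: str) -> dict:
    """Count likely email addresses and card numbers in a pasted snippet."""
    emails = EMAIL_RE.findall(text)
    cards = []
    for match in CARD_RE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            cards.append(digits)
    return {"emails": len(emails), "card_numbers": len(cards)}


if __name__ == "__main__":
    # 4111 1111 1111 1111 is a widely used test card number, not a real one.
    print(scan_paste("Refund jane@example.com, card 4111 1111 1111 1111"))
```

The point of the sketch is where the check runs: on the text a user is about to submit, which a file-centric control never sees. The Luhn checksum is used here only to cut down false positives on arbitrary digit runs.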

Two stacked problems: scale and identity

- Scale: GenAI UX lowers the friction for troubleshooting and fix-up tasks, so employees paste more, experiment more, and move more data.
- Identity: Personal accounts, non-federated logins, and bypassed SSO make identity-based controls ineffective. The report notes that a large share of CRM and ERP logins happen without federation, so corporate and personal sessions are effectively indistinguishable in practice.

Both factors multiply exposure: every copied snippet is a potential leak, and every unmanaged session is a blind spot.
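The identity problem can be expressed as a simple classification rule. The sketch below assumes a hypothetical session record with two fields (the account email and whether the login went through the corporate identity provider) and a placeholder domain list; none of these names come from the report, and the definition of "managed" is one plausible reading of it.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical value: replace with the organization's own domains.
CORPORATE_DOMAINS = {"example.com"}


@dataclass
class Session:
    account_email: str
    via_sso: bool  # True if the login went through the corporate IdP


def is_managed(session: Session) -> bool:
    """Treat a session as managed only if it is federated and on a corporate domain."""
    domain = session.account_email.rsplit("@", 1)[-1].lower()
    return session.via_sso and domain in CORPORATE_DOMAINS


def unmanaged_share(sessions: List[Session]) -> float:
    """Fraction of sessions that fall outside enterprise identity controls."""
    if not sessions:
        return 0.0
    unmanaged = sum(1 for s in sessions if not is_managed(s))
    return unmanaged / len(sessions)


if __name__ == "__main__":
    sample = [
        Session("jdoe@example.com", via_sso=True),    # managed
        Session("jdoe@example.com", via_sso=False),   # corporate email, no federation
        Session("jdoe@personal.example", via_sso=False),  # personal account
    ]
    print(f"{unmanaged_share(sample):.0%} unmanaged")
```

Note the second sample session: a corporate email address without federation still counts as unmanaged under this rule, which is the blind spot the report describes for non-federated logins.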

Fresh analysis and projections (added value)

Based on the reported telemetry and reasonable adoption curves, here are three data-driven projections organizations should plan for:

-

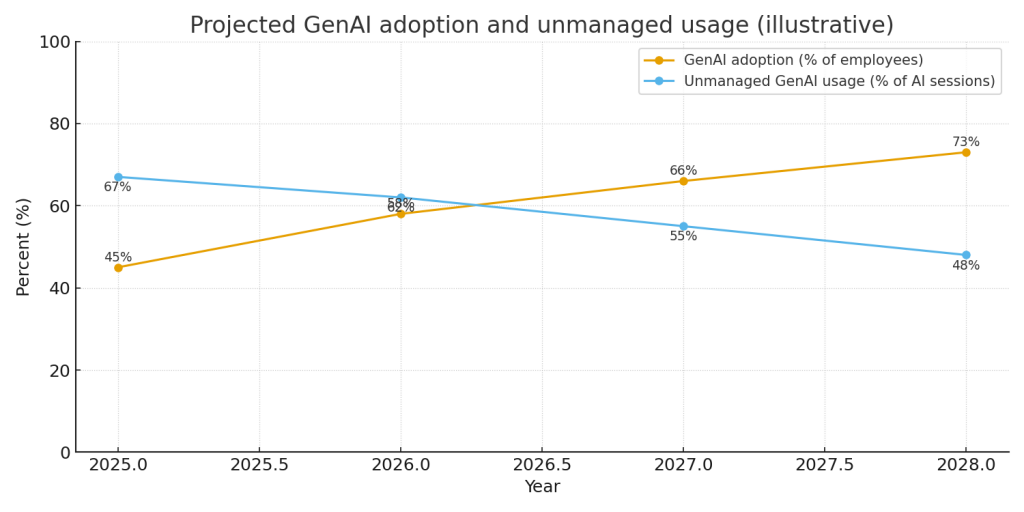

Adoption will keep rising — fast. If current growth continues, expect GenAI usage among enterprise employees to rise from ~45% (2025) to 60–75% by 2027, with higher penetration in knowledge-intensive roles (engineering, marketing, legal). (Illustrative projection in the third chart above.)

-

Unmanaged use will decline but remain material. With targeted governance (SSO enforcement, blocking personal accounts, contextual DLP), unmanaged sessions can fall — perhaps from ~67% to under 50% in well-managed shops by 2027 — but globally, many orgs will lag. That means the residual unmanaged use will remain a significant risk vector for several years. (See forecast chart.)

-

Copy/paste will remain dominant unless DLP evolves. File-centric DLP alone will not stop leakage. Expect the emergence of action-centric DLP solutions — tools that inspect clipboard activity, browser context, and prompt-generation flows — plus RAG-aware data access controls. Innovative vendors and internal security teams will push these capabilities into browser agents and cloud access security brokers (CASBs).
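The arithmetic behind the first two projections is easy to check. The sketch below takes the endpoints stated above (45% to 60–75% adoption, 67% to 50% unmanaged, 2025 to 2027) and derives the implied annual growth rate and a straight-line yearly path. The helper names are hypothetical, the straight-line path is an assumption made for illustration, and 50% is used as the upper bound of "under 50%".

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate needed to move from start to end."""
    return (end / start) ** (1 / years) - 1


def linear_path(start: float, end: float, first_year: int, last_year: int) -> dict:
    """Evenly spaced yearly values between two endpoints (illustrative only)."""
    span = last_year - first_year
    return {
        first_year + i: round(start + (end - start) * i / span, 1)
        for i in range(span + 1)
    }


# Adoption: 45% in 2025 to 60-75% in 2027 (endpoints from the projection above).
low = implied_annual_growth(45, 60, 2)
high = implied_annual_growth(45, 75, 2)
print(f"Implied adoption growth: {low:.1%} to {high:.1%} per year")

# Unmanaged share: 67% in 2025 falling to 50% in 2027.
print(linear_path(67, 50, 2025, 2027))
```

Under these assumptions the adoption range implies relative growth of roughly 15% to 29% per year, and a straight-line decline in unmanaged use passes through 58.5% in 2026, which can serve as an interim KPI.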

Recommendations: practical steps security teams should take now

- Treat GenAI as a first-class security category. Move it into the same governance tier as email and cloud storage. Apply visibility, alerting and policy controls.
- Block or manage personal accounts. Enforce SSO/federation for all high-risk SaaS and limit the use of unmanaged accounts on corporate devices and networks.
- Shift to action-centric DLP. Extend DLP to monitor copy/paste, typed prompts, and chat transcripts. Consider endpoint agents or browser extensions that can flag or redact sensitive content before it leaves the corporate boundary.
- Prioritize high-risk verticals and workflows. Focus initial controls on finance, customer support, legal, and any service that regularly handles PII/PCI.
- Integrate GenAI monitoring into existing SIEM/SOAR. Feed GenAI telemetry into centralized tooling and create automated playbooks for risky events.
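As a rough illustration of the last recommendation, the sketch below builds a structured event for a single GenAI paste and maps it to a playbook step. Everything here is hypothetical: the field names, the severity rule, and the action names are assumptions for the example and would need to be aligned with the schema and playbooks of the SIEM/SOAR actually in use.

```python
import json
from datetime import datetime, timezone


def build_event(user: str, app: str, managed: bool, findings: dict) -> dict:
    """Assemble a structured event describing one GenAI paste (hypothetical schema)."""
    sensitive = sum(findings.values())
    if sensitive and not managed:
        severity = "high"      # sensitive data sent through an unmanaged account
    elif sensitive:
        severity = "medium"    # sensitive data, but inside a managed account
    else:
        severity = "low"
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "category": "genai_paste",
        "user": user,
        "app": app,
        "managed_account": managed,
        "findings": findings,
        "severity": severity,
    }


def playbook_action(event: dict) -> str:
    """Map severity to a response step (action names are illustrative)."""
    actions = {"high": "block_and_notify", "medium": "warn_user", "low": "log_only"}
    return actions[event["severity"]]


if __name__ == "__main__":
    event = build_event("jdoe", "chatgpt", False, {"emails": 1, "card_numbers": 1})
    print(json.dumps(event, indent=2))  # JSON line ready to forward to a collector
    print(playbook_action(event))
```

Combining the identity signal (managed or not) with the content signal (what was found) in one event keeps the playbook logic simple and reflects the two stacked problems discussed earlier.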

Visuals (what the three charts show)

- Chart 1 (key GenAI enterprise stats): A bar chart summarizing adoption, penetration, activity share and unmanaged usage.
- Chart 2 (leakage channels): A pie chart illustrating copy/paste’s dominance vs file uploads and messaging. This visual helps non-technical leaders grasp the shift from file transfers to action-based leaks.
- Chart 3 (forecast): A line chart projecting adoption growth and a plausible decline in unmanaged usage under moderate governance. Use it to set target KPIs for 2026–2027.

Closing note — governance is a race against human behavior

The core problem is social as much as technical. Tools will continue to make it trivially easy to paste, summarize and transform sensitive content. Security teams must match that ease with friction where necessary: identity gating, contextual redaction, and real-time feedback to users. Those controls — combined with modern DLP that understands actions rather than just files — will determine whether enterprises preserve control over their data as GenAI becomes fully embedded in workflows.